Instantly share code, notes, and snippets.

shantanuatgit / Assignment3.py

- Download ZIP

- Star 0 You must be signed in to star a gist

- Fork 0 You must be signed in to fork a gist

- Embed Embed this gist in your website.

- Share Copy sharable link for this gist.

- Clone via HTTPS Clone using the web URL.

- Learn more about clone URLs

- Save shantanuatgit/2054ad91d1b502bae4a8965d6fb297e1 to your computer and use it in GitHub Desktop.

Browse Course Material

Course info.

- Dr. Casey Rodriguez

Departments

- Mathematics

As Taught In

- Mathematical Analysis

Learning Resource Types

Real analysis, assignment 3 (pdf).

You are leaving MIT OpenCourseWare

Assignment 3: Hello Vectors

Assignment 3: hello vectors #.

Welcome to this week’s programming assignment of the specialization. In this assignment we will explore word vectors. In natural language processing, we represent each word as a vector consisting of numbers. The vector encodes the meaning of the word. These numbers (or weights) for each word are learned using various machine learning models, which we will explore in more detail later in this specialization. Rather than make you code the machine learning models from scratch, we will show you how to use them. In the real world, you can always load the trained word vectors, and you will almost never have to train them from scratch. In this assignment you will

Predict analogies between words.

Use PCA to reduce the dimensionality of the word embeddings and plot them in two dimensions.

Compare word embeddings by using a similarity measure (the cosine similarity).

Understand how these vector space models work.

1.0 Predict the Countries from Capitals #

During the presentation of the module, we have illustrated the word analogies by finding the capital of a country from the country. In this part of the assignment we have changed the problem a bit. You are asked to predict the countries that corresponde to some capitals . You are playing trivia against some second grader who just took their geography test and knows all the capitals by heart. Thanks to NLP, you will be able to answer the questions properly. In other words, you will write a program that can give you the country by its capital. That way you are pretty sure you will win the trivia game. We will start by exploring the data set.

Important Note on Submission to the AutoGrader #

Before submitting your assignment to the AutoGrader, please make sure you are not doing the following:

You have not added any extra print statement(s) in the assignment.

You have not added any extra code cell(s) in the assignment.

You have not changed any of the function parameters.

You are not using any global variables inside your graded exercises. Unless specifically instructed to do so, please refrain from it and use the local variables instead.

You are not changing the assignment code where it is not required, like creating extra variables.

If you do any of the following, you will get something like, Grader not found (or similarly unexpected) error upon submitting your assignment. Before asking for help/debugging the errors in your assignment, check for these first. If this is the case, and you don’t remember the changes you have made, you can get a fresh copy of the assignment by following these instructions .

1.1 Importing the data #

As usual, you start by importing some essential Python libraries and the load dataset. The dataset will be loaded as a Pandas DataFrame , which is very a common method in data science. Because of the large size of the data, this may take a few minutes.

To Run This Code On Your Own Machine: #

Note that because the original google news word embedding dataset is about 3.64 gigabytes, the workspace is not able to handle the full file set. So we’ve downloaded the full dataset, extracted a sample of the words that we’re going to analyze in this assignment, and saved it in a pickle file called word_embeddings_capitals.p

If you want to download the full dataset on your own and choose your own set of word embeddings, please see the instructions and some helper code.

Download the dataset from this page .

Search in the page for ‘GoogleNews-vectors-negative300.bin.gz’ and click the link to download.

You’ll need to unzip the file.

Copy-paste the code below and run it on your local machine after downloading the dataset to the same directory as the notebook.

Now we will load the word embeddings as a Python dictionary . As stated, these have already been obtained through a machine learning algorithm.

Each of the word embedding is a 300-dimensional vector.

Predict relationships among words #

Now you will write a function that will use the word embeddings to predict relationships among words.

The function will take as input three words.

The first two are related to each other.

It will predict a 4th word which is related to the third word in a similar manner as the two first words are related to each other.

As an example, “Athens is to Greece as Bangkok is to ______”?

You will write a program that is capable of finding the fourth word.

We will give you a hint to show you how to compute this.

A similar analogy would be the following:

You will implement a function that can tell you the capital of a country. You should use the same methodology shown in the figure above. To do this, you’ll first compute the cosine similarity metric or the Euclidean distance.

1.2 Cosine Similarity #

The cosine similarity function is:

\(A\) and \(B\) represent the word vectors and \(A_i\) or \(B_i\) represent index i of that vector. Note that if A and B are identical, you will get \(cos(\theta) = 1\) .

Otherwise, if they are the total opposite, meaning, \(A= -B\) , then you would get \(cos(\theta) = -1\) .

If you get \(cos(\theta) =0\) , that means that they are orthogonal (or perpendicular).

Numbers between 0 and 1 indicate a similarity score.

Numbers between -1 and 0 indicate a dissimilarity score.

Instructions : Implement a function that takes in two word vectors and computes the cosine distance.

- Python's NumPy library adds support for linear algebra operations (e.g., dot product, vector norm ...).

- Use numpy.dot .

- Use numpy.linalg.norm .

Expected Output :

\(\approx\) 0.651095

1.3 Euclidean distance #

You will now implement a function that computes the similarity between two vectors using the Euclidean distance. Euclidean distance is defined as:

\(n\) is the number of elements in the vector

\(A\) and \(B\) are the corresponding word vectors.

The more similar the words, the more likely the Euclidean distance will be close to 0.

Instructions : Write a function that computes the Euclidean distance between two vectors.

Expected Output:

1.4 Finding the country of each capital #

Now, you will use the previous functions to compute similarities between vectors, and use these to find the capital cities of countries. You will write a function that takes in three words, and the embeddings dictionary. Your task is to find the capital cities. For example, given the following words:

1: Athens 2: Greece 3: Baghdad,

your task is to predict the country 4: Iraq.

Instructions :

To predict the capital you might want to look at the King - Man + Woman = Queen example above, and implement that scheme into a mathematical function, using the word embeddings and a similarity function.

Iterate over the embeddings dictionary and compute the cosine similarity score between your vector and the current word embedding.

You should add a check to make sure that the word you return is not any of the words that you fed into your function. Return the one with the highest score.

Expected Output: (Approximately)

(‘Egypt’, 0.7626821)

1.5 Model Accuracy #

Now you will test your new function on the dataset and check the accuracy of the model:

Instructions : Write a program that can compute the accuracy on the dataset provided for you. You have to iterate over every row to get the corresponding words and feed them into you get_country function above.

- Use pandas.DataFrame.iterrows .

NOTE: The cell below takes about 30 SECONDS to run.

\(\approx\) 0.92

3.0 Plotting the vectors using PCA #

Now you will explore the distance between word vectors after reducing their dimension. The technique we will employ is known as principal component analysis (PCA) . As we saw, we are working in a 300-dimensional space in this case. Although from a computational perspective we were able to perform a good job, it is impossible to visualize results in such high dimensional spaces.

You can think of PCA as a method that projects our vectors in a space of reduced dimension, while keeping the maximum information about the original vectors in their reduced counterparts. In this case, by maximum infomation we mean that the Euclidean distance between the original vectors and their projected siblings is minimal. Hence vectors that were originally close in the embeddings dictionary, will produce lower dimensional vectors that are still close to each other.

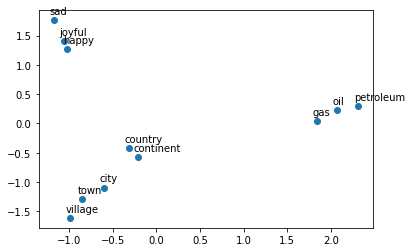

You will see that when you map out the words, similar words will be clustered next to each other. For example, the words ‘sad’, ‘happy’, ‘joyful’ all describe emotion and are supposed to be near each other when plotted. The words: ‘oil’, ‘gas’, and ‘petroleum’ all describe natural resources. Words like ‘city’, ‘village’, ‘town’ could be seen as synonyms and describe a similar thing.

Before plotting the words, you need to first be able to reduce each word vector with PCA into 2 dimensions and then plot it. The steps to compute PCA are as follows:

Mean normalize the data

Compute the covariance matrix of your data ( \(\Sigma\) ).

Compute the eigenvectors and the eigenvalues of your covariance matrix

Multiply the first K eigenvectors by your normalized data. The transformation should look something as follows:

You will write a program that takes in a data set where each row corresponds to a word vector.

The word vectors are of dimension 300.

Use PCA to change the 300 dimensions to n_components dimensions.

The new matrix should be of dimension m, n_componentns .

First de-mean the data

Get the eigenvalues using linalg.eigh . Use ‘eigh’ rather than ‘eig’ since R is symmetric. The performance gain when using eigh instead of eig is substantial.

Sort the eigenvectors and eigenvalues by decreasing order of the eigenvalues.

Get a subset of the eigenvectors (choose how many principle components you want to use using n_components).

Return the new transformation of the data by multiplying the eigenvectors with the original data.

- Use numpy.mean(a,axis=None) : If you set axis = 0 , you take the mean for each column. If you set axis = 1 , you take the mean for each row. Remember that each row is a word vector, and the number of columns are the number of dimensions in a word vector.

- Use numpy.cov(m, rowvar=True) . This calculates the covariance matrix. By default rowvar is True . From the documentation: "If rowvar is True (default), then each row represents a variable, with observations in the columns." In our case, each row is a word vector observation, and each column is a feature (variable).

- Use numpy.linalg.eigh(a, UPLO='L')

- Use numpy.argsort sorts the values in an array from smallest to largest, then returns the indices from this sort.

- In order to reverse the order of a list, you can use: x[::-1] .

- To apply the sorted indices to eigenvalues, you can use this format x[indices_sorted] .

- When applying the sorted indices to eigen vectors, note that each column represents an eigenvector. In order to preserve the rows but sort on the columns, you can use this format x[:,indices_sorted]

- To transform the data using a subset of the most relevant principle components, take the matrix multiplication of the eigenvectors with the original data.

- The data is of shape (n_observations, n_features) .

- The subset of eigenvectors are in a matrix of shape (n_features, n_components) .

- To multiply these together, take the transposes of both the eigenvectors (n_components, n_features) and the data (n_features, n_observations).

- The product of these two has dimensions (n_components,n_observations) . Take its transpose to get the shape (n_observations, n_components) .

Your original matrix was: (3,10) and it became:

Now you will use your pca function to plot a few words we have chosen for you. You will see that similar words tend to be clustered near each other. Sometimes, even antonyms tend to be clustered near each other. Antonyms describe the same thing but just tend to be on the other end of the scale They are usually found in the same location of a sentence, have the same parts of speech, and thus when learning the word vectors, you end up getting similar weights. In the next week we will go over how you learn them, but for now let’s just enjoy using them.

Instructions: Run the cell below.

What do you notice?

The word vectors for gas, oil and petroleum appear related to each other, because their vectors are close to each other. Similarly, sad, joyful and happy all express emotions, and are also near each other.

Assignment 3: SAT Problems Solving

In logic or computer science, the Boolean Satisfiability Problem (abbreviated as SAT in this assignment) is to determine whether or not a given propositional logic formulae is true, and to further determine the model which makes the formulae true. The program or tool to answer the SAT problem is called an SAT solver. In this assignment, we'll learn how a SAT solver works and how to encode propositions and check its satisfiability by such SAT solvers. And as applications, we will also learn how to model practical problems by satisfiability, and thus solve them with the aid of SAT solvers.

There are many SAT/SMT solvers available, each with its pros and cons. The solver we'll be using in this assignment is the Z3 theorem solver/prover , developed by Microsoft Research. There is no special reason for us to choose Z3, any other SAT solvers will also be OK, but Z3's Python APIs will be very convenient.

This assignment is divided into four parts, each of which contains both some tutorials and problems. The first part is the SAT encoding of the basic propositions; the second part covers validity checking; part three covers the DPLL algorithm implementation; and the fourth part covers some SAT applications. Some problems are tagged with Exercise , which you should solve. And several problems are tagged with Challenge , which are optional.

Before starting with this assignment, make sure you've finished Software Setup in the assignment 1, and have Z3 and Python properly installed on your computer. For any installation problems, please feel free to contact us for help. As we'll be using Z3's Python-based API; you may find it useful to refer to the z3py tutorial and the documentation for Z3's Python API .

Hand-in Procedure

When you finished the assignment, zip you code files with file name studentid-assignment3.zip (e.g SA19225111-assignment3.zip ), and submit it to Postgraduate Information Platform . The deadline TBA (Beijing time). Any late submission will NOT be accepted.

Part A: Basic Propositional Logic

In this section, let's start by learning how to solve basic propositional logic problems with Z3.

Declare propositions:

In Z3, we can declare two propositions to be just booleans, this is rather natural, for propositions can have values true or false. To declare two propositions P and Q :

Or, we can use a more compact shorthand:

Build propositions with connectives:

Z3 supports connectives: /\(And) , \/(Or) , ~(Not) , ->(Implies) , along with several others. We can build propositions by writing Lisp-style abstract syntax trees directly, for example, the disjunction: P \/ Q (Note that the connective \/ is called Or in Z3) can be encoded as the following AST:

Usage of Solve:

Example A-1: The simplest usage for Z3 is to feed the proposition to Z3 directly, to check the satisfiability, this can be done by calling the solve() function, the solve() function will create an instance of solver, check the satisfiability of the proposition, and output a model if that proposition is satisfiable, the code looks like:

For the above call, Z3 will output something like this:

which is a model with assignments to proposition P and Q that makes the proposition F satisfiable. Obviously, this is just one of several possible models.

Example A-2: Not all propositions are satisfiable, consider this proposition:

Z3 will output:

Obtain More Possible Solutions:

Consider again example A-1 in the previous:

After all, we're asking Z3 about the satisfiability of the proposition, so one row of evidence is enough. What if we want Z3 to output all the assignment of propositions that make the proposition satisfiable, not just the first one? For the above example, we want the all first 3 rows. Here is the trick: when we get an answer, we negate the answer, make a conjunction with the original proposition, then check the satisfiability again. For the above example:

The output will obtained all the 3 possible solutions:

Part B: SAT And Validity

In Exercise 1, we've learned how to represent propositions in Z3 and how to use Z3 to obtain the solutions that make a given proposition satisfiable. In this part, we continue to discuss how to use Z3 to check the validity of propositions. Recall in exercise 2, we once used theorem prover to prove the validity of propositions, so this is another strategy.

Example B-1: As we've discussed in previous lecture, the relationship between the validity and satisfiability of a proposition P is established by: valid(P) unsat(~P) Let consider our previous example:

Z3 will output the following solution: [P = False, Q = False] the fact that ~F is satisfiable means that the proposition F is not valid. By this, it should be very clear how to use solvers like Z3 to prove the validity of a proposition.

Example B-2: Now we try to prove the double negation law( ~~P -> P ) is valid:

Part C: The DPLL algorithm

- nnf(P) : to convert the proposition P into negation normal form;

- cnf(P) : to convert the proposition P into conjunction normal form;

- dpll(P) : to calculate the satisfiability P of the proposition P ;

After finishing this algorithm to experiment how large propositions your algorithm can solve. For instance, you can generate some very large propositions using this generator and feed the generated propositions to your solver.

How to make you DPLL more efficient, one idea to make your solver concurrent. To be specific, for the splitting step, to the two cases, instead of using two sequential calls, we can create two threads/processes to do concurrent calls.

Part D: Applications

In the previous part we've discussed how to obtain solutions and prove the validity for propositions, and implemented the DPLL algorithm. In this part, we will try to use Z3 to solve some practical problems.

Usually when engineers design circuit layouts, they need do some verifications to make sure those layouts will not only output a permanent electrical signal since it's useless. We want to guarantee that the layouts can output different signals based on the inputs.

As the graph above shows, there are three kinds of logic gates used in design circuit layout, And, Or, Not. And there four inputs in the graph, A, B, C and D.

- Alice can not sit near to Carol;

- Bob can not sit right to Alice.

- Is there any possible arrangement?

- How many possible different arrangement in total?

- one person can only takes just one seat;

- one seat can only be taken by one person;

- each row has just 1 queen;

- each column has just 1 queen;

- each diagonal has at most 1 queen;

- each anti-diagonal has at most 1 queen;

- How long does your program take to solve 8-queen?

- How long does your program take to solve 100-queens?

- What's the maximal of N your program can solve?

Happy hacking!

API for Assignments 3.1 and 3.2

This page describes the endpoints for the API you will use as a client in assignment 3.1 and implement in assignment 3.2.

GET /users/:id

Post /users, patch /users/:id, get /users/:id/feed, post /users/:id/posts, post /users/:id/follow, delete /users/:id/follow, general notes.

- All endpoints return JSON. The response will always be an object (so e.g. if an endpoint returns an array, it will be wrapped in an object with a single key/value pair).

- When the endpoint returns an error status, the response body will be an object with an error key, whose value is a human-readable error message.

- In the examples, lines that begin with > are the request, and lines with no prefix are the response.

- Unless stated explicitly, elements in an array or key/value pairs in an object are in no particular order.

An endpoint to confirm the API is running. You won't have to use it, but it may be useful for testing. For assignment 3.2, it is already implemented.

Returns: An object with basic info about the database.

Get a list of existing users.

Returns: An object with a single key, users , mapping to an array of user IDs.

Get the profile for the user id .

Returns: An object with the keys id , name , avatarURL (which are all strings), and following (which is an array of user IDs the given user is following).

- 404 if the user does not exist

Create a new user. The new user's name is the same as their ID, their avatar URL is the default avatar ( images/default.png ), and they are not following anyone.

Request body: An object with a single key id , whose value is the new user's ID.

Returns: Same as GET /users/:id after user is created.

- 400 if the request body is missing an id property, or the id is empty

- 400 if the user already exists

Update a user's profile (name or avatar URL). User IDs cannot be changed, but including the id in the request body is allowed.

If the specified display name is the empty string, the user's display name is reset to their user ID. If the specified avatar URL is the empty string, the user's avatar is reset to the default avatar ( images/default.png ).

Request body: An object with any number of the keys id (ignored), name , and avatarURL .

Note: Explicitly sending an empty string for name / avatarURL will set them to their defaults; not including them at all will leave them unchanged.

Returns: Same as GET /users/:id after user is updated.

Note: For assignment 3.1, our API checks if extra keys are included in the request body and returns an error if so. For assignment 3.2, you do not have to check for this case.

Returns: An object with a single key posts , mapping to an array. Each element has the following structure:

Create a new post by the given user. The post time is set to the current date/time.

Request body: An object with a single key text , containing the post text.

Returns: The exact object { "success": true } .

- 400 if the request body is missing a text property, or the text is empty

Have the user id follow the target user.

Query string: target : the ID of the user to follow

- 404 if either user id or target does not exist

- 400 if the query string is missing a target property, or the target is empty

- 400 if the user is already following the target

- 400 if the requesting user is the same as the target

Have the user id stop following the target user.

Query string: target : the ID of the user to stop following

- 404 if the user id does not exist

- 400 if the target user isn't being followed by the requesting user

Group Admins

- Show: — Everything — Updates Group Memberships Group Updates Topics Replies

- WordPress.org

- Documentation

- Learn WordPress

- Open access

- Published: 15 April 2024

Demuxafy : improvement in droplet assignment by integrating multiple single-cell demultiplexing and doublet detection methods

- Drew Neavin 1 ,

- Anne Senabouth 1 ,

- Himanshi Arora 1 , 2 ,

- Jimmy Tsz Hang Lee 3 ,

- Aida Ripoll-Cladellas 4 ,

- sc-eQTLGen Consortium ,

- Lude Franke 5 ,

- Shyam Prabhakar 6 , 7 , 8 ,

- Chun Jimmie Ye 9 , 10 , 11 , 12 ,

- Davis J. McCarthy 13 , 14 ,

- Marta Melé 4 ,

- Martin Hemberg 15 &

- Joseph E. Powell ORCID: orcid.org/0000-0002-5070-4124 1 , 16

Genome Biology volume 25 , Article number: 94 ( 2024 ) Cite this article

Metrics details

Recent innovations in single-cell RNA-sequencing (scRNA-seq) provide the technology to investigate biological questions at cellular resolution. Pooling cells from multiple individuals has become a common strategy, and droplets can subsequently be assigned to a specific individual by leveraging their inherent genetic differences. An implicit challenge with scRNA-seq is the occurrence of doublets—droplets containing two or more cells. We develop Demuxafy, a framework to enhance donor assignment and doublet removal through the consensus intersection of multiple demultiplexing and doublet detecting methods. Demuxafy significantly improves droplet assignment by separating singlets from doublets and classifying the correct individual.

Droplet-based single-cell RNA sequencing (scRNA-seq) technologies have provided the tools to profile tens of thousands of single-cell transcriptomes simultaneously [ 1 ]. With these technological advances, combining cells from multiple samples in a single capture is common, increasing the sample size while simultaneously reducing batch effects, cost, and time. In addition, following cell capture and sequencing, the droplets can be demultiplexed—each droplet accurately assigned to each individual in the pool [ 2 , 3 , 4 , 5 , 6 , 7 ].

Many scRNA-seq experiments now capture upwards of 20,000 droplets, resulting in ~16% (3,200) doublets [ 8 ]. Current demultiplexing methods can also identify doublets—droplets containing two or more cells—from different individuals (heterogenic doublets). These doublets can significantly alter scientific conclusions if they are not effectively removed. Therefore, it is essential to remove doublets from droplet-based single-cell captures.

However, demultiplexing methods cannot identify droplets containing multiple cells from the same individual (homogenic doublets) and, therefore, cannot identify all doublets in a single capture. If left in the dataset, those doublets could appear as transitional cells between two distinct cell types or a completely new cell type. Accordingly, additional methods have been developed to identify heterotypic doublets (droplets that contain two cells from different cell types) by comparing the transcriptional profile of each droplet to doublets simulated from the dataset [ 9 , 10 , 11 , 12 , 13 , 14 , 15 ]. It is important to recognise that demultiplexing methods achieve two functions—segregation of cells from different donors and separation of singlets from doublets—while doublet detecting methods solely classify singlets versus doublets.

Therefore, demultiplexing and transcription-based doublet detecting methods provide complementary information to improve doublet detection, providing a cleaner dataset and more robust scientific results. There are currently five genetic-based demultiplexing [ 2 , 3 , 4 , 5 , 6 , 7 , 16 ] and seven transcription-based doublet-detecting methods implemented in various languages [ 9 , 10 , 11 , 12 , 13 , 14 , 15 ]. Under different scenarios, each method is subject to varying performance and, in some instances, biases in their ability to accurately assign cells or detect doublets from certain conditions. The best combination of methods is currently unclear but will undoubtedly depend on the dataset and research question.

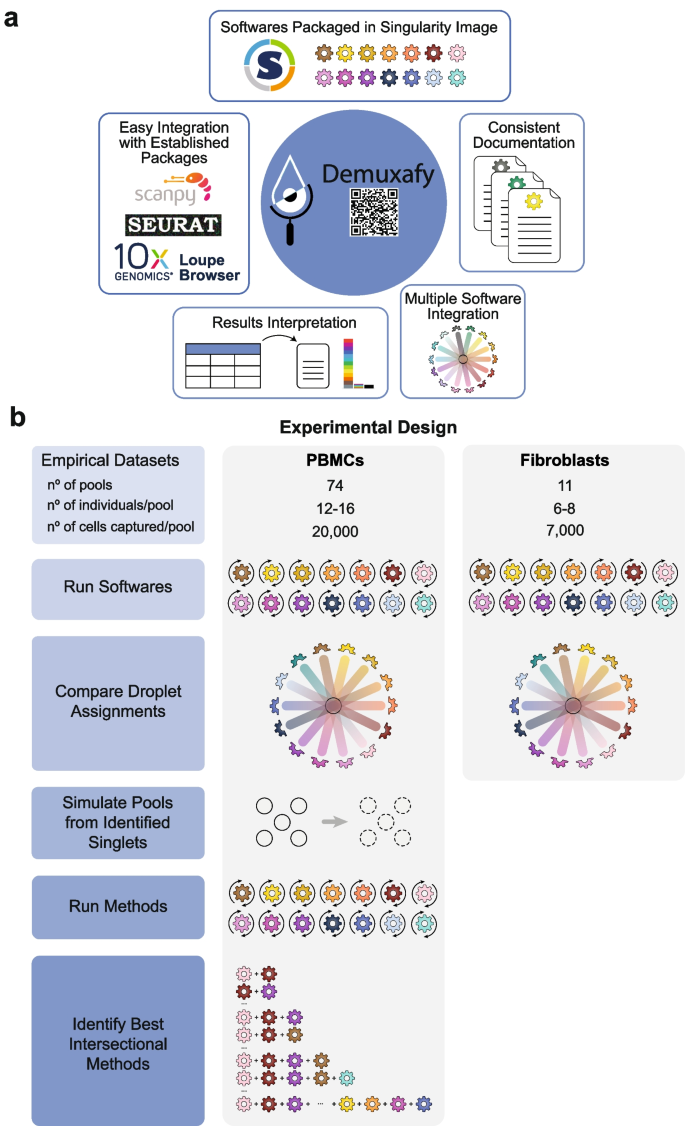

Therefore, we set out to identify the best combination of genetic-based demultiplexing and transcription-based doublet-detecting methods to remove doublets and partition singlets from different donors correctly. In addition, we have developed a software platform ( Demuxafy ) that performs these intersectional methods and provides additional commands to simplify the execution and interpretation of results for each method (Fig. 1 a).

Study design and qualitative method classifications. a Demuxafy is a platform to perform demultiplexing and doublet detecting with consistent documentation. Demuxafy also provides wrapper scripts to quickly summarize the results from each method and assign clusters to each individual with reference genotypes when a reference-free demultiplexing method is used. Finally, Demuxafy provides a script to easily combine the results from multiple different methods into a single data frame and it provides a final assignment for each droplet based on the combination of multiple methods. In addition, Demuxafy provides summaries of the number of droplets classified as singlets or doublets by each method and a summary of the number of droplets assigned to each individual by each of the demultiplexing methods. b Two datasets are included in this analysis - a PBMC dataset and a fibroblast dataset. The PBMC dataset contains 74 pools that captured approximately 20,000 droplets each with 12-16 donor cells multiplexed per pool. The fibroblast dataset contains 11 pools of roughly 7,000 droplets per pool with sizes ranging from six to eight donors per pool. All pools were processed by all demultiplexing and doublet detecting methods and the droplet and donor classifications were compared between the methods and between the PBMCs and fibroblasts. Then the PBMC droplets that were classified as singlets by all methods were taken as ‘true singlets’ and used to generate new pools in silico. Those pools were then processed by each of the demultiplexing and doublet detecting methods and intersectional combinations of demultiplexing and doublet detecting methods were tested for different experimental designs

To compare the demultiplexing and doublet detecting methods, we utilised two large, multiplexed datasets—one that contained ~1.4 million peripheral blood mononuclear cells (PBMCs) from 1,034 donors [ 17 ] and one with ~94,000 fibroblasts from 81 donors [ 18 ]. We used the true singlets from the PBMC dataset to generate new in silico pools to assess the performance of each method and the multi-method intersectional combinations (Fig. 1 b).

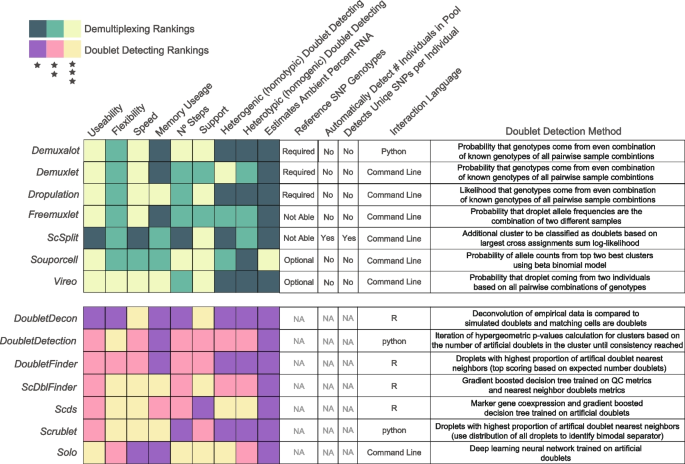

Here, we compare 14 demultiplexing and doublet detecting methods with different methodological approaches, capabilities, and intersectional combinations. Seven of those are demultiplexing methods ( Demuxalot [ 6 ], Demuxlet [ 3 ], Dropulation [ 5 ], Freemuxlet [ 16 ], ScSplit [ 7 ], Souporcell [ 4 ], and Vireo [ 2 ]) which leverage the common genetic variation between individuals to identify cells that came from each individual and to identify heterogenic doublets. The seven remaining methods ( DoubletDecon [ 9 ], DoubletDetection [ 14 ], DoubletFinder [ 10 ], ScDblFinder [ 11 ], Scds [ 12 ], Scrublet [ 13 ], and Solo [ 15 ]) identify doublets based on their similarity to simulated doublets generated by adding the transcriptional profiles of two randomly selected droplets in the dataset. These methods assume that the proportion of real doublets in the dataset is low, so combining any two droplets will likely represent the combination of two singlets.

We identify critical differences in the performance of demultiplexing and doublet detecting methods to classify droplets correctly. In the case of the demultiplexing techniques, their performance depends on their ability to identify singlets from doublets and assign a singlet to the correct individual. For doublet detecting methods, the performance is based solely on their ability to differentiate a singlet from a doublet. We identify limitations in identifying specific doublet types and cell types by some methods. In addition, we compare the intersectional combinations of these methods for multiple experimental designs and demonstrate that intersectional approaches significantly outperform all individual techniques. Thus, the intersectional methods provide enhanced singlet classification and doublet removal—a critical but often under-valued step of droplet-based scRNA-seq processing. Our results demonstrate that intersectional combinations of demultiplexing and doublet detecting software provide significant advantages in droplet-based scRNA-seq preprocessing that can alter results and conclusions drawn from the data. Finally, to provide easy implementation of our intersectional approach, we provide Demuxafy ( https://demultiplexing-doublet-detecting-docs.readthedocs.io/en/latest/index.html ) a complete platform to perform demultiplexing and doublet detecting intersectional methods (Fig. 1 a).

Study design

To evaluate demultiplexing and doublet detecting methods, we developed an experimental design that applies the different techniques to empirical pools and pools generated in silico from the combination of true singlets—droplets identified as singlets by every method (Fig. 1 a). For the first phase of this study, we used two empirical multiplexed datasets—the peripheral blood mononuclear cell (PBMC) dataset containing ~1.4 million cells from 1034 donors and a fibroblast dataset of ~94,000 cells from 81 individuals (Additional file 1 : Table S1). We chose these two cell systems to assess the methods in heterogeneous (PBMC) and homogeneous (fibroblast) cell types.

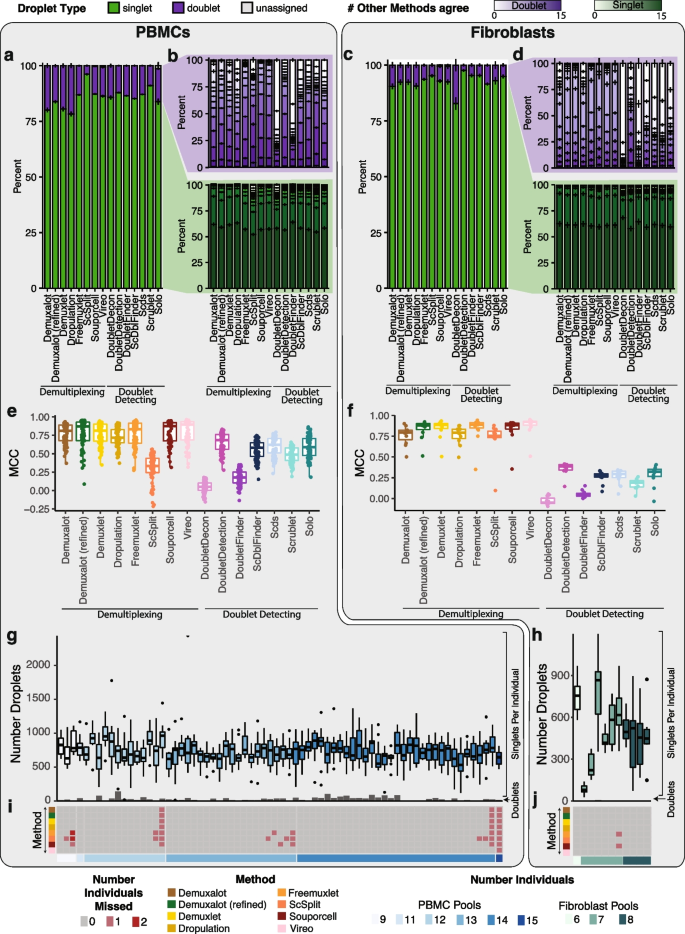

Demultiplexing and doublet detecting methods perform similarly for heterogeneous and homogeneous cell types

We applied the demultiplexing methods ( Demuxalot , Demuxlet , Dropulation , Freemuxlet , ScSplit , Souporcell , and Vireo ) and doublet detecting methods ( DoubletDecon , DoubletDetection , DoubletFinder , ScDblFinder , Scds , Scrublet , and Solo ) to the two datasets and assessed the results from each method. We first compared the droplet assignments by identifying the number of singlets and doublets identified by a given method that were consistently annotated by all methods (Fig. 2 a–d). We also identified the percentage of droplets that were annotated consistently between pairs of methods (Additional file 2 : Fig S1). In the cases where two demultiplexing methods were compared to one another, both the droplet type (singlet or doublet) and the assignment of the droplet to an individual had to match to be considered in agreement. In all other comparisons (i.e. demultiplexing versus doublet detecting and doublet detecting versus doublet detecting), only the droplet type (singlet or doublet) was considered for agreement since doublet detecting methods cannot annotate donor assignment. We found that the two method types were more similar to other methods of the same type (i.e., demultiplexing versus demultiplexing and doublet detecting versus doublet detecting) than they were to methods from a different type (demultiplexing methods versus doublet detecting methods; Supplementary Fig 1). We found that the similarity of the demultiplexing and doublet detecting methods was consistent in the PBMC and fibroblast datasets (Pearson correlation R = 0.78, P -value < 2×10 −16 ; Fig S1a-c). In addition, demultiplexing methods were more similar than doublet detecting methods for both the PBMC and fibroblast datasets (Wilcoxon rank-sum test: P < 0.01; Fig. 2 a–b and Additional file 2 : Fig S1).

Demultiplexing and Doublet Detecting Method Performance Comparison. a The proportion of droplets classified as singlets and doublets by each method in the PBMCs. b The number of other methods that classified the singlets and doublets identified by each method in the PBMCs. c The proportion of droplets classified as singlets and doublets by each method in the fibroblasts. d The number of other methods that classified the singlets and doublets identified by each method in the fibroblasts. e - f The performance of each method when the majority classification of each droplet is considered the correct annotation in the PBMCs ( e ) and fibroblasts ( f ). g - h The number of droplets classified as singlets (box plots) and doublets (bar plots) by all methods in the PBMC ( g ) and fibroblast ( h ) pools. i - j The number of donors that were not identified by each method in each pool for PBMCs ( i ) and fibroblasts ( j ). PBMC: peripheral blood mononuclear cell. MCC: Matthew’s correlationcoefficient

The number of unique molecular identifiers (UMIs) and genes decreased in droplets that were classified as singlets by a larger number of methods while the mitochondrial percentage increased in both PBMCs and fibroblasts (Additional file 2 : Fig S2).

We next interrogated the performance of each method using the Matthew’s correlation coefficient (MCC) to calculate the consistency between Demuxify and true droplet classification. We identified consistent trends in the MCC scores for each method between the PBMCs (Fig. 2 e) and fibroblasts (Fig. 2 f). These data indicate that the methods behave similarly, relative to one another, for heterogeneous and homogeneous datasets.

Next, we sought to identify the droplets concordantly classified by all demultiplexing and doublet detecting methods in the PBMC and fibroblast datasets. On average, 732 singlets were identified for each individual by all the methods in the PBMC dataset. Likewise, 494 droplets were identified as singlets for each individual by all the methods in the fibroblast pools. However, the concordance of doublets identified by all methods was very low for both datasets (Fig. 2 e–f). Notably, the consistency of classifying a droplet as a doublet by all methods was relatively low (Fig. 2 b,d,g, and h). This suggests that doublet identification is not consistent between all the methods. Therefore, further investigation is required to identify the reasons for these inconsistencies between methods. It also suggests that combining multiple methods for doublet classification may be necessary for more complete doublet removal. Further, some methods could not identify all the individuals in each pool (Fig. 2 i–j). The non-concordance between different methods demonstrates the need to effectively test each method on a dataset where the droplet types are known.

Computational resources vary for demultiplexing and doublet detecting methods

We recorded each method’s computational resources for the PBMC pools, with ~20,000 cells captured per pool (Additional file 1 : Table S1). Of the demultiplexing methods, ScSplit took the most time (multiple days) and required the most steps, but Demuxalot , Demuxlet , and Freemuxlet used the most memory. Solo took the longest time (median 13 h) and most memory to run for the doublet detecting methods but is the only method built to be run directly from the command line, making it easy to implement (Additional file 2 : Fig S3).

Generate pools with known singlets and doublets

However, there is no gold standard to identify which droplets are singlets or doublets. Therefore, in the second phase of our experimental design (Fig. 1 b), we used the PBMC droplets classified as singlets by all methods to generate new pools in silico. We chose to use the PBMC dataset since our first analyses indicated that method performance is similar for homogeneous (fibroblast) and heterogeneous (PBMC) cell types (Fig. 2 and Additional file 2 : Fig S1) and because we had many more individuals available to generate in silico pools from the PBMC dataset (Additional file 1 : Table S1).

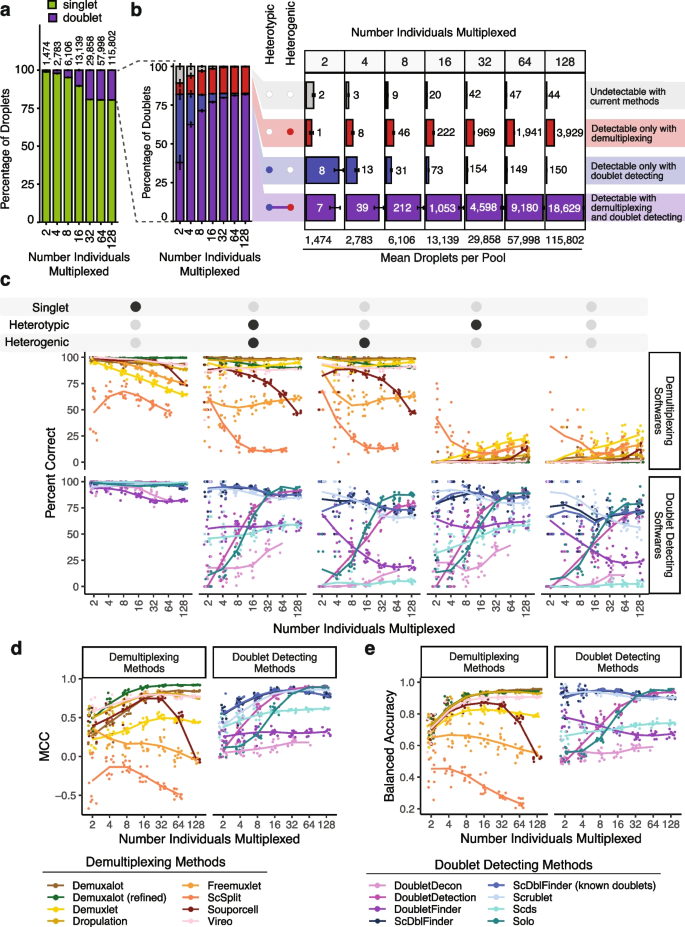

We generated 70 pools—10 each of pools that included 2, 4, 8, 16, 32, 64, or 128 individuals (Additional file 1 : Table S2). We assume a maximum 20% doublet rate as it is unlikely researchers would use a technology that has a higher doublet rate (Fig. 3 a).

In silico Pool Doublet Annotation and Method Performance. a The percent of singlets and doublets in the in -silico pools - separated by the number of multiplexed individuals per pool. b The percentage and number of doublets that are heterogenic (detectable by demultiplexing methods), heterotypic (detectable by doublet detecting methods), both (detectable by either method category) and neither (not detectable with current methods) for each multiplexed pool size. c Percent of droplets that each of the demultiplexing and doublet detecting methods classified correctly for singlets and doublet subtypes for different multiplexed pool sizes. d Matthew’s Correlation Coefficient (MCC) for each of the methods for each of the multiplexed pool sizes. e Balanced accuracy for each of the methods for each of the multiplexed pool sizes

We used azimuth to classify the PBMC cell types for each droplet used to generate the in silico pools [ 19 ] (Additional file 2 : Fig S4). As these pools have been generated in silico using empirical singlets that have been well annotated, we next identified the proportion of doublets in each pool that were heterogenic, heterotypic, both, and neither. This approach demonstrates that a significant percentage of doublets are only detectable by doublet detecting methods (homogenic and heterotypic) for pools with 16 or fewer donors multiplexed (Fig. 3 b).

While the total number of doublets that would be missed if only using demultiplexing methods appears small for fewer multiplexed individuals (Fig. 3 b), it is important to recognise that this is partly a function of the ~732 singlet cells per individual used to generate these pools. Hence, the in silico pools with fewer individuals also have fewer cells. Therefore, to obtain numbers of doublets that are directly comparable to one another, we calculated the number of each doublet type that would be expected to be captured with 20,000 cells when 2, 4, 8, 16, or 32 individuals were multiplexed (Additional file 2 : Fig S5). These results demonstrate that many doublets would be falsely classified as singlets since they are homogenic when just using demultiplexing methods for a pool of 20,000 cells captured with a 16% doublet rate (Additional file 2 : Fig S5). However, as more individuals are multiplexed, the number of droplets that would not be detectable by demultiplexing methods (homogenic) decreases. This suggests that typical workflows that use only one demultiplexing method to remove doublets from pools that capture 20,000 droplets with 16 or fewer multiplexed individuals fail to adequately remove between 173 (16 multiplexed individuals) and 1,325 (2 multiplexed individuals) doublets that are homogenic and heterotypic which could be detected by doublet detecting methods (Additional file 2 : Fig S5). Therefore, a technique that uses both demultiplexing and doublet detecting methods in parallel will complement more complete doublet removal methods. Consequently, we next set out to identify the demultiplexing and doublet detecting methods that perform the best on their own and in concert with other methods.

Doublet and singlet droplet classification effectiveness varies for demultiplexing and doublet detecting methods

Demultiplexing methods fail to classify homogenic doublets.

We next investigated the percentage of the droplets that were correctly classified by each demultiplexing and doublet detecting method. In addition to the seven demultiplexing methods, we also included Demuxalot with the additional steps to refine the genotypes that can then be used for demultiplexing— Demuxalot (refined). Demultiplexing methods correctly classify a large portion of the singlets and heterogenic doublets (Fig. 3 c). This pattern is highly consistent across different cell types, with the notable exceptions being decreased correct classifications for erythrocytes and platelets when greater than 16 individuals are multiplexed (Additional file 2 : Fig S6).

However, Demuxalot consistently demonstrates the highest correct heterogenic doublet classification. Further, the percentage of the heterogenic doublets classified correctly by Souporcell decreases when large numbers of donors are multiplexed. ScSplit is not as effective as the other demultiplexing methods at classifying heterogenic doublets, partly due to the unique doublet classification method, which assumes that the doublets will generate a single cluster separate from the donors (Table 1 ). Importantly, the demultiplexing methods identify almost none of the homogenic doublets for any multiplexed pool size—demonstrating the need to include doublet detecting methods to supplement the demultiplexing method doublet detection.

Doublet detecting method classification performances vary greatly

In addition to assessing each of the methods with default settings, we also evaluated ScDblFinder with ‘known doublets’ provided. This method can take already known doublets and use them when detecting doublets. For these cases, we used the droplets that were classified as doublets by all the demultiplexing methods as ‘known doublets’.

Most of the methods classified a similarly high percentage of singlets correctly, with the exceptions of DoubletDecon and DoubletFinder for all pool sizes (Fig. 3 c). However, unlike the demultiplexing methods, there are explicit cell-type-specific biases for many of the doublet detecting methods (Additional file 2 : Fig S7). These differences are most notable for cell types with fewer cells (i.e. ASDC and cDC2) and proliferating cells (i.e. CD4 Proliferating, CD8 Proliferating, and NK Proliferating). Further, all of the softwares demonstrate high correct percentages for some cell types including CD4 Naïve and CD8 Naïve (Additional file 2 : Fig S7).

As expected, all doublet detecting methods identified heterotypic doublets more effectively than homotypic doublets (Fig. 3 c). However, ScDblFinder and Scrublet classified the most doublets correctly across all doublet types for pools containing 16 individuals or fewer. Solo was more effective at identifying doublets than Scds for pools containing more than 16 individuals. It is also important to note that it was not feasible to run DoubletDecon for the largest pools containing 128 multiplexed individuals and an average of 115,802 droplets (range: 113,594–119,126 droplets). ScDblFinder performed similarly when executed with and without known doublets (Pearson correlation P = 2.5 × 10 -40 ). This suggests that providing known doublets to ScDblFinder does not offer an added benefit.

Performances vary between demultiplexing and doublet detecting method and across the number of multiplexed individuals

We assessed the overall performance of each method with two metrics: the balanced accuracy and the MCC. We chose to use balanced accuracy since, with unbalanced group sizes, it is a better measure of performance than accuracy itself. Further, the MCC has been demonstrated as a more reliable statistical measure of performance since it considers all possible categories—true singlets (true positives), false singlets (false positives), true doublets (true negatives), and false doublets (false negatives). Therefore, a high score on the MCC scale indicates high performance in each metric. However, we provide additional performance metrics for each method (Additional file 1 : Table S3). For demultiplexing methods, both the droplet type (singlet or doublet) and the individual assignment were required to be considered a ‘true singlet’. In contrast, only the droplet type (singlet or doublet) was needed for doublet detection methods.

The MCC and balanced accuracy metrics are similar (Spearman’s ⍴ = 0.87; P < 2.2 × 10 -308 ). Further, the performance of Souporcell decreases for pools with more than 32 individuals multiplexed for both metrics (Student’s t -test for MCC: P < 1.1 × 10 -9 and balanced accuracy: P < 8.1 × 10 -11 ). Scds , ScDblFinder , and Scrublet are among the top-performing doublet detecting methods Fig. 3 d–e).

Overall, between 0.4 and 78.8% of droplets were incorrectly classified by the demultiplexing or doublet detecting methods depending on the technique and the multiplexed pool size (Additional file 2 : Fig S8). Demuxalot (refined) and DoubletDetection demonstrated the lowest percentage of incorrect droplets with about 1% wrong in the smaller pools (two multiplexed individuals) and about 3% incorrect in pools with at least 16 multiplexed individuals. Since some transitional states and cell types are present in low percentages in total cell populations (i.e. ASDCs at 0.02%), incorrect classification of droplets could alter scientific interpretations of the data, and it is, therefore, ideal for decreasing the number of erroneous assignments as much as possible.

False singlets and doublets demonstrate different metrics than correctly classified droplets

We next asked whether specific cell metrics might contribute to false singlet and doublet classifications for different methods. Therefore, we compared the number of genes, number of UMIs, mitochondrial percentage and ribosomal percentage of the false singlets and doublets to equal numbers of correctly classified cells for each demultiplexing and doublet detecting method.

The number of UMIs (Additional file 2 : Fig S9 and Additional file 1 : Table S4) and genes (Additional file 2 : Fig S10 and Additional file 1 : Table S5) demonstrated very similar distributions for all comparisons and all methods (Spearman ⍴ = 0.99, P < 2.2 × 10 -308 ). The number of UMIs and genes were consistently higher in false singlets and lower in false doublets for most demultiplexing methods except some smaller pool sizes (Additional file 2 : Fig S9a and Additional file 2 : Fig S10a; Additional file 1 : Table S4 and Additional file 1 : Table S5). The number of UMIs and genes was consistently higher in droplets falsely classified as singlets by the doublet detecting methods than the correctly identified droplets (Additional file 2 : Fig S9b and Additional file 2 : Fig S10b; Additional file 1 : Table S4 and Additional file 1 : Table S5). However, there was less consistency in the number of UMIs and genes detected in false singlets than correctly classified droplets between the different doublet detecting methods (Additional file 2 : Fig S9b and Additional file 2 : Fig S10b; Additional file 1 : Table S4 and Additional file 1 : Table S5).

The ribosomal percentage of the droplets falsely classified as singlets or doublets is similar to the correctly classified droplets for most methods—although they are statistically different for larger pool sizes (Additional file 2 : Fig S11a and Additional file 1 : Table S6). However, the false doublets classified by some demultiplexing methods ( Demuxalot , Demuxalot (refined), Demuxlet , ScSplit , Souporcell , and Vireo ) demonstrated higher ribosomal percentages. Some doublet detecting methods ( ScDblFinder , ScDblFinder with known doublets and Solo) demonstrated higher ribosomal percentages for the false doublets while other demonstrated lower ribosomal percentages ( DoubletDecon , DoubletDetection , and DoubletFinder ; Additional file 2 : Fig S11b and Additional file 1 : Table S6).

Like the ribosomal percentage, the mitochondrial percentage in false singlets is also relatively similar to correctly classified droplets for both demultiplexing (Additional file 2 : Fig S12a and Additional file 1 : Table S7) and doublet detecting methods (Additional file 2 : Fig S12b). The mitochondrial percentage for false doublets is statistically lower than the correctly classified droplets for a few larger pools for Freemuxlet , ScSplit , and Souporcell . The doublet detecting method Solo also demonstrates a small but significant decrease in mitochondrial percentage in the false doublets compared to the correctly annotated droplets. However, other doublet detecting methods including DoubletFinder and the larger pools of most other methods demonstrated a significant increase in mitochondrial percent in the false doublets compared to the correctly annotated droplets (Additional file 2 : Fig S12b).

Overall, these results demonstrate a strong relationship between the number of genes and UMIs and limited influence of ribosomal or mitochondrial percentage in a droplet and false classification, suggesting that the number of genes and UMIs can significantly bias singlet and doublet classification by demultiplexing and doublet detecting methods.

Ambient RNA, number of reads per cell, and uneven pooling impact method performance

To further quantify the variables that impact the performance of each method, we simulated four conditions that could occur with single-cell RNA-seq experiments: (1) decreased number of reads (reduced 50%), (2) increased ambient RNA (10%, 20% and 50%), (3) increased mitochondrial RNA (5%, 10% and 25%) and 4) uneven donor pooling from single donor spiking (0.5 or 0.75 proportion of pool from one donor). We chose these scenarios because they are common technical effects that can occur.

We observed a consistent decrease in the demultiplexing method performance when the number of reads were decreased by 50% but the degree of the effect varied for each method and was larger in pools containing more multiplexed donors (Additional file 2 : Fig S13a and Additional file 1 : Table S8). Decreasing the number of reads did not have a detectable impact on the performance of the doublet detecting methods.

Simulating additional ambient RNA (10%, 20%, or 50%) decreased the performance of all the demultiplexing methods (Additional file 2 : Fig S13b and Additional file 1 : Table S9) but some were unimpacted in pools that had 16 or fewer individuals multiplexed ( Souporcell and Vireo ). The performance of some of the doublet detecting methods were impacted by the ambient RNA but the performance of most methods did not decrease. Scrublet and ScDblFinder were the doublet detecting methods most impacted by ambient RNA but only in pools with at least 32 multiplexed donors (Additional file 2 : Fig S13b and Additional file 1 : Table S9).

Increased mitochondrial percent did not impact the performance of demultiplexing or doublet detecting methods (Additional file 2 : Fig S13c and Additional file 1 : Table S10).

We also tested whether experimental designs that pooling uneven proportions of donors would alter performance. We tested scenarios where either half the pool was composed of a single donor (0.5 spiked donor proportion) or where three quarters of the pool was composed of a single donor. This experimental design significantly reduced the demultiplexing method performance (Additional file 2 : Fig S13d and Additional file 1 : Table S11) with the smallest influence on Freemuxlet . The performance of most of the doublet detecting methods were unimpacted except for DoubletDetection that demonstrated significant decreases in performance in pools where at least 16 donors were multiplexed. Intriguingly, the performance of Solo increased with the spiked donor pools when the pools consisted of 16 donors or less.

Our results demonstrate significant differences in overall performance between different demultiplexing and doublet detecting methods. We further noticed some differences in the use of the methods. Therefore, we have accumulated these results and each method’s unique characteristics and benefits in a heatmap for visual interpretation (Fig. 4 ).

Assessment of each of the demultiplexing and doublet detecting methods. Assessments of a variety of metrics for each of the demultiplexing (top) and doublet detecting (bottom) methods

Framework for improving singlet classifications via method combinations

After identifying the demultiplexing and doublet detecting methods that performed well individually, we next sought to test whether using intersectional combinations of multiple methods would enhance droplet classifications and provide a software platform— Demuxafy —capable of supporting the execution of these intersectional combinations.

We recognise that different experimental designs will be required for each project. As such, we considered this when testing combinations of methods. We considered multiple experiment designs and two different intersectional methods: (1) more than half had to classify a droplet as a singlet to be called a singlet and (2) at least half of the methods had to classify a droplet as a singlet to be called a singlet. Significantly, these two intersectional methods only differ when an even number of methods are being considered. For combinations that include demultiplexing methods, the individual called by the majority of the methods is the individual used for that droplet. When ties occur, the individual is considered ‘unassigned’.

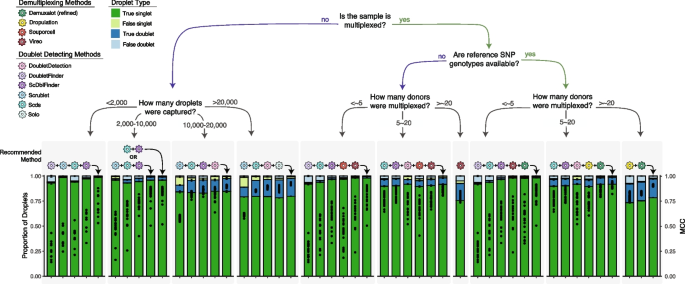

Combining multiple doublet detecting methods improve doublet removal for non-multiplexed experimental designs

For the non-multiplexed experimental design, we considered all possible method combinations (Additional file 1 : Table S12). We identified important differences depending on the number of droplets captured and have provided recommendations accordingly. We identified that DoubletFinder , Scrublet , ScDblFinder and Scds is the ideal combination for balanced droplet calling when less than 2,000 droplets are captured. Scds and ScDblFinder or Scrublet , Scds and ScDblFinder is the best combination when 2,000–10,000 droplets are captured. Scds , Scrublet, ScDblFinder and DoubletDetection is the best combination when 10,000–20,000 droplets are captured and Scrublet , Scds , DoubletDetection and ScDblFinder . It is important to note that even a slight increase in the MCC significantly impacts the number of true singlets and true doublets classified with the degree of benefit highly dependent on the original method performance. The combined method increases the MCC compared to individual doublet detecting methods on average by 0.11 and up to 0.33—a significant improvement in the MCC ( t -test FDR < 0.05 for 95% of comparisons). For all combinations, the intersectional droplet method requires more than half of the methods to consider the droplet a singlet to classify it as a singlet (Fig. 5 ).

Recommended Method Combinations Dependent on Experimental Design. Method combinations are provided for different experimental designs, including those that are not multiplexed (left) and multiplexed (right), including experiments that have reference SNP genotypes available vs those that do not and finally, multiplexed experiments with different numbers of individuals multiplexed. The each bar represents either a single method (shown with the coloured icon above the bar) or a combination of methods (shown with the addition of the methods and an arrow indicating the bar). The proportion of true singlets, true doublets, false singlets and false doublets for each method or combination of methods is shown with the filled barplot and the MCC is shown with the black points overlaid on the barplot. MCC: Matthew’s Correlation Coefficient

Demuxafy performs better than Chord

Chord is an ensemble machine learning doublet detecting method that uses Scds and DoubletFinder to identify doublets. We compared Demuxafy using Scds and DoubletFinder to Chord and identified that Demuxafy outperformed Chord in pools that contained at least eight donors and was equivalent in pools that contained less than eight donors (Additional file 2 : Fig S14). This is because Chord classifies more droplets as false singlets and false doublets than Demuxafy . In addition, Chord failed to complete for two of the pools that contained 128 multiplexed donors.

Combining multiple demultiplexing and doublet detecting methods improve doublet removal for multiplexed experimental designs

For experiments where 16 or fewer individuals are multiplexed with reference SNP genotypes available, we considered all possible combinations between the demultiplexing and doublet detecting methods except ScDblFinder with known doublets due to its highly similar performance to ScDblFinder (Fig 3 ; Additional file 1 : Table S13). The best combinations are DoubletFinder , Scds , ScDblFinder , Vireo and Demuxalot (refined) (<~5 donors) and Scrublet , ScDblFinder , DoubletDetection , Dropulation and Demuxalot (refined) (Fig. 5 ). These intersectional methods increase the MCC compared to the individual methods ( t -test FDR < 0.05), generally resulting in increased true singlets and doublets compared to the individual methods. The improvement in MCC depends on every single method’s performance but, on average, increases by 0.22 and up to 0.71. For experiments where the reference SNP genotypes are unknown, the individuals multiplexed in the pool with 16 or fewer individuals multiplexed, DoubletFinder , ScDblFinder, Souporcell and Vireo (<~5 donors) and Scds , ScDblFinder , DoubletDetection , Souporcell and Vireo are the ideal methods (Fig. 5 ). These intersectional methods again significantly increase the MCC up to 0.87 compared to any of the individual techniques that could be used for this experimental design ( t -test FDR < 0.05 for 94.2% of comparisons). In both cases, singlets should only be called if more than half of the methods in the combination classify the droplet as a singlet.

Combining multiple demultiplexing methods improves doublet removal for large multiplexed experimental designs

For experiments that multiplex more than 16 individuals, we considered the combinations between all demultiplexing methods (Additional file 1 : Table S14) since only a small proportion of the doublets would be undetectable by demultiplexing methods (droplets that are homogenic; Fig 3 b). To balance doublet removal and maintain true singlets, we recommend the combination of Demuxalot (refined) and Dropulation . These method combinations significantly increase the MCC by, on average, 0.09 compared to all the individual methods ( t -test FDR < 0.05). This substantially increases true singlets and true doublets relative to the individual methods. If reference SNP genotypes are not available for the individuals multiplexed in the pools, Vireo performs the best (≥ 16 multiplexed individuals; Fig. 5 ). This is the only scenario in which executing a single method is advantageous to a combination of methods. This is likely due to the fact that most of the methods perform poorly for larger pool sizes (Fig. 3 c).

These results collectively demonstrate that, regardless of the experimental design, demultiplexing and doublet detecting approaches that intersect multiple methods significantly enhance droplet classification. This is consistent across different pool sizes and will improve singlet annotation.

Demuxafy improves doublet removal and improves usability

To make our intersectional approaches accessible to other researchers, we have developed Demuxafy ( https://demultiplexing-doublet-detecting-docs.readthedocs.io/en/latest/index.html ) - an easy-to-use software platform powered by Singularity. This platform provides the requirements and instructions to execute each demultiplexing and doublet detecting methods. In addition, Demuxafy provides wrapper scripts that simplify method execution and effectively summarise results. We also offer tools that help estimate expected numbers of doublets and provide method combination recommendations based on scRNA-seq pool characteristics. Demuxafy also combines the results from multiple different methods, provides classification combination summaries, and provides final integrated combination classifications based on the intersectional techniques selected by the user. The significant advantages of Demuxafy include a centralised location to execute each of these methods, simplified ways to combine methods with an intersectional approach, and summary tables and figures that enable practical interpretation of multiplexed datasets (Fig. 1 a).

Demultiplexing and doublet detecting methods have made large-scale scRNA-seq experiments achievable. However, many demultiplexing and doublet detecting methods have been developed in the recent past, and it is unclear how their performances compare. Further, the demultiplexing techniques best detect heterogenic doublets while doublet detecting methods identify heterotypic doublets. Therefore, we hypothesised that demultiplexing and doublet detecting methods would be complementary and be more effective at removing doublets than demultiplexing methods alone.

Indeed, we demonstrated the benefit of utilising a combination of demultiplexing and doublet detecting methods. The optimal intersectional combination of methods depends on the experimental design and capture characteristics. Our results suggest super loaded captures—where a high percentage of doublets is expected—will benefit from multiplexing. Further, when many donors are multiplexed (>16), doublet detecting is not required as there are few doublets that are homogenic and heterotypic.

We have provided different method combination recommendations based on the experimental design. This decision is highly dependent on the research question.

Conclusions

Overall, our results provide researchers with important demultiplexing and doublet detecting performance assessments and combinatorial recommendations. Our software platform, Demuxafy ( https://demultiplexing-doublet-detecting-docs.readthedocs.io/en/latest/index.html ), provides a simple implementation of our methods in any research lab around the world, providing cleaner scRNA-seq datasets and enhancing interpretation of results.

PBMC scRNA-seq data

Blood samples were collected and processed as described previously [ 17 ]. Briefly, mononuclear cells were isolated from whole blood samples and stored in liquid nitrogen until thawed for scRNA-seq capture. Equal numbers of cells from 12 to 16 samples were multiplexed per pool and single-cell suspensions were super loaded on a Chromium Single Cell Chip A (10x Genomics) to capture 20,000 droplets per pool. Single-cell libraries were processed per manufacturer instructions and the 10× Genomics Cell Ranger Single Cell Software Suite (v 2.2.0) was used to process the data and map it to GRCh38. Cellbender v0.1.0 was used to identify empty droplets. Almost all droplets reported by Cell Ranger were identified to contain cells by Cellbender (mean: 99.97%). The quality control metrics of each pool are demonstrated in Additional file 2 : Fig S15.

PBMC DNA SNP genotyping

SNP genotype data were prepared as described previously [ 17 ]. Briefly, DNA was extracted from blood with the QIAamp Blood Mini kit and genotyped on the Illumina Infinium Global Screening Array. SNP genotypes were processed with Plink and GCTA before imputing on the Michigan Imputation Server using Eagle v2.3 for phasing and Minimac3 for imputation based on the Haplotype Reference Consortium panel (HRCr1.1). SNP genotypes were then lifted to hg38 and filtered for > 1% minor allele frequency (MAF) and an R 2 > 0.3.

Fibroblast scRNA-seq data

The fibroblast scRNA-seq data has been described previously [ 18 ]. Briefly, human skin punch biopsies from donors over the age of 18 were cultured in DMEM high glucose supplemented with 10% fetal bovine serum (FBS), L-glutamine, 100 U/mL penicillin and 100 μg/mL (Thermo Fisher Scientific, USA).

For scRNA-seq, viable cells were flow sorted and single cell suspensions were loaded onto a 10× Genomics Single Cell 3’ Chip and were processed per 10× instructions and the Cell Ranger Single Cell Software Suite from 10× Genomics was used to process the sequencing data into transcript count tables as previously described [ 18 ]. Cellbender v0.1.0 was used to identify empty droplets. Almost all droplets reported by Cell Ranger were identified to contain cells by Cellbender (mean: 99.65%). The quality control metrics of each pool are demonstrated in Additional file 2 : Fig S16.

Fibroblast DNA SNP genotyping

The DNA SNP genotyping for fibroblast samples has been described previously [ 18 ]. Briefly, DNA from each donor was genotyped on an Infinium HumanCore-24 v1.1 BeadChip (Illumina). GenomeStudioTM V2.0 (Illumina), Plink and GenomeStudio were used to process the SNP genotypes. Eagle V2.3.5 was used to phase the SNPs and it was imputed with the Michigan Imputation server using minimac3 and the 1000 genome phase 3 reference panel as described previously [ 18 ].

Demultiplexing methods

All the demultiplexing methods were built and run from a singularity image.

Demuxalot [ 6 ] is a genotype reference-based single cell demultiplexing method. Demualot v0.2.0 was used in python v3.8.5 to annotate droplets. The likelihoods, posterior probabilities and most likely donor for each droplet were estimated using the Demuxalot Demultiplexer.predict_posteriors function. We also used Demuxalot Demultiplexer.learn_genotypes function to refine the genotypes before estimating the likelihoods, posterior probabilities and likely donor of each droplet with the refined genotypes as well.

The Popscle v0.1-beta suite [ 16 ] for population genomics in single cell data was used for Demuxlet and Freemuxlet demultiplexing methods. The popscle dsc-pileup function was used to create a pileup of variant calls at known genomic locations from aligned sequence reads in each droplet with default arguments.

Demuxlet [ 3 ] is a SNP genotype reference-based single cell demultiplexing method. Demuxlet was run with a genotype error coefficient of 1 and genotype error offset rate of 0.05 and the other default parameters using the popscle demuxlet command from Popscle (v0.1-beta).

Freemuxlet [ 16 ] is a SNP genotype reference-free single cell demultiplexing method. Freemuxlet was run with default parameters including the number of samples included in the pool using the popscle freemuxlet command from Popscle (v0.1-beta).

Dropulation

Dropulation [ 5 ] is a SNP genotype reference-based single cell demultiplexing method that is part of the Drop-seq software. Dropulation from Drop-seq v2.5.1 was implemented for this manuscript. In addition, the method for calling singlets and doublets was provided by the Dropulation developer and implemented in a custom R script available on Github and Zenodo (see “Availability of data and materials”).

ScSplit v1.0.7 [ 7 ] was downloaded from the ScSplit github and the recommended steps for data filtering quality control prior to running ScSplit were followed. Briefly, reads that had read quality lower than 10, were unmapped, were secondary alignments, did not pass filters, were optical PCR duplicates or were duplicate reads were removed. The resulting bam file was then sorted and indexed followed by freebayes to identify single nucleotide variants (SNVs) in the dataset. The resulting SNVs were filtered for quality scores greater than 30 and for variants present in the reference SNP genotype vcf. The resulting filtered bam and vcf files were used as input for the s cSplit count command with default settings to count the number of reference and alternative alleles in each droplet. Next the allele matrices were used to demultiplex the pool and assign cells to different clusters using the scSplit run command including the number of individuals ( -n ) option and all other options set to default. Finally, the individual genotypes were predicted for each cluster using the scSplit genotype command with default parameters.

Souporcell [ 4 ] is a SNP genotype reference-free single cell demultiplexing method. The Souporcell v1.0 singularity image was downloaded via instructions from the gihtub page. The Souporcell pipeline was run using the souporcell_pipeline.py script with default options and the option to include known variant locations ( --common_variants ).

Vireo [ 2 ] is a single cell demultiplexing method that can be used with reference SNP genotypes or without them. For this assessment, Vireo was used with reference SNP genotypes. Per Vireo recommendations, we used model 1 of the cellSNP [ 20 ] version 0.3.2 to make a pileup of SNPs for each droplet with the recommended options using the genotyped reference genotype file as the list of common known SNP and filtered with SNP locations that were covered by at least 20 UMIs and had at least 10% minor allele frequency across all droplets. Vireo version 0.4.2 was then used to demultiplex using reference SNP genotypes and indicating the number of individuals in the pools.

Doublet detecting methods

All doublet detecting methods were built and run from a singularity image.

DoubletDecon

DoubletDecon [ 9 ] is a transcription-based deconvolution method for identifying doublets. DoubletDecon version 1.1.6 analysis was run in R version 3.6.3. SCTransform [ 21 ] from Seurat [ 22 ] version 3.2.2 was used to preprocess the scRNA-seq data and then the Improved_Seurat_Pre_Process function was used to process the SCTransformed scRNA-seq data. Clusters were identified using Seurat function FindClusters with resolution 0.2 and 30 principal components (PCs). Then the Main_Doublet_Decon function was used to deconvolute doublets from singlets for six different rhops—0.6, 0.7, 0.8, 0.9, 1.0 and 1.1. We used a range of rhop values since the doublet annotation by DoubletDecon is dependent on the rhop parameter which is selected by the user. The rhop that resulted in the closest number of doublets to the expected number of doublets was selected on a per-pool basis and used for all subsequent analysis. Expected number of doublets were estimated with the following equation:

where N is the number of droplets captured and D is the number of expected doublets.

DoubletDetection

DoubletDetection [ 14 ] is a transcription-based method for identifying doublets. DoubletDetection version 2.5.2 analysis was run in python version 3.6.8. Droplets without any UMIs were removed before analysis with DoubletDetection . Then the doubletdetection.BoostClassifier function was run with 50 iterations with use_phenograph set to False and standard_scaling set to True. The predicted number of doublets per iteration was visualised across all iterations and any pool that did not converge after 50 iterations, it was run again with increasing numbers of iterations until they reached convergence.

DoubletFinder

DoubletFinder [ 10 ] is a transcription-based doublet detecting method. DoubletFinder version 2.0.3 was implemented in R version 3.6.3. First, droplets that were more than 3 median absolute deviations (mad) away from the median for mitochondrial per cent, ribosomal per cent, number of UMIs or number of genes were removed per developer recommendations. Then the data was normalised with SCTransform followed by cluster identification using FindClusters with resolution 0.3 and 30 principal components (PCs). Then, pKs were selected by the pK that resulted in the largest BC MVN as recommended by DoubletFinder. The pK vs BC MVN relationship was visually inspected for each pool to ensure an effective BC MVN was selected for each pool. Finally, the homotypic doublet proportions were calculated and the number of expected doublets with the highest doublet proportion were classified as doublets per the following equation:

ScDblFinder

ScDblFinder [ 11 ] is a transcription-based method for detecting doublets from scRNA-seq data. ScDblFinder 1.3.25 was implemented in R version 4.0.3. ScDblFinder was implemented with two sets of options. The first included implementation with the expected doublet rate as calculated by:

where N is the number of droplets captured and R is the expected doublet rate. The second condition included the same expected number of doublets and included the doublets that had already been identified by all the demultiplexing methods.

Scds [ 12 ] is a transcription-based doublet detecting method. Scds version 1.1.2 analysis was completed in R version 3.6.3. Scds was implemented with the cxds function and bcds functions with default options followed by the cxds_bcds_hybrid with estNdbl set to TRUE so that doublets will be estimated based on the values from the cxds and bcds functions.

Scrublet [ 13 ] is a transcription-based doublet detecting method for single-cell RNA-seq data. Scrublet was implemented in python version 3.6.3. Scrublet was implemented per developer recommendations with at least 3 counts per droplet, 3 cells expressing a given gene, 30 PCs and a doublet rate based on the following equation:

where N is the number of droplets captured and R is the expected doublet rate. Four different minimum number of variable gene percentiles: 80, 85, 90 and 95. Then, the best variable gene percentile was selected based on the distribution of the simulated doublet scores and the location of the doublet threshold selection. In the case that the selected threshold does not fall between a bimodal distribution, those pools were run again with a manual threshold set.

Solo [ 15 ] is a transcription-based method for detecting doublets in scRNA-seq data. Solo was implemented with default parameters and an expected number of doublets based on the following equation:

where N is the number of droplets captured and D is the number of expected doublets. Solo was additionally implemented in a second run for each pool with the doublets that were identified by all the demultiplexing methods as known doublets to initialize the model.

In silico pool generation

Cells that were identified as singlets by all methods were used to simulate pools. Ten pools containing 2, 4, 8, 16, 32, 64 and 128 individuals were simulated assuming a maximum 20% doublet rate as it is unlikely researchers would use a technology that has a higher doublet rate. The donors for each simulated pool were randomly selected using a custom R script which is available on Github and Zenodo (see ‘Availability of data and materials’). A separate bam for the cell barcodes for each donor was generated using the filterbarcodes function from the sinto package (v0.8.4). Then, the GenerateSyntheticDoublets function provided by the Drop-seq [ 5 ] package was used to simulate new pools containing droplets with known singlets and doublets.

Twenty-one total pools—three pools from each of the different simulated pool sizes (2, 4, 8, 16, 32, 64 and 128 individuals) —were used to simulate different experimental scenarios that may be more challenging for demultiplexing and doublet detecting methods. These include simulating higher ambient RNA, higher mitochondrial percent, decreased read coverage and imbalanced donor proportions as described subsequently.

High ambient RNA simulations