The Journal of Artificial Intelligence Research (JAIR) is dedicated to the rapid dissemination of important research results to the global artificial intelligence (AI) community. The journal’s scope encompasses all areas of AI, including agents and multi-agent systems, automated reasoning, constraint processing and search, knowledge representation, machine learning, natural language, planning and scheduling, robotics and vision, and uncertainty in AI.

Current Issue

Vol. 79 (2024)

Published: 2024-01-10

- Bt-GAN: Generating Fair Synthetic Healthdata via Bias-transforming Generative Adversarial Networks

- Collision Avoiding Max-Sum for Mobile Sensor Teams

- USN: A Robust Imitation Learning Method against Diverse Action Noise

- Structure in Deep Reinforcement Learning: A Survey and Open Problems

- A Map of Diverse Synthetic Stable Matching Instances

- DIGCN: A Dynamic Interaction Graph Convolutional Network Based on Learnable Proposals for Object Detection

- Iterative Train Scheduling under Disruption with Maximum Satisfiability

- Removing Bias and Incentivizing Precision in Peer-Grading

- Cultural Bias in Explainable AI Research: A Systematic Analysis

- Learning to Resolve Social Dilemmas: A Survey

- A Principled Distributional Approach to Trajectory Similarity Measurement and Its Application to Anomaly Detection

- Multi-Modal Attentive Prompt Learning for Few-Shot Emotion Recognition in Conversations

- CONDENSE: Conditional Density Estimation for Time Series Anomaly Detection

- Performative Ethics from within the Ivory Tower: How CS Practitioners Uphold Systems of Oppression

- Learning Logic Specifications for Policy Guidance in POMDPs: An Inductive Logic Programming Approach

- Multi-Objective Reinforcement Learning Based on Decomposition: A Taxonomy and Framework

- Can Fairness Be Automated? Guidelines and Opportunities for Fairness-Aware AutoML

- Practical and Parallelizable Algorithms for Non-Monotone Submodular Maximization with Size Constraint

- Exploring the Tradeoff between System Profit and Income Equality among Ride-Hailing Drivers

- On Mitigating the Utility-Loss in Differentially Private Learning: A New Perspective by a Geometrically Inspired Kernel Approach

- An Algorithm with Improved Complexity for Pebble Motion/Multi-Agent Path Finding on Trees

- Weighted, Circular and Semi-Algebraic Proofs

- Reinforcement Learning for Generative AI: State of the Art, Opportunities and Open Research Challenges

- Human-in-the-Loop Reinforcement Learning: A Survey and Position on Requirements, Challenges, and Opportunities

- Boolean Observation Games

- Detecting Change Intervals with Isolation Distributional Kernel

- Query-Driven Qualitative Constraint Acquisition

- Visually Grounded Language Learning: A Review of Language Games, Datasets, Tasks, and Models

- Right Place, Right Time: Proactive Multi-Robot Task Allocation under Spatiotemporal Uncertainty

- Principles and Their Computational Consequences for Argumentation Frameworks with Collective Attacks

- The AI Race: Why Current Neural Network-Based Architectures Are a Poor Basis for Artificial General Intelligence

- Undesirable Biases in NLP: Addressing Challenges of Measurement


NATURE INDEX

12 October 2022

Growth in AI and robotics research accelerates

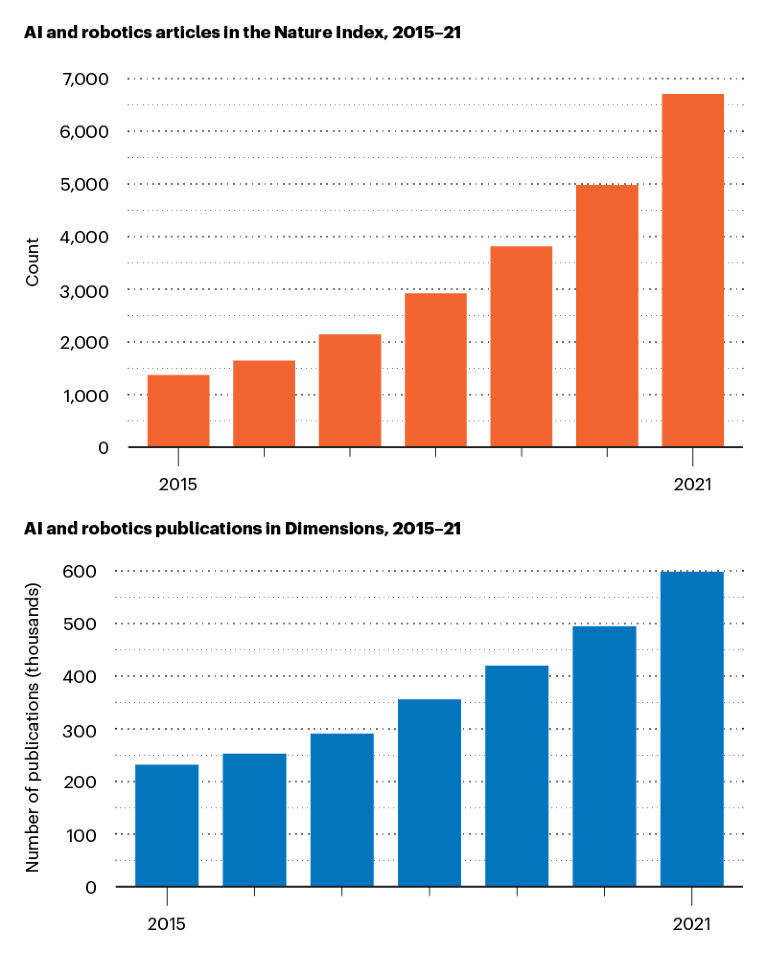

It may not be unusual for burgeoning areas of science, especially those tied to rapid technological change in society, to take off quickly, but even by these standards the rise of artificial intelligence (AI) has been impressive. Together with robotics, AI represents an increasingly significant share of research output at various levels, as these charts show.

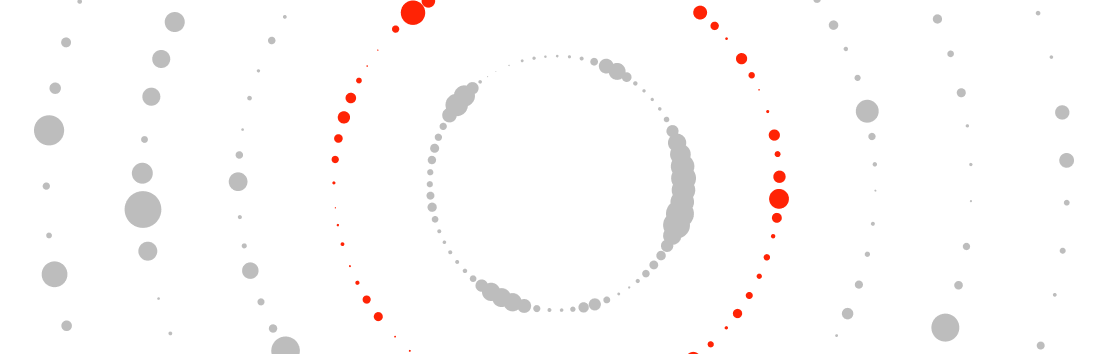

Across the field

The number of AI and robotics papers published in the 82 high-quality science journals tracked by the Nature Index (measured by Count) has risen year on year, so rapidly that the curve resembles exponential growth. A similar increase is happening more generally in journals and proceedings outside the Nature Index, as data from the Dimensions database of research publications show.

Source: Nature Index, Dimensions. Data analysis by Catherine Cheung; infographic by Simon Baker, Tanner Maxwell and Benjamin Plackett

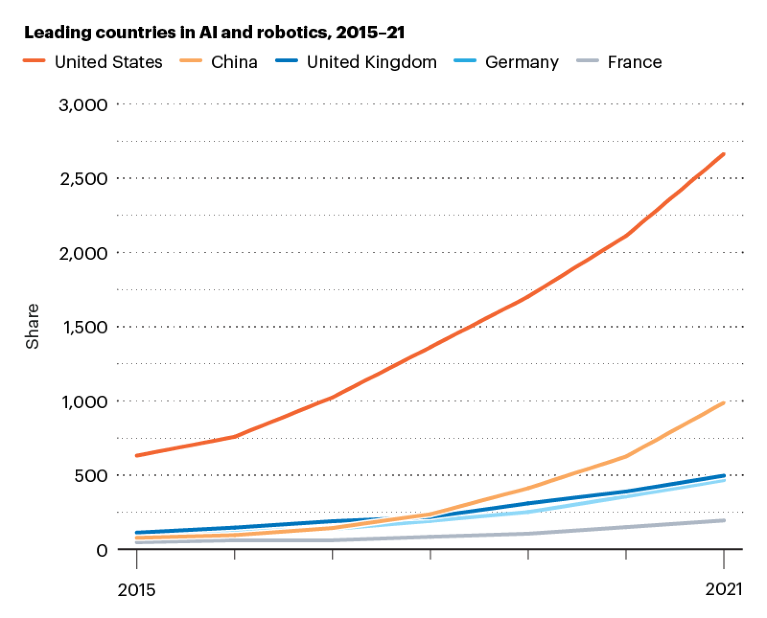

Leading countries

Five countries — the United States, China, the United Kingdom, Germany and France — had the highest AI and robotics Share in the Nature Index from 2015 to 2021, with the United States leading the pack. China has seen the largest percentage change (1,174%) in annual Share over the period among the five nations.
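A percentage change of 1,174% means growth to about 12.74 times the starting value, not 11.74 times, because the percentage is measured relative to the starting value. The minimal Python sketch below applies the standard percent-change formula, (new - old) / old × 100, to illustrate this. The function names are hypothetical, and because the underlying Share values are not given here, the example assumes an arbitrary baseline of 1.0.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from `old` to `new`, expressed in percent."""
    if old == 0:
        raise ValueError("percent change is undefined when the starting value is 0")
    return (new - old) / old * 100.0


def growth_multiple(pct: float) -> float:
    """Convert a percent change into a final/initial ratio."""
    return 1.0 + pct / 100.0


# A 1,174% increase: the final annual Share is about 12.74x the initial one.
multiple = growth_multiple(1174)
print(f"{multiple:.2f}")  # prints 12.74

# Round trip from an assumed (hypothetical) baseline of 1.0 recovers the percentage.
print(round(percent_change(1.0, multiple), 6))  # prints 1174.0
```

The same helper works for any pair of Share values; only the ratio between them matters, so the choice of baseline does not affect the result.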

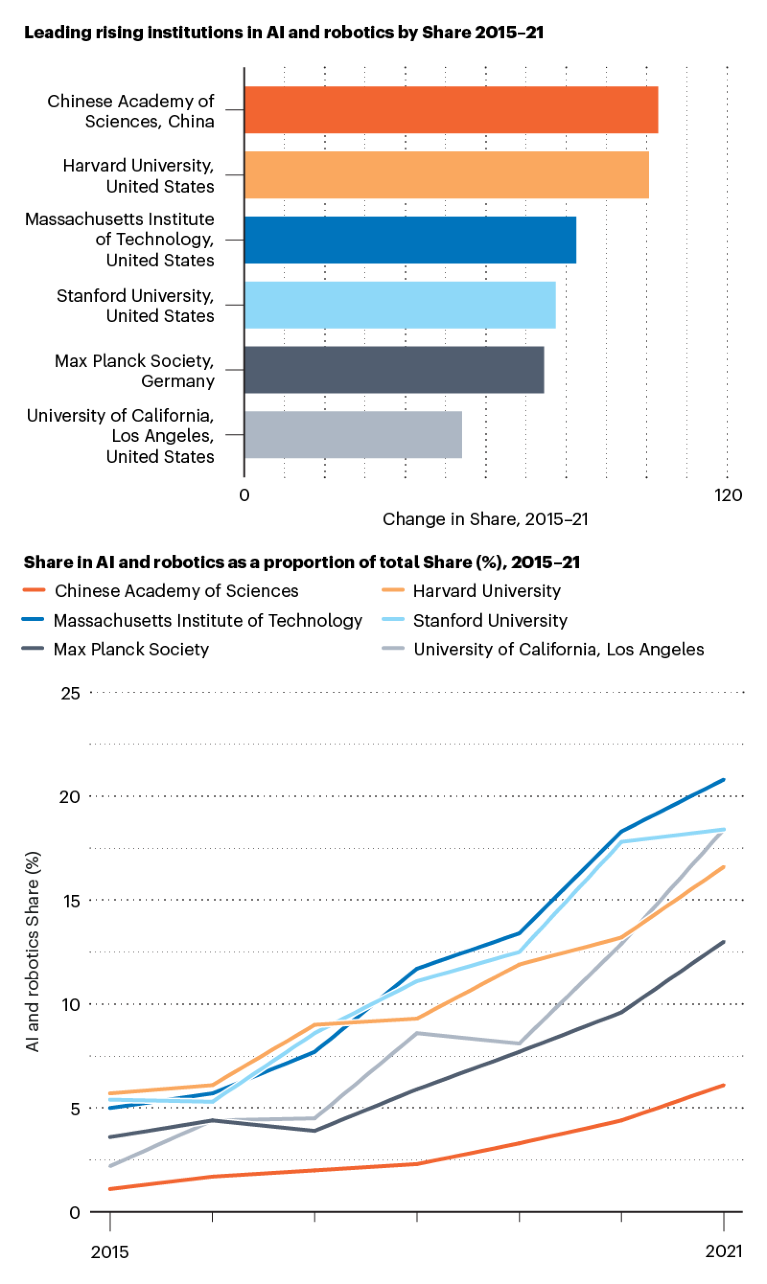

AI and robotics infiltration

As the field of AI and robotics research grows in its own right, leading institutions such as Harvard University in the United States have increased their Share in this area since 2015. But such leading institutions have also seen an expansion in the proportion of their overall index Share represented by research in AI and robotics. One possible explanation for this is that AI and robotics is expanding into other fields, creating interdisciplinary AI and robotics research.

Nature 610, S9 (2022)

doi: https://doi.org/10.1038/d41586-022-03210-9

This article is part of Nature Index 2022 AI and robotics, an editorially independent supplement. Advertisers have no influence over the content.


THE AI INDEX REPORT

Measuring trends in AI


Welcome to the 2024 AI Index Report

Welcome to the seventh edition of the AI Index report. The 2024 Index is our most comprehensive to date and arrives at an important moment when AI’s influence on society has never been more pronounced. This year, we have broadened our scope to more extensively cover essential trends such as technical advancements in AI, public perceptions of the technology, and the geopolitical dynamics surrounding its development. Featuring more original data than ever before, this edition introduces new estimates on AI training costs, detailed analyses of the responsible AI landscape, and an entirely new chapter dedicated to AI’s impact on science and medicine. The AI Index report tracks, collates, distills, and visualizes data related to artificial intelligence (AI). Our mission is to provide unbiased, rigorously vetted, broadly sourced data in order for policymakers, researchers, executives, journalists, and the general public to develop a more thorough and nuanced understanding of the complex field of AI.

TOP TAKEAWAYS

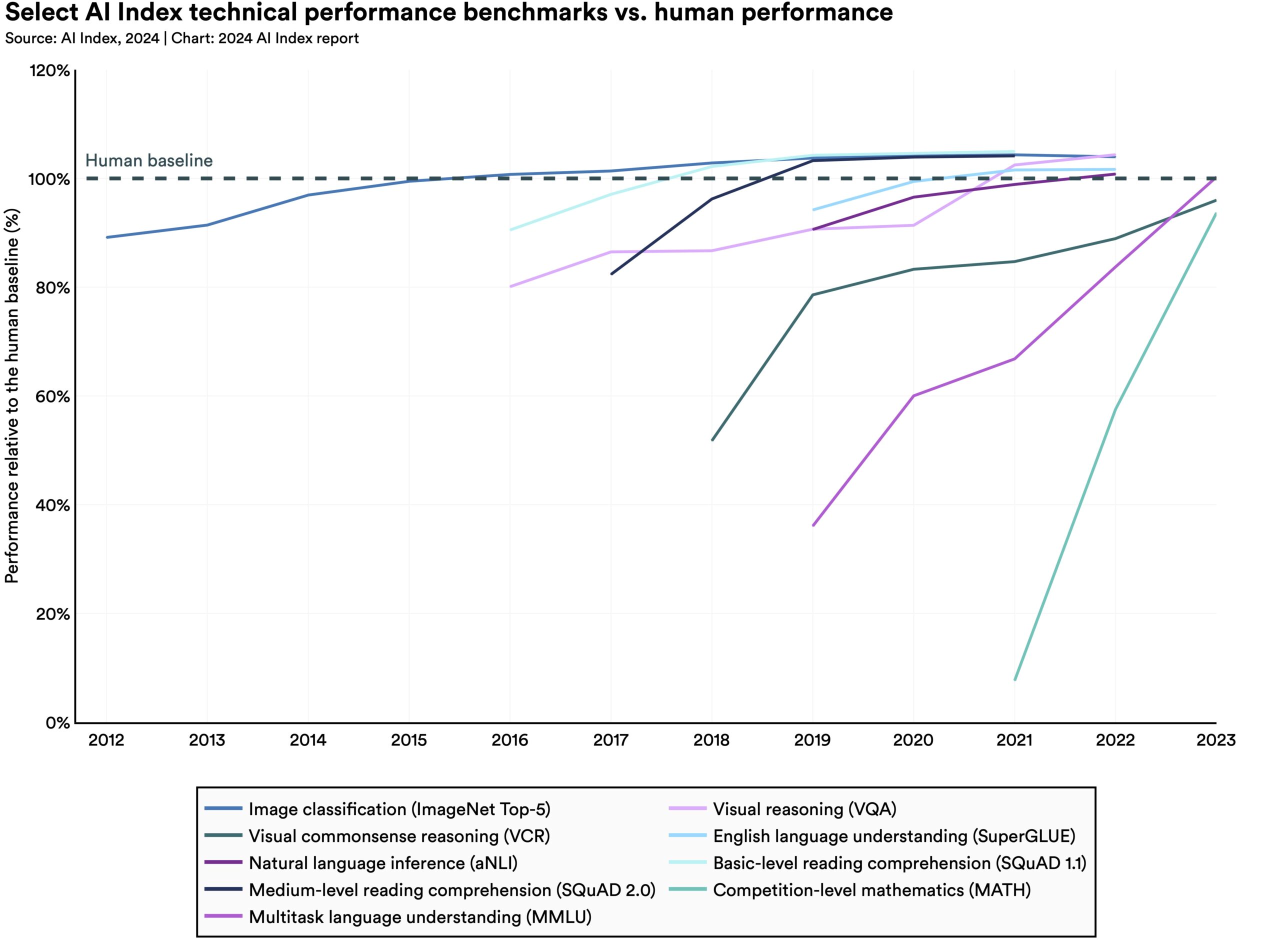

1. AI beats humans on some tasks, but not on all.

AI has surpassed human performance on several benchmarks, including some in image classification, visual reasoning, and English understanding. Yet it trails behind on more complex tasks like competition-level mathematics, visual commonsense reasoning and planning.

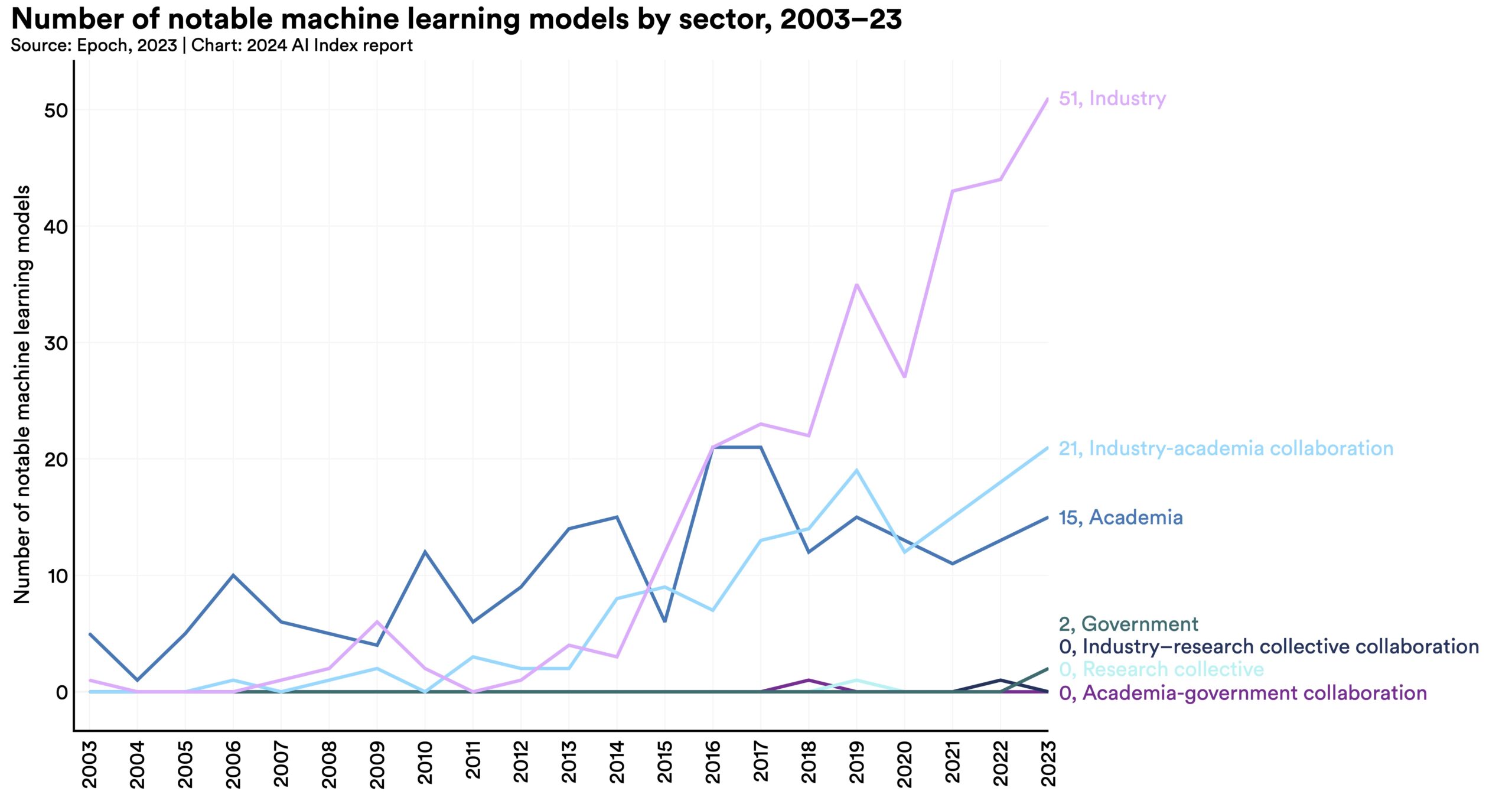

2. Industry continues to dominate frontier AI research.

In 2023, industry produced 51 notable machine learning models, while academia contributed only 15. There were also 21 notable models resulting from industry-academia collaborations in 2023, a new high.

3. Frontier models get way more expensive.

According to AI Index estimates, the training costs of state-of-the-art AI models have reached unprecedented levels. For example, OpenAI’s GPT-4 used an estimated $78 million worth of compute to train, while Google’s Gemini Ultra cost $191 million for compute.

4. The United States leads China, the EU, and the U.K. as the leading source of top AI models.

In 2023, 61 notable AI models originated from U.S.-based institutions, far outpacing the European Union’s 21 and China’s 15.

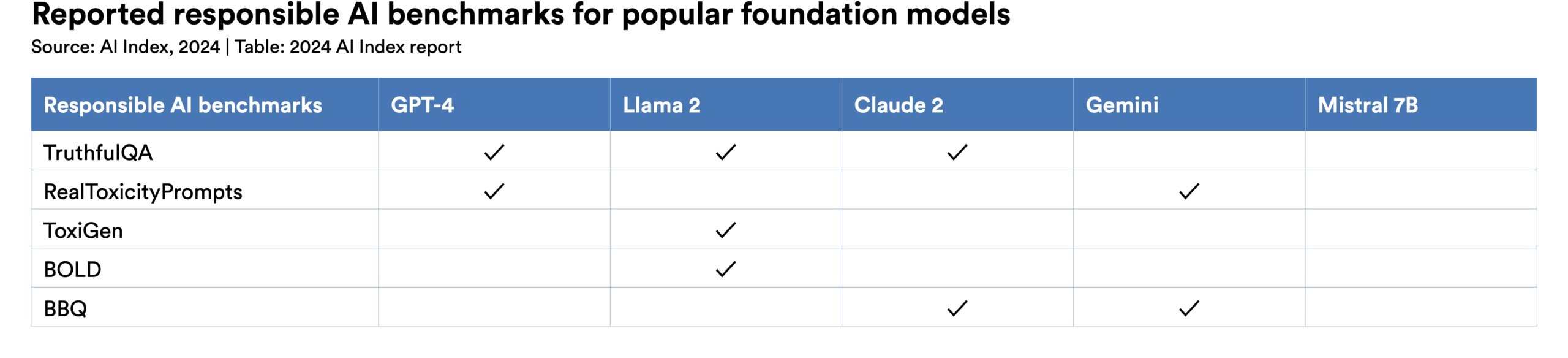

5. Robust and standardized evaluations for LLM responsibility are seriously lacking.

New research from the AI Index reveals a significant lack of standardization in responsible AI reporting. Leading developers, including OpenAI, Google, and Anthropic, primarily test their models against different responsible AI benchmarks. This practice complicates efforts to systematically compare the risks and limitations of top AI models.

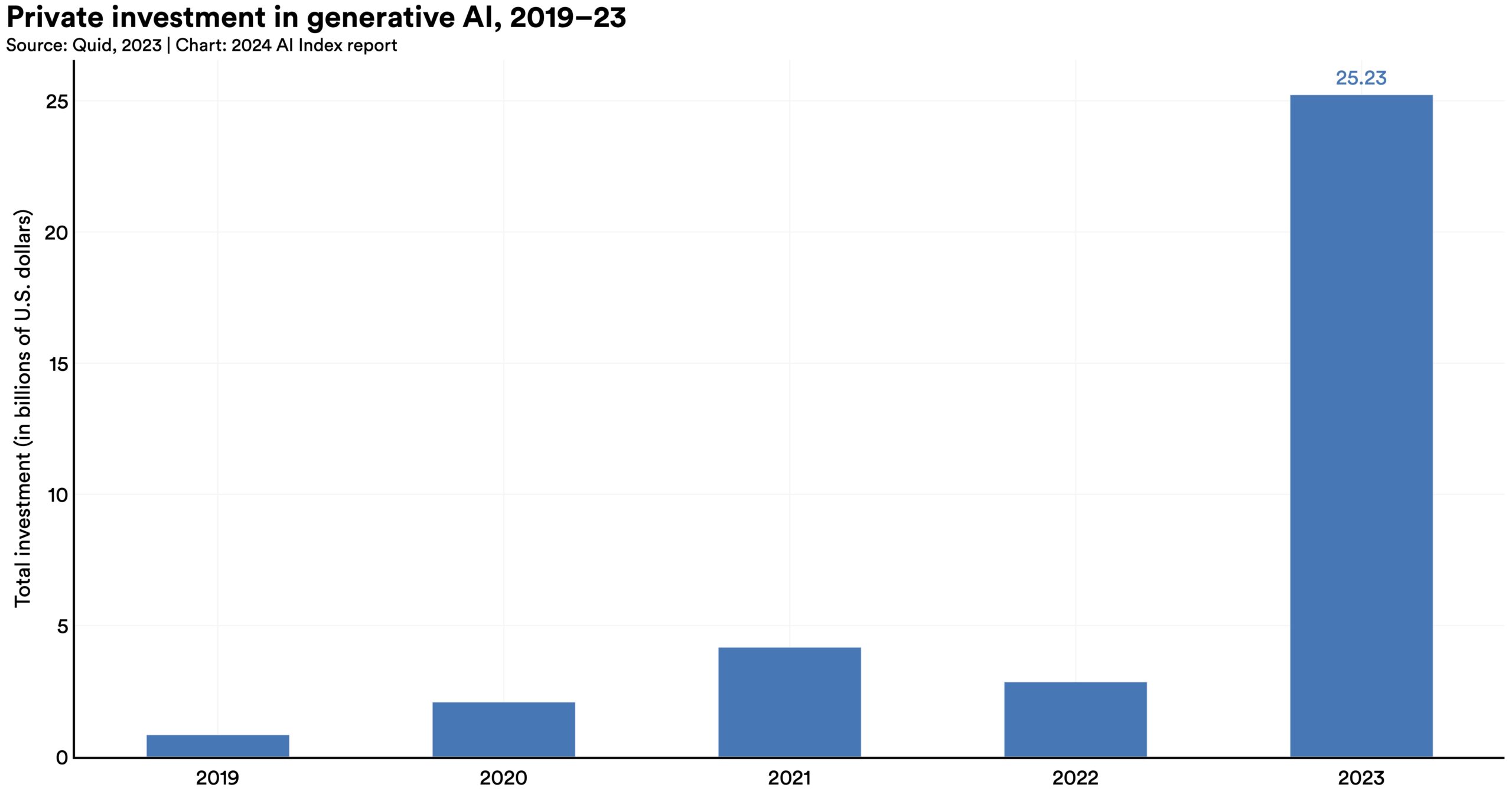

6. Generative AI investment skyrockets.

Despite a decline in overall AI private investment last year, funding for generative AI surged, nearly octupling from 2022 to reach $25.2 billion. Major players in the generative AI space, including OpenAI, Anthropic, Hugging Face, and Inflection, reported substantial fundraising rounds.

7. The data is in: AI makes workers more productive and leads to higher quality work.

In 2023, several studies assessed AI’s impact on labor, suggesting that AI enables workers to complete tasks more quickly and to improve the quality of their output. These studies also demonstrated AI’s potential to bridge the skill gap between low- and high-skilled workers. Still other studies caution that using AI without proper oversight can lead to diminished performance.

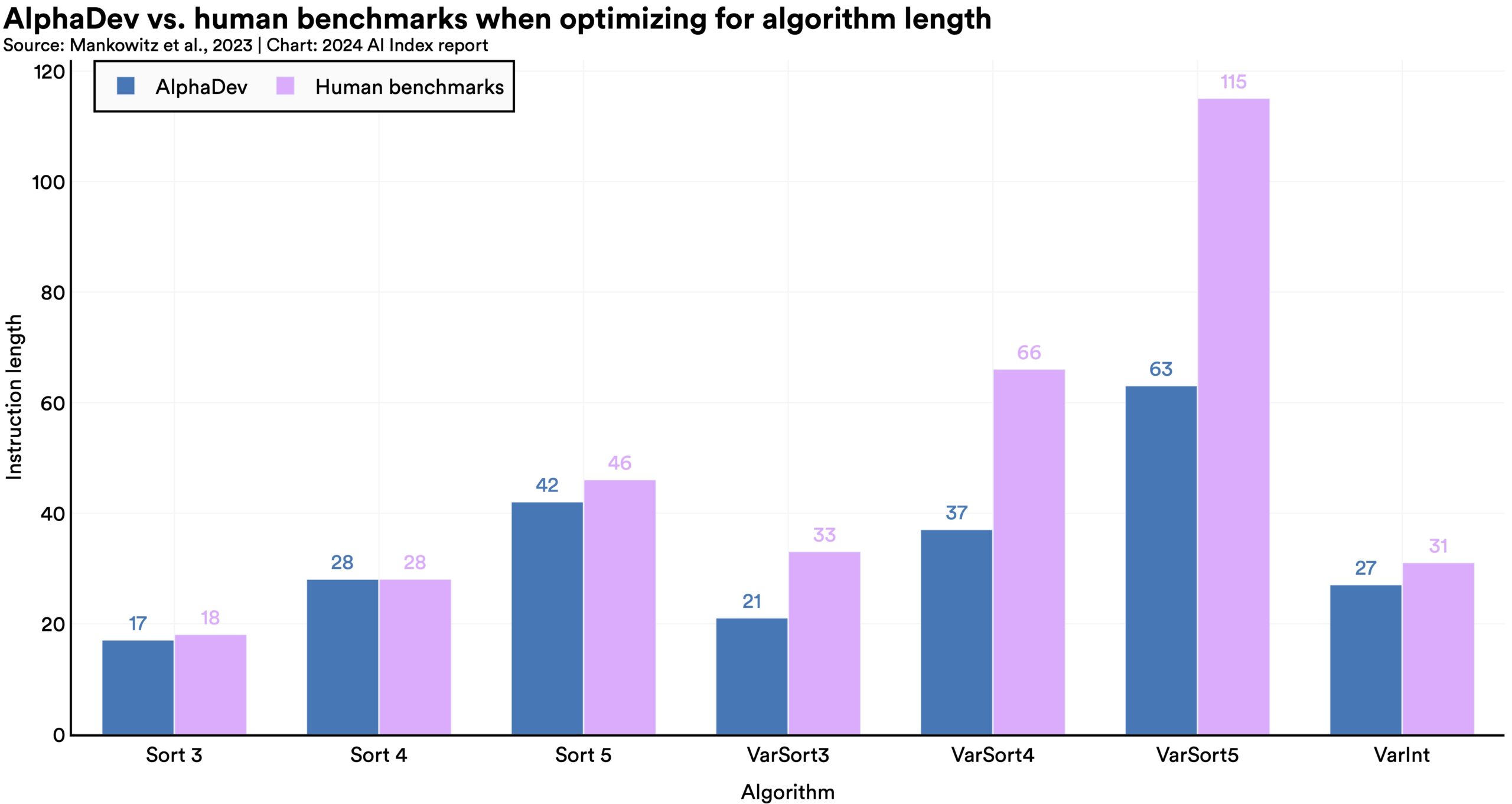

8. Scientific progress accelerates even further, thanks to AI.

In 2022, AI began to advance scientific discovery. In 2023, however, even more significant science-related AI applications launched, from AlphaDev, which makes algorithmic sorting more efficient, to GNoME, which facilitates materials discovery.

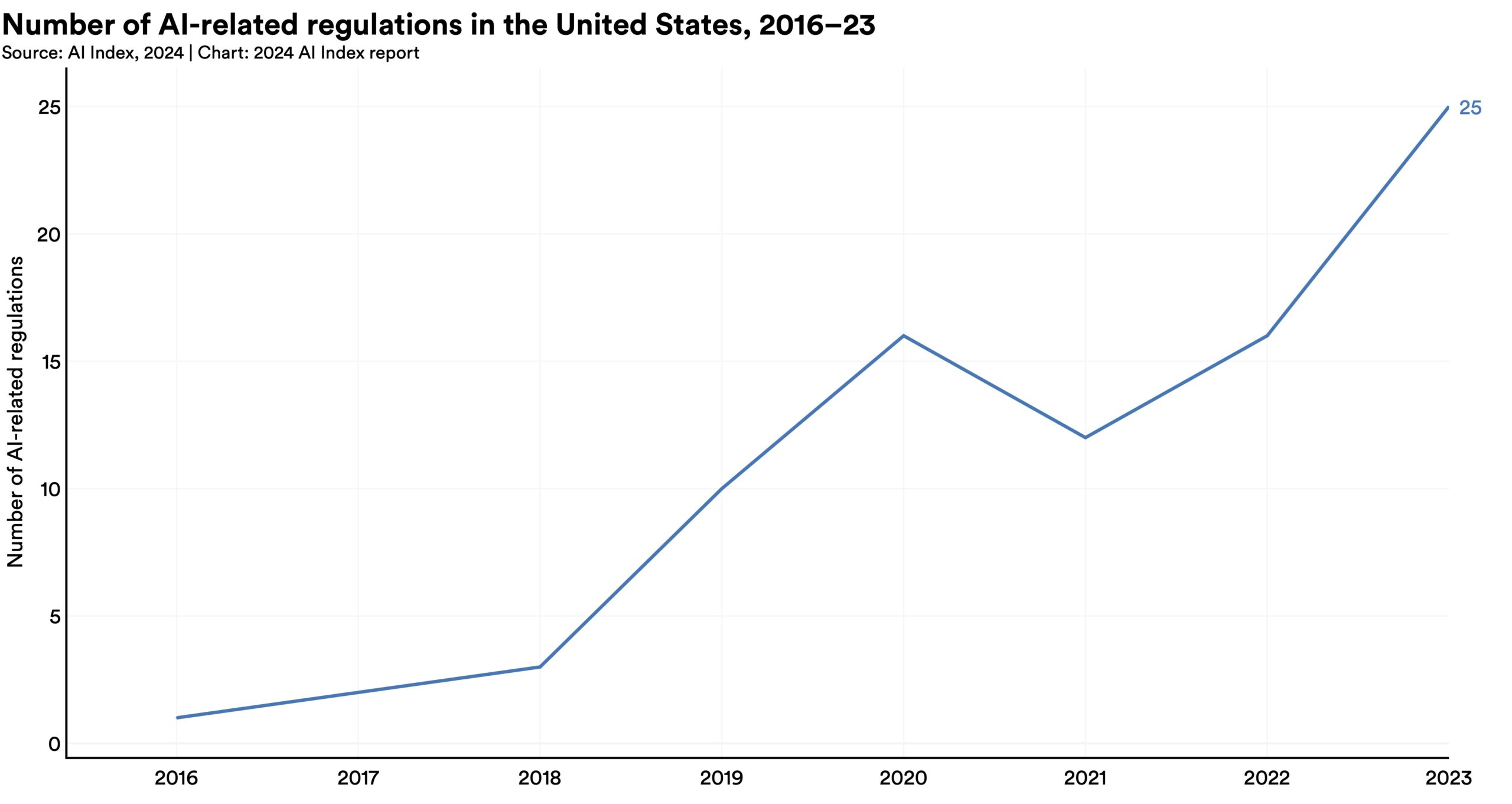

9. The number of AI regulations in the United States sharply increases.

The number of AI-related regulations in the U.S. has risen significantly in the past year and over the last five years. In 2023, there were 25 AI-related regulations, up from just one in 2016. Last year alone, the total number of AI-related regulations grew by 56.3%.

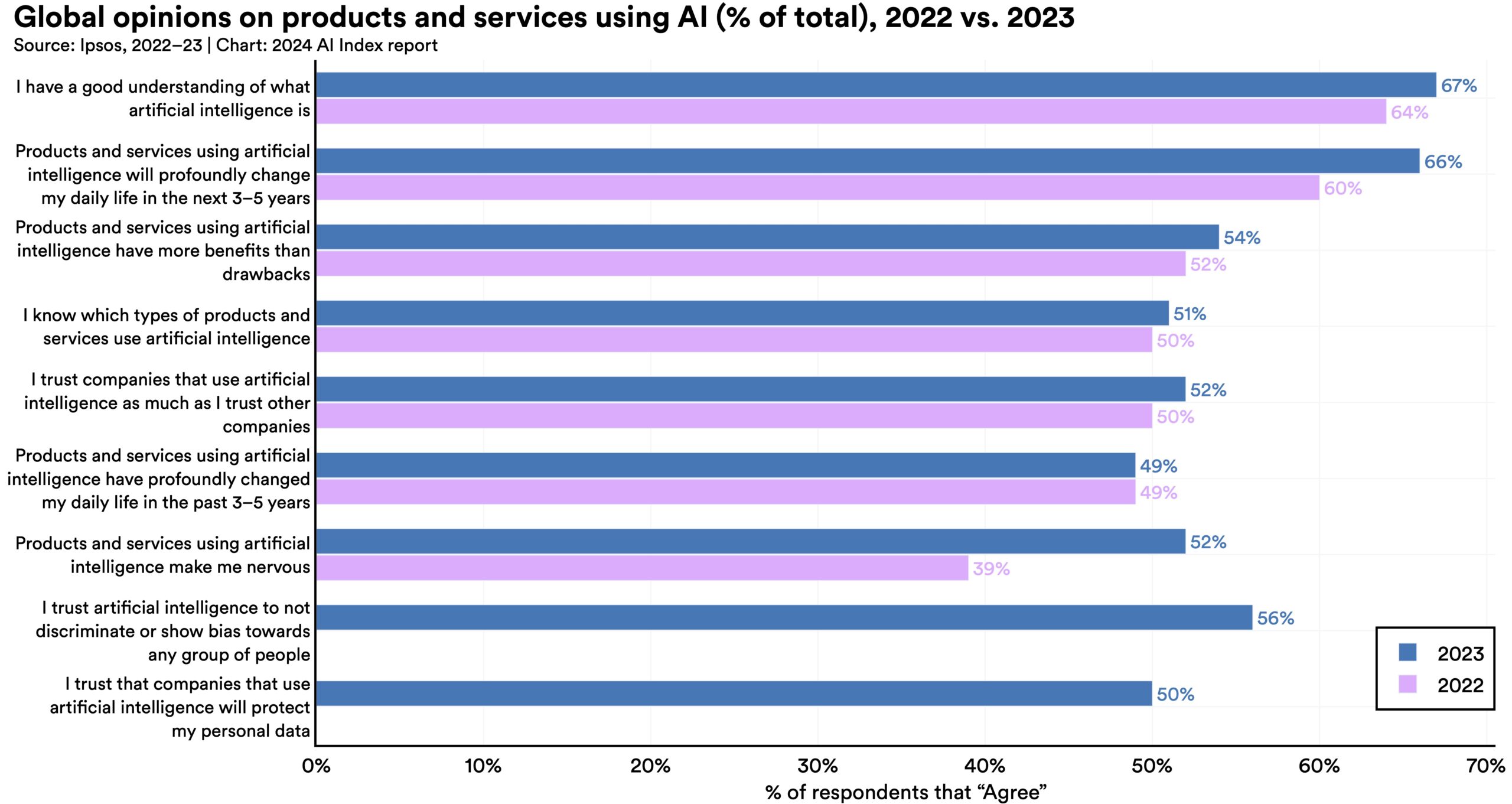

10. People across the globe are more cognizant of AI’s potential impact—and more nervous.

A survey from Ipsos shows that, over the last year, the proportion of those who think AI will dramatically affect their lives in the next three to five years has increased from 60% to 66%. Moreover, 52% express nervousness toward AI products and services, marking a 13 percentage point rise from 2022. In America, Pew data suggests that 52% of Americans report feeling more concerned than excited about AI, rising from 38% in 2022.
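The Ipsos and Pew figures mix two kinds of change: percentage points (the plain difference between two percentages) and relative percent change. The short Python sketch below, with hypothetical helper names, applies both to the numbers quoted above. The 2022 Ipsos nervousness figure of 39% is derived here by subtracting the stated 13-point rise from 52%; it is not quoted directly from the survey.

```python
def point_change(old_pct: float, new_pct: float) -> float:
    """Absolute change between two percentages, in percentage points."""
    return new_pct - old_pct


def relative_change(old_pct: float, new_pct: float) -> float:
    """Relative change between two percentages, in percent of the old value."""
    return (new_pct - old_pct) / old_pct * 100.0


# Ipsos: share expecting AI to dramatically affect their lives, 60% -> 66%.
print(point_change(60, 66))               # prints 6 (percentage points)
print(round(relative_change(60, 66), 1))  # prints 10.0 (percent)

# Ipsos: 52% nervous after a 13-point rise implies 39% in 2022 (derived).
nervous_2022 = 52 - 13
print(nervous_2022)                                 # prints 39
print(round(relative_change(nervous_2022, 52), 1))  # prints 33.3

# Pew: Americans more concerned than excited, 38% -> 52%.
print(point_change(38, 52))               # prints 14
print(round(relative_change(38, 52), 1))  # prints 36.8
```

Point changes stay on the survey's own scale, which is why reports usually quote them; relative changes answer a different question, namely how much larger the group became.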

Chapter 1: Research and Development

This chapter studies trends in AI research and development. It begins by examining trends in AI publications and patents, and then examines trends in notable AI systems and foundation models. It concludes by analyzing AI conference attendance and open-source AI software projects.

- 1. Industry continues to dominate frontier AI research.

- 2. More foundation models and more open foundation models.

- 3. Frontier models get way more expensive.

- 4. The United States leads China, the EU, and the U.K. as the leading source of top AI models.

- 5. The number of AI patents skyrockets.

- 6. China dominates AI patents.

- 7. Open-source AI research explodes.

- 8. The number of AI publications continues to rise.

Chapter 2: Technical Performance

The technical performance section of this year’s AI Index offers a comprehensive overview of AI advancements in 2023. It starts with a high-level overview of AI technical performance, tracing its broad evolution over time. The chapter then examines the current state of a wide range of AI capabilities, including language processing, coding, computer vision (image and video analysis), reasoning, audio processing, autonomous agents, robotics, and reinforcement learning. It also shines a spotlight on notable AI research breakthroughs from the past year, exploring methods for improving LLMs through prompting, optimization, and fine-tuning, and wraps up with an exploration of AI systems’ environmental footprint.

- 1. AI beats humans on some tasks, but not on all.

- 2. Here comes multimodal AI.

- 3. Harder benchmarks emerge.

- 4. Better AI means better data which means … even better AI.

- 5. Human evaluation is in.

- 6. Thanks to LLMs, robots have become more flexible.

- 7. More technical research in agentic AI.

- 8. Closed LLMs significantly outperform open ones.

Chapter 3: Responsible AI

AI is increasingly woven into nearly every facet of our lives. This integration is occurring in sectors such as education, finance, and healthcare, where critical decisions are often based on algorithmic insights. This trend promises to bring many advantages; however, it also introduces potential risks. Consequently, in the past year, there has been a significant focus on the responsible development and deployment of AI systems. The AI community has also become more concerned with assessing the impact of AI systems and mitigating risks for those affected. This chapter explores key trends in responsible AI by examining metrics, research, and benchmarks in four key responsible AI areas: privacy and data governance, transparency and explainability, security and safety, and fairness. Given that 4 billion people are expected to vote globally in 2024, this chapter also features a special section on AI and elections and more broadly explores the potential impact of AI on political processes.

- 1. Robust and standardized evaluations for LLM responsibility are seriously lacking.

- 2. Political deepfakes are easy to generate and difficult to detect.

- 3. Researchers discover more complex vulnerabilities in LLMs.

- 4. Risks from AI are a concern for businesses across the globe.

- 5. LLMs can output copyrighted material.

- 6. AI developers score low on transparency, with consequences for research.

- 7. Extreme AI risks are difficult to analyze.

- 8. The number of AI incidents continues to rise.

- 9. ChatGPT is politically biased.

Chapter 4: Economy

The integration of AI into the economy raises many compelling questions. Some predict that AI will drive productivity improvements, but the extent of its impact remains uncertain. A major concern is the potential for massive labor displacement—to what degree will jobs be automated versus augmented by AI? Companies are already utilizing AI in various ways across industries, but some regions of the world are witnessing greater investment inflows into this transformative technology. Moreover, investor interest appears to be gravitating toward specific AI subfields like natural language processing and data management. This chapter examines AI-related economic trends using data from Lightcast, LinkedIn, Quid, McKinsey, Stack Overflow, and the International Federation of Robotics (IFR). It begins by analyzing AI-related occupations, covering labor demand, hiring trends, skill penetration, and talent availability. The chapter then explores corporate investment in AI, introducing a new section focused specifically on generative AI. It further examines corporate adoption of AI, assessing current usage and how developers adopt these technologies. Finally, it assesses AI’s current and projected economic impact and robot installations across various sectors.

- 1. Generative AI investment skyrockets.

- 2. Already a leader, the United States pulls even further ahead in AI private investment.

- 3. Fewer AI jobs, in the United States and across the globe.

- 4. AI decreases costs and increases revenues.

- 5. Total AI private investment declines again, while the number of newly funded AI companies increases.

- 6. AI organizational adoption ticks up.

- 7. China dominates industrial robotics.

- 8. Greater diversity in robot installations.

- 9. The data is in: AI makes workers more productive and leads to higher quality work.

- 10. Fortune 500 companies start talking a lot about AI, especially generative AI.

Chapter 5: Science and Medicine

This year’s AI Index introduces a new chapter on AI in science and medicine in recognition of AI’s growing role in scientific and medical discovery. It explores 2023’s standout AI-facilitated scientific achievements, including advanced weather forecasting systems like GraphCast and improved material discovery algorithms like GNoME. The chapter also examines medical AI system performance, important 2023 AI-driven medical innovations like SynthSR and ImmunoSEIRA, and trends in FDA approvals of AI-related medical devices.

- 1. Scientific progress accelerates even further, thanks to AI.

- 2. AI helps medicine take significant strides forward.

- 3. Highly knowledgeable medical AI has arrived.

- 4. The FDA approves more and more AI-related medical devices.

Chapter 6: Education

This chapter examines trends in AI and computer science (CS) education, focusing on who is learning, where they are learning, and how these trends have evolved over time. Amid growing concerns about AI’s impact on education, it also investigates the use of new AI tools like ChatGPT by teachers and students. The analysis begins with an overview of the state of postsecondary CS and AI education in the United States and Canada, based on the Computing Research Association’s annual Taulbee Survey. It then reviews data from Informatics Europe regarding CS education in Europe. This year introduces a new section with data from Studyportals on the global count of AI-related English-language study programs. The chapter wraps up with insights into K–12 CS education in the United States from Code.org and findings from the Walton Foundation survey on ChatGPT’s use in schools.

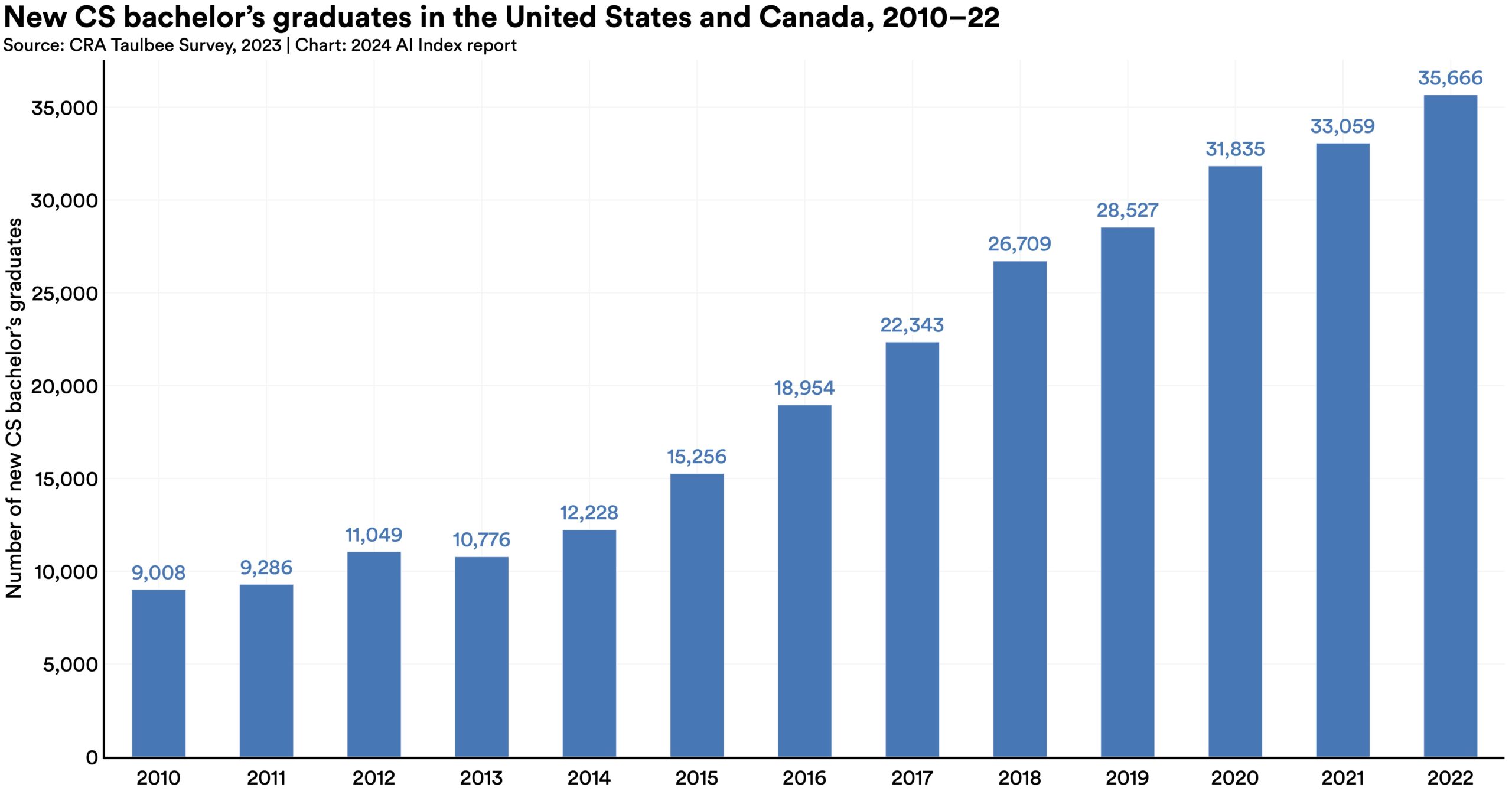

- 1. The number of American and Canadian CS bachelor’s graduates continues to rise, new CS master’s graduates stay relatively flat, and PhD graduates modestly grow.

- 2. The migration of AI PhDs to industry continues at an accelerating pace.

- 3. Less transition of academic talent from industry to academia.

- 4. CS education in the United States and Canada becomes less international.

- 5. More American high school students take CS courses, but access problems remain.

- 6. AI-related degree programs are on the rise internationally.

- 7. The United Kingdom and Germany lead in European informatics, CS, CE, and IT graduate production.

Chapter 7: Policy and Governance

AI’s increasing capabilities have captured policymakers’ attention. Over the past year, several nations and political bodies, such as the United States and the European Union, have enacted significant AI-related policies. The proliferation of these policies reflects policymakers’ growing awareness of the need to regulate AI and to improve their respective countries’ ability to capitalize on its transformative potential. This chapter begins its examination of global AI governance with a timeline of significant AI policymaking events in 2023. It then analyzes global and U.S. AI legislative efforts, studies AI legislative mentions, and explores how lawmakers across the globe perceive and discuss AI. Next, the chapter profiles national AI strategies and regulatory efforts in the United States and the European Union. Finally, it concludes with a study of public investment in AI within the United States.

- 1. The number of AI regulations in the United States sharply increases.

- 2. The United States and the European Union advance landmark AI policy action.

- 3. AI captures U.S. policymaker attention.

- 4. Policymakers across the globe cannot stop talking about AI.

- 5. More regulatory agencies turn their attention toward AI.

Chapter 8: Diversity

The demographics of AI developers often differ from those of users. For instance, a considerable number of prominent AI companies and the datasets utilized for model training originate from Western nations, thereby reflecting Western perspectives. The lack of diversity can perpetuate or even exacerbate societal inequalities and biases. This chapter delves into diversity trends in AI. The chapter begins by drawing on data from the Computing Research Association (CRA) to provide insights into the state of diversity in American and Canadian computer science (CS) departments. A notable addition to this year’s analysis is data sourced from Informatics Europe, which sheds light on diversity trends within European CS education. Next, the chapter examines participation rates at the Women in Machine Learning (WiML) workshop held annually at NeurIPS. Finally, the chapter analyzes data from Code.org, offering insights into the current state of diversity in secondary CS education across the United States. The AI Index is dedicated to enhancing the coverage of data shared in this chapter. Demographic data regarding AI trends, particularly in areas such as sexual orientation, remains scarce. The AI Index urges other stakeholders in the AI domain to intensify their endeavors to track diversity trends associated with AI and hopes to comprehensively cover such trends in future reports.

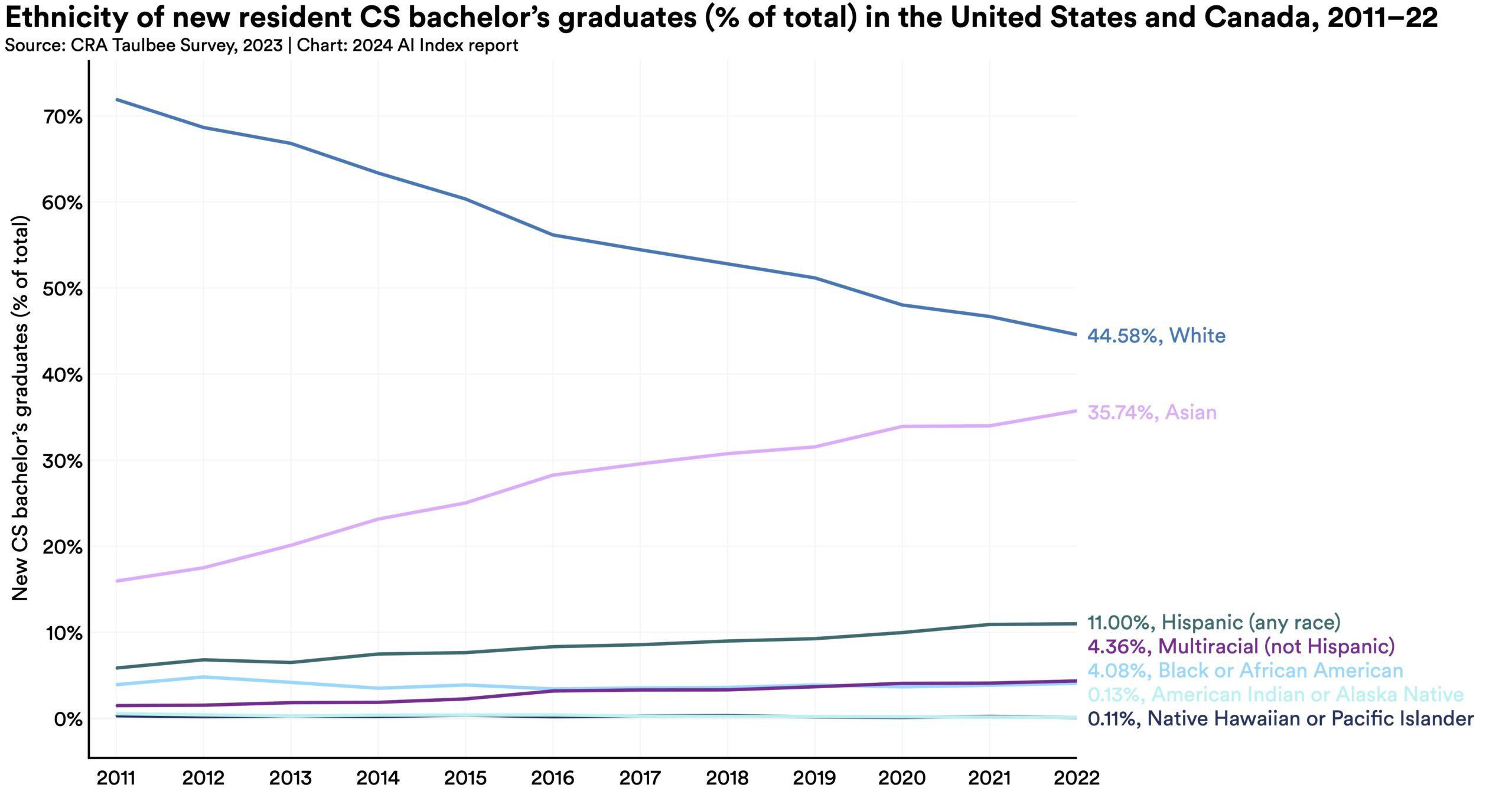

- 1. U.S. and Canadian bachelor’s, master’s, and PhD CS students continue to grow more ethnically diverse.

- 2. Substantial gender gaps persist in European informatics, CS, CE, and IT graduates at all educational levels.

- 3. U.S. K–12 CS education is growing more diverse, reflecting changes in both gender and ethnic representation.

Chapter 9: Public Opinion

As AI becomes increasingly ubiquitous, it is important to understand how public perceptions regarding the technology evolve. Understanding this public opinion is vital in better anticipating AI’s societal impacts and how the integration of the technology may differ across countries and demographic groups. This chapter examines public opinion on AI through global, national, demographic, and ethnic perspectives. It draws upon several data sources: longitudinal survey data from Ipsos profiling global AI attitudes over time, survey data from the University of Toronto exploring public perception of ChatGPT, and data from Pew examining American attitudes regarding AI. The chapter concludes by analyzing mentions of significant AI models on Twitter, using data from Quid.

- 1. People across the globe are more cognizant of AI’s potential impact—and more nervous.

- 2. AI sentiment in Western nations continues to be low, but is slowly improving.

- 3. The public is pessimistic about AI’s economic impact.

- 4. Demographic differences emerge regarding AI optimism.

- 5. ChatGPT is widely known and widely used.


Artificial Intelligence in the 21st Century


IJCAI 2024 Success

AAII students Zihe Liu, Wei Duan and Zhihong Deng have had papers accepted for IJCAI 2024.

IJCAI 2024 will be held on Jeju Island, South Korea, from 3 to 9 August 2024.


The International Joint Conference on Artificial Intelligence (IJCAI) is the premier international gathering of researchers in AI. The 33rd iteration of the IJCAI conference will take place in August this year in Jeju, with the following papers by AAII members accepted for presentation:

- 'A Behavior-Aware Approach for Deep Reinforcement Learning in Non-stationary Environments without Known Change Points', Zihe Liu, Jie Lu, Guangquan Zhang & Junyu Xuan.

- 'Group-Aware Coordination Graph for Multi-Agent Reinforcement Learning', Wei Duan, Jie Lu & Junyu Xuan.

- 'What Hides behind Unfairness? Exploring Dynamics Fairness in Reinforcement Learning', Zhihong Deng, Jing Jiang, Guodong Long & Chengqi Zhang.

AAII researchers look forward to IJCAI 2024 as a chance to dive deeper into their research findings and to advance the next generation of AI agents that are not only intelligent but also reliable, fair and safe.


Title: Capabilities of Gemini Models in Medicine

Abstract: Excellence in a wide variety of medical applications poses considerable challenges for AI, requiring advanced reasoning, access to up-to-date medical knowledge and understanding of complex multimodal data. Gemini models, with strong general capabilities in multimodal and long-context reasoning, offer exciting possibilities in medicine. Building on these core strengths of Gemini, we introduce Med-Gemini, a family of highly capable multimodal models that are specialized in medicine with the ability to seamlessly use web search, and that can be efficiently tailored to novel modalities using custom encoders. We evaluate Med-Gemini on 14 medical benchmarks, establishing new state-of-the-art (SoTA) performance on 10 of them, and surpass the GPT-4 model family on every benchmark where a direct comparison is viable, often by a wide margin. On the popular MedQA (USMLE) benchmark, our best-performing Med-Gemini model achieves SoTA performance of 91.1% accuracy, using a novel uncertainty-guided search strategy. On 7 multimodal benchmarks including NEJM Image Challenges and MMMU (health & medicine), Med-Gemini improves over GPT-4V by an average relative margin of 44.5%. We demonstrate the effectiveness of Med-Gemini's long-context capabilities through SoTA performance on a needle-in-a-haystack retrieval task from long de-identified health records and medical video question answering, surpassing prior bespoke methods using only in-context learning. Finally, Med-Gemini's performance suggests real-world utility by surpassing human experts on tasks such as medical text summarization, alongside demonstrations of promising potential for multimodal medical dialogue, medical research and education. Taken together, our results offer compelling evidence for Med-Gemini's potential, although further rigorous evaluation will be crucial before real-world deployment in this safety-critical domain.



Published on 7.5.2024 in Vol 26 (2024)

This is a member publication of National University of Singapore

Effectiveness of an Artificial Intelligence-Assisted App for Improving Eating Behaviors: Mixed Methods Evaluation

Authors of this article:

Original Paper

- Han Shi Jocelyn Chew¹, PhD;

- Nicholas WS Chew², MBBS;

- Shaun Seh Ern Loong³, MBBS;

- Su Lin Lim⁴, PhD;

- Wai San Wilson Tam¹, PhD;

- Yip Han Chin³, MBBS;

- Ariana M Chao⁵, PhD;

- Georgios K Dimitriadis⁶, MBBS, MSc;

- Yujia Gao⁷;

- Jimmy Bok Yan So⁸, MB ChB, FRCS, MPH;

- Asim Shabbir⁸, MBBS, MMed, FRCS;

- Kee Yuan Ngiam⁹, MBBS, FRCS

1 Alice Lee Centre for Nursing Studies, Yong Loo Lin School of Medicine, National University of Singapore, Singapore, Singapore

2 Department of Cardiology, National University Hospital, Singapore, Singapore

3 Yong Loo Lin School of Medicine, National University of Singapore, Singapore, Singapore

4 Department of Dietetics, National University Hospital, Singapore, Singapore

5 School of Nursing, Johns Hopkins University, Baltimore, MD, United States

6 Department of Endocrinology ASO/EASO COM, King's College Hospital NHS Foundation Trust, London, United Kingdom

7 Division of Hepatobiliary & Pancreatic Surgery, Department of Surgery, National University Hospital, Singapore, Singapore

8 Division of General Surgery (Upper Gastrointestinal Surgery), Department of Surgery, National University Hospital, Singapore, Singapore

9 Division of Thyroid & Endocrine Surgery, Department of Surgery, National University Hospital, Singapore, Singapore

Corresponding Author:

Han Shi Jocelyn Chew, PhD

Alice Lee Centre for Nursing Studies

Yong Loo Lin School of Medicine

National University of Singapore

Level 3, Clinical Research Centre

Block MD11, 10 Medical Drive

Singapore, 117597

Phone: 65 65168687


Background: A plethora of weight management apps are available, but many individuals, especially those living with overweight and obesity, still struggle to achieve adequate weight loss. An emerging area in weight management is the support for one’s self-regulation over momentary eating impulses.

Objective: This study aims to examine the feasibility and effectiveness of a novel artificial intelligence–assisted weight management app in improving eating behaviors in a Southeast Asian cohort.

Methods: A single-group pretest-posttest study was conducted. Participants completed the 1-week run-in period of a 12-week app-based weight management program called the Eating Trigger-Response Inhibition Program (eTRIP). The program's self-monitoring system was built upon 3 main components, namely, (1) chatbot-based check-ins on eating lapse triggers, (2) food-based computer vision image recognition (system built based on local food items), and (3) automated time-based nudges and meal stopwatch. At every mealtime, participants were prompted to take a picture of their food items, which were identified by a computer vision image recognition technology, thereby triggering a set of chatbot-initiated questions on eating triggers such as who the users were eating with. Paired 2-sided t tests were used to compare the differences in the psychobehavioral constructs before and after the 7-day program, including overeating habits, snacking habits, consideration of future consequences, self-regulation of eating behaviors, anxiety, depression, and physical activity. Qualitative feedback was analyzed by content analysis according to 4 steps, namely, decontextualization, recontextualization, categorization, and compilation.

Results: The mean age, self-reported BMI, and waist circumference of the participants were 31.25 (SD 9.98) years, 28.86 (SD 7.02) kg/m², and 92.60 (SD 18.24) cm, respectively. Six of the 7 psychobehavioral constructs improved significantly; anxiety did not. After adjusting for multiple comparisons, statistically significant improvements were found for overeating habits (mean –0.32, SD 1.16; P <.001), snacking habits (mean –0.22, SD 1.12; P <.002), self-regulation of eating behavior (mean 0.08, SD 0.49; P =.007), depression (mean –0.12, SD 0.74; P =.007), and physical activity (mean 1288.60, SD 3055.20 metabolic equivalent task-min/day; P <.001). Forty-one participants reported skipping at least 1 meal (ie, breakfast, lunch, or dinner), summing to 578 (67.1%) of the 862 meals skipped. Of the 230 participants, 80 (34.8%) provided textual feedback that indicated a satisfactory user experience with eTRIP. Four themes emerged, namely, (1) becoming more mindful of self-monitoring, (2) personalized reminders with prompts and chatbot, (3) food logging with image recognition, and (4) engaging with a simple, easy, and appealing user interface. The attrition rate was 8.4% (21/251).

Conclusions: eTRIP appears to be a feasible and effective weight management program; it warrants testing in a larger population to confirm its effectiveness and sustainability as a personalized weight management program for people with overweight and obesity.

Trial Registration: ClinicalTrials.gov NCT04833803; https://classic.clinicaltrials.gov/ct2/show/NCT04833803

Introduction

Overweight and obesity remain a public health concern affecting slightly more than half of the global adult population [ 1 ]. Across 52 countries in the Organization for Economic Co-operation and Development, the Group of Twenty, and the 28-member European Union, treating conditions related to overweight and obesity costs US $425 billion per year, based on purchasing power parity, and each US dollar invested in obesity prevention yields a 6-fold return in economic benefits [ 2 ]. Strategies for maintaining a healthy weight range from policy mandates on nutritional food labeling [ 3 ] to clinical treatments focused on lifestyle modification, pharmacotherapy, and bariatric surgery [ 4 ]. However, the effectiveness of such strategies is limited by insurance coverage [ 5 ] and by challenges with weight loss maintenance [ 6 - 9 ]; some individuals have been reported to regain up to 100% of their initial weight loss within 5 years [ 9 , 10 ].

With rapid digitalization and widespread smartphone penetration worldwide, weight loss apps have been gaining popularity, as they help overcome the temporospatial challenges of in-person weight loss programs [ 11 ]. For instance, participants enrolled in conventional weight management programs typically attend multiple face-to-face sessions at designated facilities, which can be burdensome and inconvenient because they must schedule appointments and travel to a facility that may lie outside their usual mobility pattern. Moreover, such programs are resource-intensive to maintain, requiring a multidisciplinary team of trained health care professionals (eg, physicians, dietitians, physiotherapists, nurses), infrastructure (eg, counselling room), and equipment (eg, weighing scale, stadiometer). Well-known weight loss apps on the market include MyFitnessPal [ 12 ], MyPlate Calorie Tracker [ 13 ], and Fitbit [ 14 ]. In Singapore, Healthy 365 [ 15 ] is available to the public, while nBuddy [ 16 ] is used for the clinical population. These apps mostly focus on calorie tracking, health status tracking, and progress monitoring. Increasingly, apps are enhanced with features that synchronize health metrics across apps to provide a more holistic progress monitoring experience. For a fee, some apps even match users to a health coach who provides personalized weight management plans to support weight loss. However, there is a need for apps that monitor and support one's self-regulation over momentary eating impulses, which are often triggered and influenced by dietary lapse triggers such as visual food cues, eating out, negative affect, and sleep deprivation [ 17 - 20 ].

Self-regulation of eating behaviors during weight loss treatment commonly includes portion control, increasing fruit and vegetable consumption, reducing consumption of unhealthy foods (sugar-sweetened beverages and high-fat food items), and reducing overall caloric intake [ 17 ]. To address this need, we aimed to examine the feasibility and effectiveness of a novel artificial intelligence (AI)-assisted weight management app in improving eating behaviors and to explore the mechanism by which this app influences eating behaviors, as hypothesized in our earlier work [ 21 ].

Study Design

A single-group pretest-posttest study was conducted and reported according to the TREND (Transparent Reporting of Evaluations with Nonrandomized Designs) checklist ( Multimedia Appendix 1 ) [ 22 ]. Despite its limitations, this design was deemed the most appropriate and feasible for gaining a preliminary understanding of the usability, acceptability, and effectiveness of the app [ 23 ].

Participant Recruitment

Participants older than 21 years with BMI ≥23 kg/m² who were not undergoing a commercial weight loss program were recruited from January 2022 to October 2022 through social media platforms and physical recruitment at a local tertiary hospital's specialist weight management clinic in Singapore. Using G*Power (version 3.1.9.7) [ 24 ], we estimated that 248 participants were required to detect a small effect size of 0.2 at a .05 significance level with 80% power, accounting for an attrition rate of 20%. To be conservative, 250 participants were recruited.
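As a rough cross-check of the sample size above, the sketch below estimates the number of pairs needed for a 2-sided paired t test with a standardized effect size of 0.2, α=.05, and 80% power, then inflates the estimate for 20% attrition. It assumes the G*Power calculation targeted a paired t test (the analysis the study used). It is not what G*Power computes internally (G*Power uses the exact noncentral t distribution); it is a normal approximation with Guenther's small-sample correction, so results may differ from G*Power's by a participant or so. The function names are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def paired_t_pairs_needed(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> float:
    """Approximate (unrounded) number of pairs for a 2-sided paired t test."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    # Normal-approximation sample size plus Guenther's correction for using t instead of z
    return ((z_alpha + z_beta) / effect_size) ** 2 + z_alpha ** 2 / 2


def inflate_for_attrition(n: float, attrition: float) -> int:
    """Number to recruit so that about n remain after the expected dropout."""
    return ceil(n / (1 - attrition))


n_pairs = paired_t_pairs_needed(0.2)
print(ceil(n_pairs))                          # 199 completers needed
print(inflate_for_attrition(n_pairs, 0.20))   # 248 to recruit
```

Inflating the unrounded estimate (about 198.1 / 0.8) gives 248, in line with the reported figure; rounding up to 199 first and then inflating would give 249.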

Intervention

Immediately after completing the pretest questionnaire, participants were onboarded to the Eating Trigger-Response Inhibition Program (eTRIP) app by a trained research assistant to complete the 1-week run-in of the program. During onboarding, participants were invited to enter their anthropometric details, desired weight loss goals, and motivation. They were also encouraged to personalize certain app functions, such as the timing of the check-in prompts and their preferred name for interacting with a chatbot. At every mealtime (at least 3 times a day), participants were prompted to take a picture of their food; the food was immediately recognized by a food-based computer vision image recognition system, which then triggered a set of chatbot-initiated questions on eating triggers (eg, how they were feeling). These questions were developed based on our past work on eating behaviors [ 25 - 30 ]. Participants could view their image-based food log and eating habits on a dashboard, reflect on their eating habits throughout the day, and set goals and action plans for the next day. On the 8th day, all participants' user accounts were locked: they could no longer make changes but could still view their check-in logs. Participants could also provide feedback on the app by filling out the comments section on one of the app pages. Participants were reimbursed SGD 25 (SGD 1=US $0.74) for completing this program.

The eTRIP app was developed as a 12-week AI-assisted, app-based, self-regulation program targeted at improving weight loss through healthy eating. eTRIP was developed largely based on a modified temporal self-regulation theory [ 31 , 32 ], behavioral change taxonomy [ 33 ], and our previous work on healthy eating and weight loss [ 27 - 29 , 34 , 35 ]. This includes studies on people with overweight and obesity in the areas of personal motivators, self-regulation facilitators, and barriers [ 27 ]; the potential of AI, apps, and chatbots in improving weight loss [ 6 , 25 , 29 ]; perceptions and needs of AI to increase its adoption in weight management [ 26 ]; and the essential elements of a weight loss app [ 28 ]. The development of eTRIP was split into 2 phases: (1) development of an AI-assisted self-monitoring system and (2) development of an AI-assisted behavioral nudging system. In this paper, we report the feasibility and effectiveness of an AI-assisted self-monitoring system after a 1-week run-in. The self-monitoring system is built upon 3 main components, namely, (1) chatbot-based check-ins on eating lapse triggers, (2) food-based computer vision image recognition (system built based on local food items), and (3) automated time-based nudges and meal stopwatch.
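The mealtime check-in sequence described above (photo, food recognition, then chatbot questions on eating triggers) can be sketched as a small pipeline. This is a hypothetical illustration, not eTRIP's implementation: `recognize_food` and `ask` are placeholder callables standing in for the food image recognizer and the chatbot, `MealCheckIn`, `run_meal_check_in`, and `TRIGGER_QUESTIONS` are invented names, and the 2 questions merely paraphrase the examples given in the text. The meal stopwatch and time-based nudges are not modeled.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable

# Hypothetical trigger questions, paraphrasing the examples in the text
TRIGGER_QUESTIONS = (
    "How are you feeling right now?",
    "Who are you eating with?",
)


@dataclass
class MealCheckIn:
    """One logged meal: when it happened, what was recognized, and the trigger answers."""
    timestamp: datetime
    food_labels: list[str]
    trigger_answers: dict[str, str] = field(default_factory=dict)


def run_meal_check_in(
    photo: bytes,
    recognize_food: Callable[[bytes], list[str]],  # placeholder for the image recognizer
    ask: Callable[[str], str],                     # placeholder for one chatbot turn
    now: datetime,
) -> MealCheckIn:
    """Photo -> recognized food items -> chatbot questions on eating triggers."""
    check_in = MealCheckIn(timestamp=now, food_labels=recognize_food(photo))
    # Logging the meal is what triggers the chatbot questions
    for question in TRIGGER_QUESTIONS:
        check_in.trigger_answers[question] = ask(question)
    return check_in


record = run_meal_check_in(
    b"<image bytes>",  # placeholder photo
    recognize_food=lambda _photo: ["chicken rice"],
    ask=lambda q: "alone" if q.startswith("Who") else "stressed",
    now=datetime(2022, 3, 1, 12, 30),
)
print(record.food_labels)  # ['chicken rice']
print(record.trigger_answers)
```

Passing the recognizer and chatbot in as callables keeps the sketch testable without any real model; a production system would replace them with actual services.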

All participants completed the same self-report questionnaire before and after the 1-week run-in of the app, which reflected their sociodemographic profile, BMI, waist circumference, intention to improve eating behaviors, habits of overeating (Self-Report Habit Index) [ 36 ], habits of snacking [ 36 ], consideration of future consequences (Consideration of Future Consequences Scale-6 items) [ 37 ], self-regulation of eating behavior (Self-Regulation of Eating Behavior Questionnaire) [ 38 ], physical activity (International Physical Activity Questionnaire-Short Form) [ 39 ], anxiety symptoms (Generalized Anxiety Disorder-2 items) [ 40 ], and depressive symptoms (Patient Health Questionnaire-2 items) [ 41 ]. Details are reported in Multimedia Appendix 2 . The primary outcomes were overeating habits, snacking habits, immediate thinking, self-regulation of eating habits, depression, anxiety, and physical activity. The secondary outcomes were their subscale scores.
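Physical activity outcomes are reported in metabolic equivalent task (MET) minutes. To illustrate how IPAQ-SF responses are commonly converted, the sketch below applies the IPAQ scoring protocol's weights (3.3 METs for walking, 4.0 for moderate, and 8.0 for vigorous activity) and its rule of truncating any single activity type reported above 180 minutes per day. It is an assumption that the study scored the IPAQ-SF this way; the paper reports MET-min/day, and its exact conversion and data-cleaning steps are not described in this section. `ActivityReport` and `ipaq_met_minutes_per_week` are hypothetical names.

```python
from dataclasses import dataclass

# MET weights from the IPAQ short-form scoring protocol
MET_WEIGHTS = {"walking": 3.3, "moderate": 4.0, "vigorous": 8.0}
MAX_MINUTES_PER_DAY = 180  # IPAQ truncation rule for a single activity type


@dataclass
class ActivityReport:
    """Self-reported days per week and minutes per day for one activity type."""
    days_per_week: int
    minutes_per_day: float


def ipaq_met_minutes_per_week(reports: dict[str, ActivityReport]) -> float:
    """Total MET-min/week: sum over activity types of MET weight x days x minutes."""
    total = 0.0
    for activity, report in reports.items():
        days = min(max(report.days_per_week, 0), 7)  # keep days within 0-7
        minutes = min(report.minutes_per_day, MAX_MINUTES_PER_DAY)
        total += MET_WEIGHTS[activity] * days * minutes
    return total


weekly = ipaq_met_minutes_per_week({
    "walking": ActivityReport(days_per_week=5, minutes_per_day=30),
    "moderate": ActivityReport(days_per_week=3, minutes_per_day=20),
    "vigorous": ActivityReport(days_per_week=2, minutes_per_day=30),
})
print(weekly)  # 1215.0
```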

Data Analysis

SPSS statistical software (version 27; IBM Corp) [ 42 ] was used for the analyses. The baseline characteristics of the participants were presented as mean (SD) and frequency (%). Paired 2-sided t tests were used to compare the differences in the psychobehavioral constructs before and after the 7-day program, including overeating habits, snacking habits, consideration of future consequences, self-regulation of eating behaviors, anxiety, depression, and physical activity. To account for the increased risk of a type I error due to multiple comparisons [ 43 ], the Bonferroni-corrected significance level was set to P ≤.007 (ie, .05 divided by the 7 primary outcomes). Qualitative feedback was analyzed using content analysis according to 4 steps, namely, decontextualization, recontextualization, categorization, and compilation [ 44 ]. Feedback was first consolidated verbatim and read iteratively by 2 coders (Nagadarshini Nicole Rajasegaran and HSJC). The verbatim feedback was then independently divided into meaning units by 2 reviewers. Meaning units were then reconstituted, categorized, and reported as themes and subthemes.
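The two quantitative steps above can be sketched as follows: a paired t statistic computed from each participant's posttest-minus-pretest difference, and a Bonferroni threshold obtained by dividing α=.05 by the 7 primary outcomes (.05/7≈.0071, reported as .007). This is an illustrative sketch, not the SPSS procedure the study used; the scores are made-up values for 5 hypothetical participants, and converting t to a P value would require a t distribution (eg, from SciPy), which is omitted to keep the example dependency-free.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre: list[float], post: list[float]) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom for the differences post - pre."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    standard_error = stdev(diffs) / sqrt(len(diffs))  # SE of the mean difference
    return mean(diffs) / standard_error, len(diffs) - 1


def bonferroni_alpha(alpha: float, n_comparisons: int) -> float:
    """Per-comparison level that keeps the family-wise error rate at or below alpha."""
    return alpha / n_comparisons


# Made-up pretest and posttest scores for 5 hypothetical participants
pre = [3.2, 2.8, 3.5, 3.0, 2.9]
post = [2.9, 2.7, 3.1, 2.8, 2.9]

t_stat, df = paired_t(pre, post)
threshold = bonferroni_alpha(0.05, 7)  # 7 primary outcomes
print(f"t({df}) = {t_stat:.2f}; Bonferroni threshold = {threshold:.4f}")
# t(4) = -2.83; Bonferroni threshold = 0.0071
```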

Ethics Approval

This single-group pretest-posttest study was approved by the National Healthcare Group Domain Specific Review Board (ref 2020/01439) and registered with ClinicalTrials.gov (ref NCT04833803) on April 6, 2021.

Baseline Characteristics of the Participants

A total of 251 participants were enrolled in this study (Chew HSJ, unpublished data, 2023); 20 (7.9%) participants dropped out of the 1-week program because they were unable to perform check-ins every day. Among those who completed the program (n=231), 1 participant was removed from the analyses due to ineligibility. The mean age, self-reported BMI, and waist circumference of the participants were 31.25 (SD 9.98) years, 28.86 (SD 7.02) kg/m², and 92.6 (SD 18.24) cm, respectively ( Table 1 ). Approximately 47.8% (111/230) of the participants were male, indicating a good mix of both sexes, and most participants were single (169/230, 73.6%), Chinese (181/230, 78.7%), and university educated (148/230, 64.1%).


Mean Baseline Scores on Each Outcome Variable

The mean baseline scores on each outcome variable were calculated for participants who completed and who dropped out of the 1-week program ( Table 2 ). As the dropout rate was only 8.4% (20/251), statistical comparisons between those who dropped out and those who completed the program were not deemed necessary.

Table 2 abbreviations: SRHI, Self-Report Habit Index; CFCS-6, Consideration of Future Consequences Scale-6 items; SREBQ, Self-Regulation of Eating Behavior Questionnaire; IPAQ-SF, International Physical Activity Questionnaire-Short Form; MET, metabolic equivalent task.

Pretest and Posttest Mean Differences

Six of the 7 psychobehavioral constructs improved significantly; anxiety did not. After adjusting for multiple comparisons, however, statistically significant improvements remained only for overeating habits, snacking habits, self-regulation of eating behavior, depression, and physical activity ( Table 3 ). Forty-one participants reported skipping at least 1 meal (ie, breakfast, lunch, or dinner), summing to a total of 578 (67.1%) of the 862 meals skipped.

Table 3 notes: significance was set at P <.007. Abbreviations: CFCS-6, Consideration of Future Consequences Scale-6 items (with its immediate and future subscales); SREBQ, Self-Regulation of Eating Behavior Questionnaire; IPAQ-SF, International Physical Activity Questionnaire-Short Form.

User Engagement

Among those who completed the program, 97% (46,867/48,316) of chatbot-based questions were completed. Because participants had the option to add check-ins for snacks, the percentage of completed check-ins could not be accurately computed.

Qualitative Feedback

Of the 230 participants, 80 (34.8%) provided textual feedback that indicated satisfactory experience with eTRIP. Four themes emerged, namely, (1) becoming more mindful of self-monitoring, (2) personalized reminders with prompts and chatbot, (3) food logging with image recognition, and (4) engaging with a simple, easy, and appealing user interface.

Becoming More Mindful of Self-Monitoring

By checking in with the app for every meal, the participants mentioned being more aware of their unhealthy eating habits and more mindful of their next meal. One participant said, “It (eTRIP) incentivizes me to stick to my diet plan because I am reminded of my diet plan daily. Ticking the box that indicates ‘I did not meet my diet plan’ made me guilty and it motivates me to opt for healthier food choice the next time round” (Female, Chinese, 22 years old). Another participant said, “I really liked the eTRIP app! Has a lot of potential for further expansion and use by more people. I like how it sends prompts during selected times of the day to be careful of what we see on social media. The rating of our mood before meals also helps me know how mood can affect my eating patterns. Lastly, the stopwatch function is great because it reminds me to eat more mindfully” (Male, Chinese, 27 years old).

Personalized Reminders With Prompts and Chatbot

Some participants expressed appreciation for reminders to check in with themselves about their triggers of overeating. One participant said, “I like that there’s a reminder to check in for every meal and users get to decide what time the app should prompt!” (Female, Indian, 27 years old). Some also suggested that the prompting system time its prompts based on the user’s previous check-in timings, to better predict mealtimes and prompt check-ins intuitively. One participant suggested, “I think what would make this better is if you could aggregate the time the meals are entered from the past few days and estimate the time the user will normally eat and auto-adjust the timing…” (Female, Chinese, 23 years old). Others suggested including reminders on how to make their meal options healthier: “Might be good to have reminders that reminds us to eat healthy with some tips on how to choose food” (Male, Chinese, 25 years old).
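The participant's suggestion above, estimating a user's usual mealtime from recent check-ins and shifting the prompt accordingly, could be prototyped along these lines. This is a hypothetical sketch of a feature eTRIP did not offer in this study; `suggest_prompt_time`, the 10-minute lead, and the sample times are illustrative assumptions. The median is used rather than the mean so that one unusually late lunch does not drag the prompt later, and the sketch assumes a meal's check-ins never straddle midnight.

```python
from datetime import time
from statistics import median


def suggest_prompt_time(recent_check_ins: list[time], lead_minutes: int = 10) -> time:
    """Suggest a prompt time shortly before the user's typical check-in for one meal."""
    if not recent_check_ins:
        raise ValueError("need at least one past check-in")
    minutes = [t.hour * 60 + t.minute for t in recent_check_ins]
    typical = median(minutes)  # robust to the odd early or late meal
    target = max(0, int(typical) - lead_minutes)  # never earlier than midnight
    return time(target // 60, target % 60)


# Lunch check-ins from the past 5 days (hypothetical), including one late outlier
lunch_times = [time(12, 40), time(13, 5), time(12, 55), time(15, 30), time(12, 50)]
print(suggest_prompt_time(lunch_times))  # 12:45:00
```

A fuller version would keep one estimate per meal slot and fall back to the user's manually chosen prompt time until enough check-ins had accumulated.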

Food Logging With Image Recognition

Many participants appreciated the image recognition-based food logging, finding it accurate and convenient. One participant said, “It is very accurate in determining the food I’ve eaten just from the picture, and this saved me a lot of time from typing out the food I’ve eaten” (Male, Chinese, 21 years old).

Engaging With a Simple, Easy, and Appealing User Interface

All the participants who commented on the user experience expressed being impressed with the user interface and structure. One participant said, “the flow was smooth, quite clear. Graphics were cute. Very easy to input my info (information) especially from the homepage, I like how there’s the ability to skip a meal” (Female, Malay, 25 years old). Another participant said, “The app is very smart, … yes it’s very easy to fill and I loss (lost) like 0.5kg?” (Female, Chinese, 25 years old).

Participants’ Suggestions

In terms of areas for improvement, participants wanted (1) more options and rating scales for each domain of eating triggers instead of typing into the “others” field (although previously entered text was stored for repeated entries); (2) a summary of the instances in which they achieved the goal of the day, which users set each day for the next day (from a preset list of goals); (3) examples of standard portions and meal frequency; and (4) feedback on how to improve the unhealthy meals logged.

It remains unclear which real-time interventions can effectively address eating lapse triggers and improve eating behavior self-regulation, lapse events, weight loss, and weight maintenance [ 45 ]. One intervention that has been tested is OnTrack, a just-in-time adaptive smartphone app that uses machine learning to predict dietary lapses from repeated assessments of lapse triggers (ecological momentary assessment). OnTrack is used in conjunction with existing weight loss apps such as the WeightWatchers app and provides personalized recommendations to prevent dietary lapses. The compliance rate for completing the lapse trigger surveys in OnTrack was 62.9% over 3 months, and the study sample consisted mostly of White women [ 46 ]. Evidence has shown that the factors influencing overweight and obesity are population-specific, shaped by socioeconomic, cultural, and genetic factors, among others [ 45 , 47 ]. Singapore is a multiethnic society with a unique food culture influenced by various racial beliefs and traditions [ 48 ]. Differences in geographical, social, environmental, and genetic characteristics could therefore define a different set of triggers and responses to such weight loss apps.

Principal Findings

In this paper, we report the effectiveness of a weeklong AI-assisted weight loss app in improving overeating habits, snacking habits, immediate thinking, self-regulation of eating habits, depression, and physical activity. Interestingly, anxiety symptoms did not improve significantly after using eTRIP, potentially because those who completed the program already had low levels of anxiety, leaving little room for improvement (ie, a floor effect). We also report qualitative user feedback on the experience of using eTRIP: users appreciated the app for helping them become more mindful of self-monitoring and for its personalized reminders with prompts and chatbot, its food logging with image recognition, and its simple, easy, and appealing user interface. The significant improvements observed among the participants suggest that this app has the potential to influence weight loss in a Southeast Asian cohort with overweight and obesity. The qualitative feedback also informs future app development to enhance user engagement and reduce dropout rates.

Eating habits contribute to overweight and obesity [ 49 , 50 ]. Encouraged by an obesogenic environment, overeating is commonly triggered by situational factors such as food novelty or variety, social company (eg, eating with certain people), affective states (which trigger emotional eating), and distractions (eg, concurrent tasks) [ 30 , 50 - 53 ]. Other studies have suggested that people at risk for obesity exhibit hyperresponsivity of the neural reward system to calorie-dense foods, which is associated with increased food consumption [ 54 ]. Given users' feedback that the app made them more mindful of their eating patterns, the significant improvement in overeating and snacking habits could have been due to increased awareness of one's maladaptive eating habits and, subsequently, greater motivation to change. This is consistent with a review that reported the effectiveness of mindful eating interventions in reducing food consumption among people with overweight and obesity [ 55 ]. Our qualitative findings suggest that self-monitoring one's eating behavior through chatbot-initiated check-ins could enhance mindful eating and reduce overeating without the need for formal mindful eating training. This could eventually lead to reduced total food consumption and weight loss; however, more quantitative evidence is needed to support this point.

It is noteworthy that some participants reported skipping meals as planned, which might have led to reduced energy consumption. However, this has to be examined further, as studies have shown that the calories avoided during a skipped meal may be compensated by an increase in snacking or overeating during mealtimes [ 56 ]. One additional element that can be explored in future studies is the effectiveness of promoting healthy snacking, which includes snacking on foods rich in proteins, fruits, vegetables, and whole grains, as opposed to nutrient-poor and energy-dense foods [ 57 , 58 ]. These healthier alternatives have been found not only to be associated with earlier satiety but also to be more nutritious, with their contents being more consistent with the established dietary recommendations and guidelines [ 59 , 60 ]. This strategy can be explored in conjunction with the current approach to decrease participants’ overall snacking habits.

The improvement in the self-regulation of eating habits could be attributed to several factors, including the app content focused on reminding participants of their weight loss goals and to adopt healthier eating habits of less snacking and overeating during mealtimes. In particular, in commonly stigmatized populations like those with overweight and obesity, personalization of interventions enhances one’s feeling of being taken care of, nurtured, and respected, providing them with a sense of confidence [ 61 ]. This may have improved individuals’ willingness to engage with the eTRIP content, knowing that they would be well-respected and seen as individuals through personalized chatbot conversations and reminders [ 62 ]. Other studies have shown that personalized eHealth interventions are more effective than conventional programs in enhancing weight loss maintenance, BMI, waist circumference, and various other metabolic indicators [ 63 ].

In conjunction with improvements in eating habits, participants also engaged in greater levels of physical activity by the end of this study. Increased health consciousness and self-education about the impacts and types of physical activity may explain the observed increase in physical activity among the participants [ 64 ]. Various studies have found that a combination of diet and exercise is superior to diet-only interventions in inducing weight loss [ 65 ]. The level of physical activity is also an important factor for improving long-term weight loss [ 66 - 68 ]. Moderate amounts of physical activity have been observed to prevent weight regain after weight loss [ 65 , 68 , 69 ], and the American College of Sports Medicine recommends 200-300 minutes of moderate physical activity a week to prevent weight regain [ 70 ]. In our study, low and moderate levels of exercise increased significantly among the participants. Although the sustainability of this increase is still unknown, the preliminary data are encouraging and show the potential of the app to influence physical activity. High levels of exercise, however, did not change significantly. Additional interventions, including educational materials about the benefits and types of exercise along with personalized exercise reminders, could further increase the app's success in raising moderate and high levels of exercise among its participants [ 71 , 72 ].

In addition to improvements in eating habits and levels of physical activity, psychological factors also changed among the participants: mean depressive symptoms decreased significantly by the end of the weeklong program. Various studies have shown that healthy living, characterized by factors such as healthy eating and sufficient physical activity, can positively affect psychological factors such as mood and emotions [ 73 ]. Healthy eating that adheres to dietary recommendations has been found to reduce inflammation, increase the levels of various micronutrients such as vitamins, and regulate the levels of simple sugars, all of which are protective against mental illnesses, especially depression [ 73 - 76 ]. Studies have also shown that chatbots may decrease depressive symptoms among some users. Chatbots allow individuals to practice self-care in an environment that is neither costly nor stigmatizing [ 77 ]. This may have enabled participants to be more open with their emotions and to gain relief through their interaction with the chatbot as a proxy for human interaction [ 77 ]. Improvements in psychological factors such as depressive symptoms have also been positively associated with weight loss and maintenance, which could further increase the effectiveness of weight loss efforts among people with overweight and obesity [ 45 ].

Strengths and Limitations

This study was the first to characterize the effectiveness of an AI-assisted weight loss app in a Southeast Asian cohort. One strength of this study was the demographic profile of the participants, which was generally representative of the Singaporean population in terms of sex and ethnicity. Considering population-specific determinants of overweight and obesity during the app's design would also have increased its applicability in this population [ 78 ], as the design accounted for the nuances and practical needs of its target demographic. For example, the food image recognition system, which was built on local food items, reduced the time and effort required to log food, improved the usability of the intervention, and enhanced the user experience. The success of this app thus provides evidence that considering population-specific underpinnings and practical requirements is essential to the successful design and implementation of a weight loss intervention [ 79 ].

Although the app showed significant potential in this weeklong trial, this study is limited by its short time frame, which makes it difficult to understand the midterm to long-term impacts of using the app. Nevertheless, it is reassuring that various behavioral and psychological indicators improved significantly despite the limited time frame. Based on participants' feedback, the app may be improved in specific aspects, including (1) refining the choices available for the various survey fields, such as providing a drop-down menu for selecting weight loss goals; (2) providing additional feedback and weekly summaries so that participants know their progress in various aspects; and (3) providing educational materials that give participants the means to improve, especially on what to do after eating lapses and how to snack healthily. Another limitation was the lack of feedback quotes from older individuals compared with younger individuals. This could be due to various reasons; for example, lower media literacy among older individuals might present additional obstacles to providing feedback [ 80 ]. Lastly, we did not collect information on participants' medical and pharmacological history, although certain diseases and drugs are known to influence weight gain through various metabolic and neural pathways. Weight and waist circumference were also self-reported, so data from these measures should be interpreted cautiously.

This study was the first to characterize the effectiveness of an AI-assisted weight loss app in a Southeast Asian cohort. Its positive findings demonstrate the feasibility of implementing this app and its considerable potential to support weight loss efforts, especially among individuals with overweight and obesity. Future efforts should extend the duration and scale of this program to better understand the midterm to long-term effects of this app.

Conflicts of Interest

AMC has served on advisory boards to Eli Lilly and Boehringer Ingelheim and received grant support, on behalf of the University of Pennsylvania, from Eli Lilly and WW (Weight Watchers). No other authors declare conflicts of interest.

TREND (Transparent Reporting of Evaluations with Nonrandomized Designs) checklist.

Details on outcome measures.

- Abdelaal M, le Roux CW, Docherty NG. Morbidity and mortality associated with obesity. Ann Transl Med. Apr 2017;5(7):161.

- OECD. The heavy burden of obesity: the economics of prevention. OECD Health Policy Studies. Oct 10, 2019:1-100.

- Hawkes C, Smith TG, Jewell J, Wardle J, Hammond RA, Friel S, et al. Smart food policies for obesity prevention. Lancet. Jun 2015;385(9985):2410-2421.

- Johns DJ, Hartmann-Boyce J, Jebb SA, Aveyard P, Behavioural Weight Management Review Group. Diet or exercise interventions vs combined behavioral weight management programs: a systematic review and meta-analysis of direct comparisons. J Acad Nutr Diet. Oct 2014;114(10):1557-1568.

- Clarke B, Kwon J, Swinburn B, Sacks G. Understanding the dynamics of obesity prevention policy decision-making using a systems perspective: A case study of Healthy Together Victoria. PLoS One. 2021;16(1):e0245535.

- Chew HSJ, Koh WL, Ng JSHY, Tan KK. Sustainability of weight loss through smartphone apps: systematic review and meta-analysis on anthropometric, metabolic, and dietary outcomes. J Med Internet Res. Sep 21, 2022;24(9):e40141.

- Booth HP, Prevost TA, Wright AJ, Gulliford MC. Effectiveness of behavioural weight loss interventions delivered in a primary care setting: a systematic review and meta-analysis. Fam Pract. Dec 2014;31(6):643-653.

- LeBlanc ES, Patnode CD, Webber EM, Redmond N, Rushkin M, O'Connor EA. Behavioral and pharmacotherapy weight loss interventions to prevent obesity-related morbidity and mortality in adults: updated evidence report and systematic review for the US Preventive Services Task Force. JAMA. Sep 18, 2018;320(11):1172-1191.

- MacLean PS, Wing RR, Davidson T, Epstein L, Goodpaster B, Hall KD, et al. NIH working group report: Innovative research to improve maintenance of weight loss. Obesity (Silver Spring). Jan 2015;23(1):7-15.

- Daley A, Jolly K, Madigan C, et al. A brief behavioural intervention to promote regular self-weighing to prevent weight regain after weight loss: a RCT. Public Health Research. 2019;7.

- Hadžiabdić MO, Mucalo I, Hrabač P, Matić T, Rahelić D, Božikov V. Factors predictive of drop-out and weight loss success in weight management of obese patients. J Hum Nutr Diet. Feb 2015;28 Suppl 2:24-32.

- Evans D. MyFitnessPal. Br J Sports Med. Jan 27, 2016;51(14):1101-1102.

- Garcia R. Utilization, Integration & Evaluation of LIVESTRONG's MyPlate Telehealth Technology. URL: https://www.researchgate.net/publication/273635099_Utilization_Integration_Evaluation_of_LIVESTRONG's_MyPlate_Telehealth_Technology [accessed 2023-04-01]

- Hartman SJ, Nelson SH, Weiner LS. Patterns of Fitbit use and activity levels throughout a physical activity intervention: exploratory analysis from a randomized controlled trial. JMIR Mhealth Uhealth. Feb 05, 2018;6(2):e29.

- Yao J, Tan CS, Chen C, Tan J, Lim N, Müller-Riemenschneider F. Bright spots, physical activity investments that work: National Steps Challenge, Singapore: a nationwide mHealth physical activity programme. Br J Sports Med. Sep 2020;54(17):1047-1048.

- Lim SL, Johal J, Ong KW, Han CY, Chan YH, Lee YM, et al. Lifestyle intervention enabled by mobile technology on weight loss in patients with nonalcoholic fatty liver disease: randomized controlled trial. JMIR Mhealth Uhealth. Apr 13, 2020;8(4):e14802.

- Birkett D. Disinhibition. In: The Psychiatry of Stroke. London, UK: Routledge; 2012:150-161.

- Carels RA, Hoffman J, Collins A, Raber AC, Cacciapaglia H, O'Brien WH. Ecological momentary assessment of temptation and lapse in dieting. Eat Behav. 2001;2(4):307-321.

- Kwasnicka D, Dombrowski SU, White M, Sniehotta FF. N-of-1 study of weight loss maintenance assessing predictors of physical activity, adherence to weight loss plan and weight change. Psychol Health. Jun 2017;32(6):686-708.

- McKee HC, Ntoumanis N, Taylor IM. An ecological momentary assessment of lapse occurrences in dieters. Ann Behav Med. Dec 2014;48(3):300-310.

- Chew HSJ, Loong SSE, Lim SL, Tam WSW, Chew NWS, Chin YH, et al. Socio-demographic, behavioral and psychological factors associated with high BMI among adults in a Southeast Asian multi-ethnic society: a structural equation model. Nutrients. Apr 10, 2023;15(8):1826.

- Des Jarlais DC, Lyles C, Crepaz N, TREND Group. Improving the reporting quality of nonrandomized evaluations of behavioral and public health interventions: the TREND statement. Am J Public Health. Mar 2004;94(3):361-366.

- Knapp T. Why is the one-group pretest-posttest design still used? Clin Nurs Res. Oct 2016;25(5):467-472.

- Faul F, Erdfelder E, Lang AG, Buchner A. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods. May 2007;39(2):175-191.

- Chew HSJ. The use of artificial intelligence-based conversational agents (chatbots) for weight loss: scoping review and practical recommendations. JMIR Med Inform. Apr 13, 2022;10(4):e32578.

- Chew HSJ, Achananuparp P. Perceptions and needs of artificial intelligence in health care to increase adoption: scoping review. J Med Internet Res. Jan 14, 2022;24(1):e32939.

- Chew HSJ, Gao Y, Shabbir A, Lim SL, Geetha K, Kim G, et al. Personal motivation, self-regulation barriers and strategies for weight loss in people with overweight and obesity: a thematic framework analysis. Public Health Nutr. Feb 22, 2022;25(9):2426-2435.

- Chew H, Lim S, Kim G, Kayambu G, So BYJ, Shabbir A, et al. Essential elements of weight loss apps for a multi-ethnic population with high BMI: a qualitative study with practical recommendations. Transl Behav Med. Apr 03, 2023;13(3):140-148.

- Chew HSJ, Ang WHD, Lau Y. The potential of artificial intelligence in enhancing adult weight loss: a scoping review. Public Health Nutr. Feb 17, 2021;24(8):1993-2020.

- Chew HSJ, Lau ST, Lau Y. Weight-loss interventions for improving emotional eating among adults with high body mass index: A systematic review with meta-analysis and meta-regression. Eur Eat Disord Rev. Jul 2022;30(4):304-327.

- Chew HSJ, Sim KLD, Choi KC, Chair SY. Effectiveness of a nurse-led temporal self-regulation theory-based program on heart failure self-care: A randomized controlled trial. Int J Nurs Stud. Mar 2021;115:103872.

- Hall PA, Fong GT. Temporal self-regulation theory: A model for individual health behavior. Health Psychol Rev. Mar 2007;1(1):6-52.

- Abraham C, Michie S. A taxonomy of behavior change techniques used in interventions. Health Psychol. May 2008;27(3):379-387.

- Chew HSJ, Li J, Chng S. Improving adult eating behaviours by manipulating time perspective: a systematic review and meta-analysis. Psychol Health. Jan 24, 2023:1-17.

- Chew HSJ, Rajasegaran NN, Chng S. Effectiveness of interactive technology-assisted interventions on promoting healthy food choices: a scoping review and meta-analysis. Br J Nutr. Jan 25, 2023;130(7):1250-1259.

- Verplanken B, Orbell S. Reflections on past behavior: a self-report index of habit strength. J Appl Soc Psychol. Jul 31, 2006;33(6):1313-1330.

- Chng S, Chew HSJ, Joireman J. When time is of the essence: Development and validation of brief consideration of future (and immediate) consequences scales. Pers Individ Dif. Feb 2022;186:111362.

- Kliemann N, Beeken RJ, Wardle J, Johnson F. Development and validation of the Self-Regulation of Eating Behaviour Questionnaire for adults. Int J Behav Nutr Phys Act. Aug 02, 2016;13:87.

- Craig CL, Marshall AL, Sjöström M, Bauman AE, et al. International Physical Activity Questionnaire: 12-country reliability and validity. Med Sci Sports Exerc. 2003;35(8):1381-1395.

- Kroenke K, Spitzer RL, Williams JB, Monahan PO, Löwe B. Anxiety disorders in primary care: prevalence, impairment, comorbidity, and detection. Ann Intern Med. Mar 06, 2007;146(5):317-325.

- Kroenke K, Spitzer RL, Williams JBW. The Patient Health Questionnaire-2: validity of a two-item depression screener. Med Care. Nov 2003;41(11):1284-1292.

- IBM SPSS statistics for Windows. IBM Corp. May 21, 2021. URL: https://www.ibm.com/products/spss-statistics [accessed 2024-03-28]

- VanderWeele T, Mathur M. Some desirable properties of the Bonferroni correction: is the Bonferroni correction really so bad? Am J Epidemiol. Mar 01, 2019;188(3):617-618.

- Bengtsson M. How to plan and perform a qualitative study using content analysis. NursingPlus Open. 2016;2:8-14.

- Varkevisser RDM, van Stralen MM, Kroeze W, Ket JCF, Steenhuis IHM. Determinants of weight loss maintenance: a systematic review. Obes Rev. Feb 2019;20(2):171-211.

- Forman EM, Goldstein SP, Crochiere RJ, Butryn ML, Juarascio AS, Zhang F, et al. Randomized controlled trial of OnTrack, a just-in-time adaptive intervention designed to enhance weight loss. Transl Behav Med. Nov 25, 2019;9(6):989-1001.

- Qasim A, Turcotte M, de Souza RJ, Samaan MC, Champredon D, Dushoff J, et al. On the origin of obesity: identifying the biological, environmental and cultural drivers of genetic risk among human populations. Obes Rev. Feb 2018;19(2):121-149.

- Executive summary on National Population Health Survey 2016/17. Singapore MoH. URL: https://www.moh.gov.sg/docs/librariesprovider5/resources-statistics/reports/executive-summary-nphs-2016_17.pdf [accessed 2023-04-01]

- McCrory MA, Suen VM, Roberts SB. Biobehavioral influences on energy intake and adult weight gain. J Nutr. Dec 2002;132(12):3830S-3834S.

- Davis C, Levitan RD, Muglia P, Bewell C, Kennedy JL. Decision-making deficits and overeating: a risk model for obesity. Obes Res. Jun 2004;12(6):929-935.

- Hetherington MM. Cues to overeat: psychological factors influencing overconsumption. Proc Nutr Soc. Feb 28, 2007;66(1):113-123.

- Borer K. Understanding human physiological limitations and societal pressures in favor of overeating helps to avoid obesity. Nutrients. Jan 22, 2019;11(2):227.

- Borer KT. Why we eat too much, have an easier time gaining than losing weight, and expend too little energy: suggestions for counteracting or mitigating these problems. Nutrients. Oct 26, 2021;13(11):3812.