[SOLVED] Local Variable Referenced Before Assignment

Python treats a variable that is only referenced inside a function as global. Any variable that is assigned a value in a function’s body is assumed to be local unless it is explicitly declared as global.

Why Does This Error Occur?

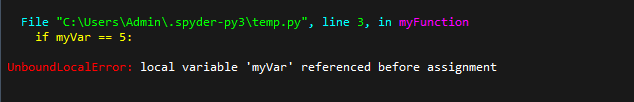

UnboundLocalError: local variable referenced before assignment occurs when a local variable is used before it has been assigned a value. Python has no variable declarations, so it looks a name up each time it is used. When a name that is local to the function has no value yet, Python raises this error.

Before we hop into the solutions, let’s look at what global and local variables are.

Local Variable Declarations vs. Global Variable Declarations

| Local Variables | Global Variables |
|---|---|
| Local variables are declared (assigned) within a function. | Global variables are declared in the global scope, outside any function. |
| A local variable is created when the function is called and destroyed when the call finishes. | A global variable is created when its assignment runs and exists in memory until the program stops. |
| Local variables can only be accessed within their own function. | All functions of the program can access global variables. |
| Local variables are unaffected by changes in the global scope, which makes them safer. | Global variables are less safe from accidental modification because they are accessible from anywhere. |


Local Variable Referenced Before Assignment Error with Explanation


Let’s look at the following function:
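The original listing did not survive here, so the following is only a minimal sketch of the kind of function the explanation describes. The names myVar and myFunction come from the text; the values 10 and 20 are assumptions. The exact wording of the message depends on the Python version (3.11 and later phrase it as "cannot access local variable ... where it is not associated with a value").

```python
myVar = 10  # first assignment, before myFunction is defined (value assumed)

def myFunction():
    # myVar is assigned further down in this body, so Python treats it as
    # local everywhere in the function. This read therefore finds no value.
    print(myVar)
    myVar = 20  # second assignment, inside the function (value assumed)

error = None
try:
    myFunction()
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```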

Explanation

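The variable myVar is assigned a value twice: once before the definition of myFunction and once within myFunction itself. The assignment inside the function makes myVar local to myFunction, so reading it there before that assignment runs raises the error.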

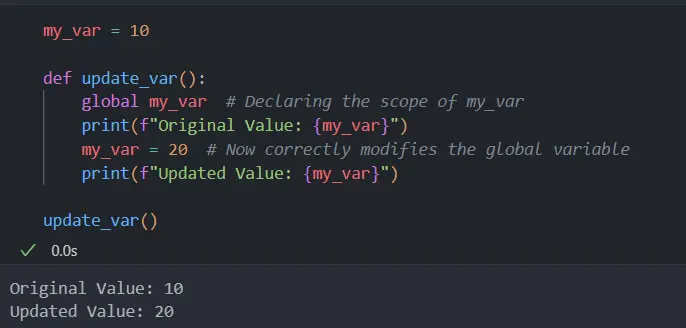

Using Global Variables

Declaring the variable as global inside the function tells Python to use the variable defined outside the function.
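A minimal sketch of the global approach, reusing the names myVar and myFunction from the text. The values are assumptions made for illustration.

```python
myVar = 10  # global variable (value assumed)

def myFunction():
    global myVar      # every use of myVar below refers to the global
    print(myVar)      # reads the global value
    myVar = 20        # rebinds the global, not a new local

myFunction()
print(myVar)
```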

Create Functions that Take in Parameters

Instead of initializing myVar as a global or local variable, it can be passed to the function as a parameter. This removes the function's dependence on a global variable.
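As a sketch of the parameter approach: the function receives the value as an argument and returns the result. The arithmetic and the return value are assumptions added so the example has something to show.

```python
def myFunction(myVar):
    # myVar is a parameter, so it is already bound when the body starts.
    print(myVar)
    myVar = myVar + 10  # rebinding a parameter is fine (increment assumed)
    return myVar

result = myFunction(10)
print(result)
```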

UnboundLocalError: local variable ‘DISTRO_NAME’

This error may occur when trying to launch the Anaconda Navigator in Linux Systems.

Upon launching Anaconda Navigator, the opening screen freezes and doesn’t proceed to load.

Try and update your Anaconda Navigator with the following command.

If solution one doesn’t work, you have to edit a file located at

After finding and opening the Python file, make the following changes:

In the function on line 159, simply add the line:

DISTRO_NAME = None

Save the file and re-launch Anaconda Navigator.

DJANGO – Local Variable Referenced Before Assignment [Form]

The program takes information from a form filled out by a user. Accordingly, an email is sent using the information.

Upon running you get the following error:

We have created a class myForm that creates instances of Django forms. It extracts the user’s name, email, and message to be sent.

A function GetContact is created to use the information from the Django form and produce an email. It takes one parameter, request. Before sending the email, the function verifies that the form is valid. If it is, .get() is called to fetch the name, email, and message. Finally, the email is sent via the send_mail function.

Why does the error occur?

We initialize form only under the if request.method == "POST" condition. With a GET request that branch is skipped, so the variable form never gets defined.
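The Django view itself is not reproduced here. The sketch below mimics the same control flow in plain Python so it runs without Django: get_contact_broken, get_contact_fixed, and the dict standing in for the form are all hypothetical. Binding form on every path is one common remedy, offered here as an assumption rather than the original author's fix.

```python
def get_contact_broken(method, data):
    if method == "POST":
        form = dict(data)  # stand-in for building the form from POST data
    # On a GET request the branch above is skipped, so form is never bound.
    return form

def get_contact_fixed(method, data):
    if method == "POST":
        form = dict(data)
    else:
        form = {}  # stand-in for an empty form shown on GET
    return form

error = None
try:
    get_contact_broken("GET", {})
except UnboundLocalError as exc:
    error = exc

print(type(error).__name__)
print(get_contact_fixed("GET", {}))
```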

Local variable Referenced before assignment but it is global

This is a common error that happens when we reference a variable before giving it a value. It happens with local variables. A global variable can be read inside a function, but it cannot be reassigned there unless it is declared with the global keyword.

This error message is raised when a variable is referenced before it has been assigned a value within the local scope of a function, even though it is a global variable.

Here’s an example to help illustrate the problem:
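The listing is missing from this copy, so here is a sketch consistent with the description: x and my_func are named in the text, while the value 10 is an assumption.

```python
x = 10  # global variable (value assumed)

def my_func():
    print(x)  # x is local because of the += below, and has no value yet
    x += 1

error = None
try:
    my_func()
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```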

In this example, x is a global variable that is defined outside of the function my_func(). However, when we try to print the value of x inside the function, we get a UnboundLocalError with the message “local variable ‘x’ referenced before assignment”.

This is because the += operator implicitly creates a local variable within the function’s scope, which shadows the global variable of the same name. Since we’re trying to access the value of x before it’s been assigned a value within the local scope, the interpreter raises an error.

To fix this, you can use the global keyword to explicitly refer to the global variable within the function’s scope:
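A sketch of that fix, again with x and my_func taken from the text and the starting value assumed.

```python
x = 10  # global variable (value assumed)

def my_func():
    global x  # x now refers to the module-level variable
    x += 1    # reads and rebinds the global
    print(x)

my_func()
```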

In the above example, the global keyword tells Python that we want to modify the value of the global variable x rather than create a new local variable. This allows us to access and modify the global variable within the function’s scope without causing any errors.

Local variable ‘version’ referenced before assignment ubuntu-drivers

This error occurs with Ubuntu version drivers. To solve this error, you can re-specify the version information and give a split as 2 –

Here, p_name means package name.

With the help of the threading module, you can avoid using global variables in multi-threading. Make sure you lock and release your threads correctly to avoid the race condition.

When a variable that is created locally is read before it is assigned, Python raises an UnboundLocalError because the local name has no value yet.

We have examined the local variable referenced before assignment exception in Python. The differences between local and global variables have been explained, and multiple solutions to the issue have been provided.


Local variable referenced before assignment in Python

Last updated: Apr 8, 2024


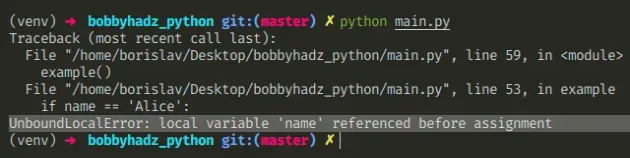

The Python "UnboundLocalError: Local variable referenced before assignment" occurs when we reference a local variable before assigning a value to it in a function.

To solve the error, mark the variable as global inside the function, e.g. global my_var.

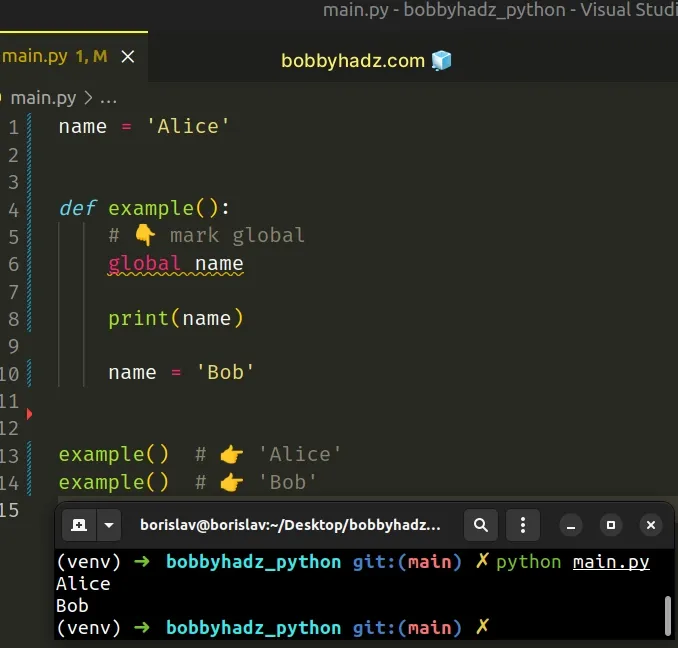

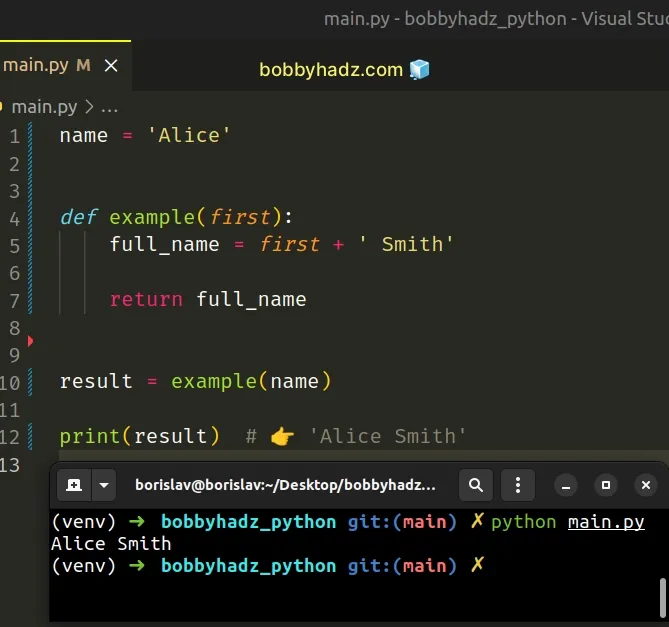

Here is an example of how the error occurs.
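The code for this example is missing from this copy. A minimal sketch that matches the surrounding description follows; the names name and example appear in the text, and the string values are assumptions.

```python
name = "Alice"  # global variable (value assumed)

def example():
    print(name)   # fails: name is local because it is assigned below
    name = "Bob"  # this assignment makes name local to example()

error = None
try:
    example()
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```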

We assign a value to the name variable in the function.

# Mark the variable as global to solve the error

To solve the error, mark the variable as global in your function definition.
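A sketch of the same function with the global statement added. The values are assumptions.

```python
name = "Alice"  # global variable (value assumed)

def example():
    global name   # must appear before name is used in the function
    print(name)   # reads the global
    name = "Bob"  # rebinds the global

example()
print(name)
```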

If a variable is assigned a value in a function's body, it is a local variable unless explicitly declared as global .

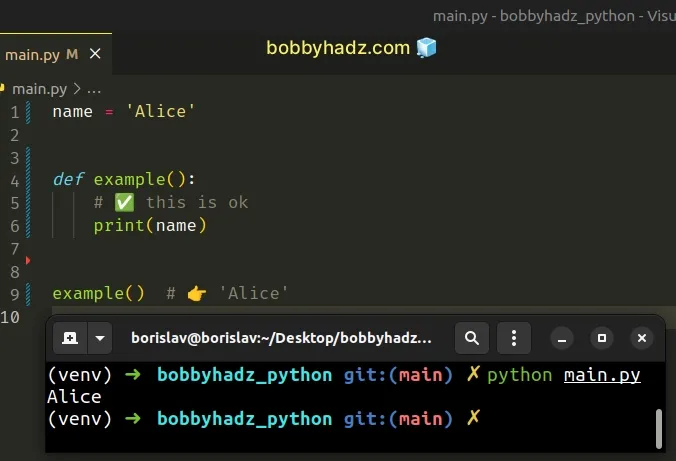

# Local variables shadow global ones with the same name

You could reference the global name variable from inside the function but if you assign a value to the variable in the function's body, the local variable shadows the global one.

Accessing the name variable in the function is perfectly fine.

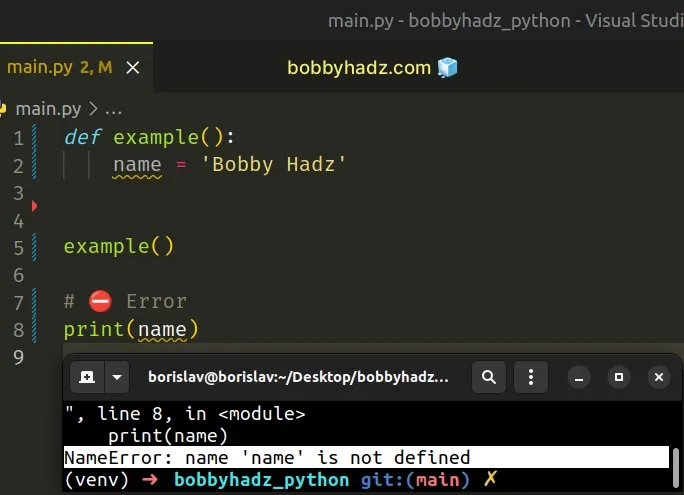

On the other hand, variables declared in a function cannot be accessed from the global scope.

The name variable is declared in the function, so trying to access it from outside causes an error.
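Both directions can be sketched together. The functions greet and make_local and the variable nickname are hypothetical names chosen so the two cases do not collide in one script.

```python
name = "Alice"  # global variable (value assumed)

def greet():
    # Only reads name, so Python looks it up in the global scope.
    return "Hello, " + name

def make_local():
    nickname = "Al"  # local: exists only while make_local() runs
    return nickname

print(greet())
make_local()

error = None
try:
    print(nickname)  # nickname was never defined in the global scope
except NameError as exc:
    error = exc
    print("NameError:", exc)
```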

Make sure you don't try to access the variable before using the global keyword, otherwise, you'd get the SyntaxError: name 'X' is used prior to global declaration error.

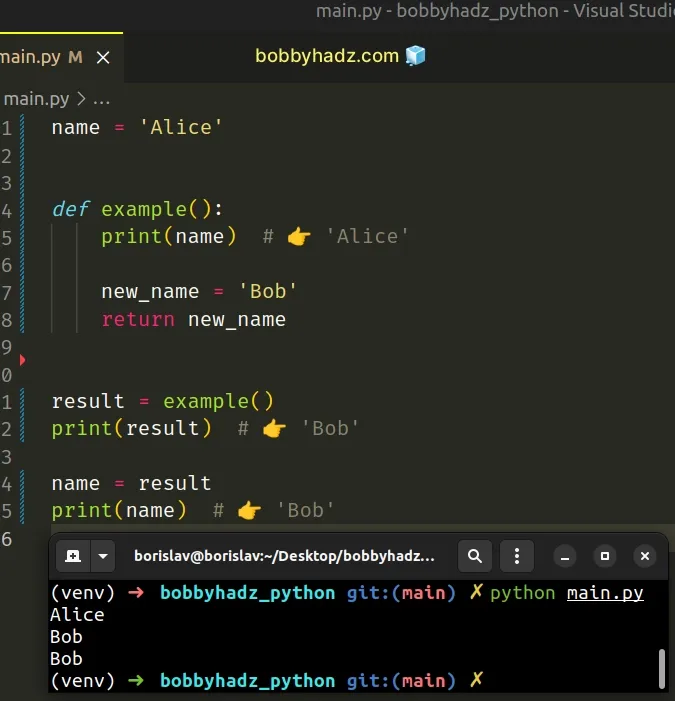

# Returning a value from the function instead

An alternative solution to using the global keyword is to return a value from the function and use the value to reassign the global variable.
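A minimal sketch of this pattern, assuming the same name and example identifiers used elsewhere in this article; the values are illustrative.

```python
name = "Alice"  # global variable (value assumed)

def example():
    print(name)   # only reads the global, which is allowed
    return "Bob"  # the new value is handed back to the caller

name = example()  # the reassignment happens in the global scope
print(name)
```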

We simply return the value that we eventually use to assign to the name global variable.

# Passing the global variable as an argument to the function

You should also consider passing the global variable as an argument to the function.
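As a sketch, the function below takes the value as a parameter. The parameter name first and the surname are assumptions made for illustration.

```python
name = "Alice"  # global variable (value assumed)

def example(first):
    # first is a parameter, so it is bound as soon as the call starts.
    full_name = first + " Smith"
    return full_name

result = example(name)
print(result)
```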

We passed the name global variable as an argument to the function.

If we assign a value to a variable in a function, the variable is assumed to be local unless explicitly declared as global .

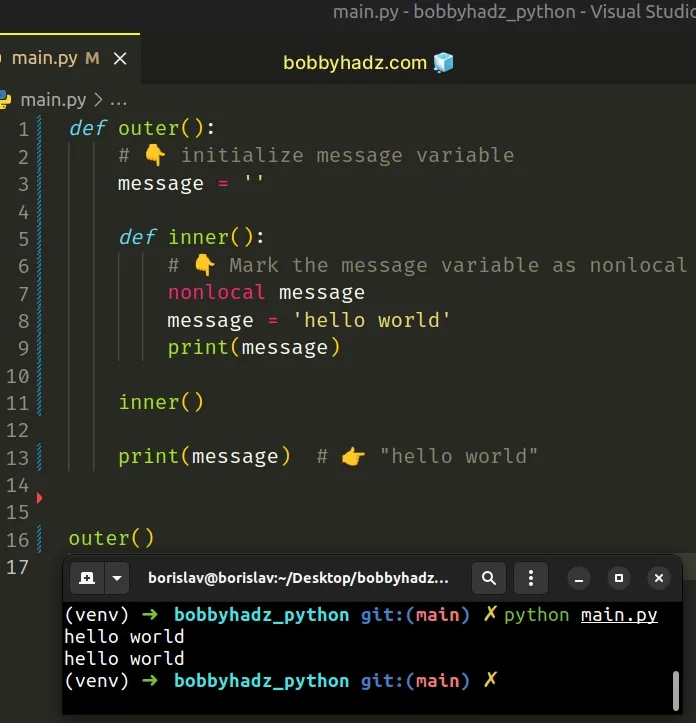

# Assigning a value to a local variable from an outer scope

If you have a nested function and are trying to assign a value to the local variables from the outer function, use the nonlocal keyword.
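The sketch below shows the nested-function case with and without nonlocal. The names message and inner come from the text; outer, outer_without_nonlocal, and the string value are assumptions. The functions return message instead of printing it so the difference is easy to check.

```python
def outer():
    message = ""

    def inner():
        nonlocal message           # refer to outer()'s message
        message = "hello from inner"

    inner()
    return message

def outer_without_nonlocal():
    message = ""

    def inner():
        message = "hello from inner"  # new local that shadows outer's

    inner()
    return message  # still the empty string

print(repr(outer()))
print(repr(outer_without_nonlocal()))
```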

The nonlocal keyword allows us to work with the local variables of enclosing functions.

Had we not used the nonlocal statement, the call to the print() function would have printed an empty string.

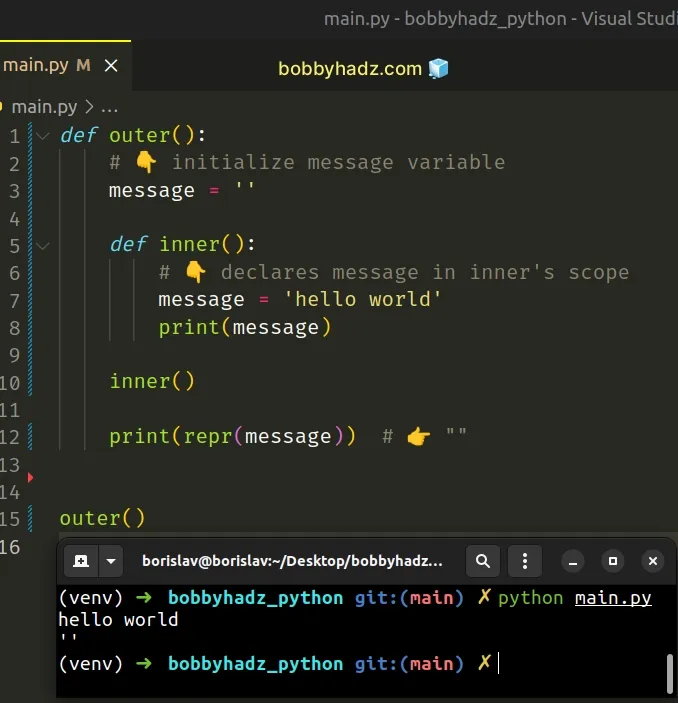

Printing the message variable on the last line of the function shows an empty string because the inner() function has its own scope.

Changing the value of the variable in the inner scope is not possible unless we use the nonlocal keyword.

Instead, the message variable in the inner function simply shadows the variable with the same name from the outer scope.

# Discussion

As shown in this section of the documentation, when you assign a value to a variable inside a function, the variable:

- Becomes local to the scope.

- Shadows any variables from the outer scope that have the same name.

The last line in the example function assigns a value to the name variable, marking it as a local variable and shadowing the name variable from the outer scope.

At the time the print(name) line runs, the name variable is not yet initialized, which causes the error.

The most intuitive way to solve the error is to use the global keyword.

The global keyword is used to indicate to Python that we are actually modifying the value of the name variable from the outer scope.

- If a variable is only referenced inside a function, it is implicitly global.

- If a variable is assigned a value inside a function's body, it is assumed to be local, unless explicitly marked as global .

If you want to read more about why this error occurs, check out this section of the docs.


Borislav Hadzhiev

Web Developer

Copyright © 2024 Borislav Hadzhiev

How to Fix Local Variable Referenced Before Assignment Error in Python


In Python, when you try to reference a variable that hasn't yet been given a value (assigned), it will throw an error.

That error will look like this:

In this post, we'll see examples of what causes this and how to fix it.

Let's begin by looking at an example of this error:
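The listing is not present in this copy, so this is a sketch built from the description that follows. The variable value is named in the text; the function name increment and the starting value are assumptions.

```python
value = 1  # defined in the first line, in the global scope (value assumed)

def increment():
    value = value + 1  # the right-hand side reads a local that has no value
    print(value)

error = None
try:
    increment()
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```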

If you run this code, you'll get

The issue is that in this line:

We are defining a local variable called value and then trying to use it before it has been assigned a value, instead of using the variable that we defined in the first line.

If we want to refer to the variable that was defined in the first line, we can make use of the global keyword.

The global keyword is used to refer to a variable that is defined outside of a function.

Let's look at how using global can fix our issue here:
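A sketch of the fix, using the variable value from the text; the function name increment and the starting value are assumptions.

```python
value = 1  # global variable (value assumed)

def increment():
    global value       # use the module-level value
    value = value + 1
    print(value)

increment()
```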

Global variables have global scope, so you can reference them anywhere in your code, thus avoiding the error.

If you run this code, you'll get this output:

In this post, we learned how to avoid the local variable referenced before assignment error in Python.

The error stems from trying to refer to a variable without an assigned value, so either make use of a global variable using the global keyword, or assign the variable a value before using it.

Thanks for reading!


How to Fix – UnboundLocalError: Local variable Referenced Before Assignment in Python

Developers often encounter the UnboundLocalError: local variable referenced before assignment error in Python. In this article, we will see what this error is and how to fix it using different approaches.

What is UnboundLocalError: Local variable Referenced Before Assignment?

This error occurs when a local variable is referenced before it has been assigned a value within a function or method. This error typically surfaces when utilizing try-except blocks to handle exceptions, creating a puzzle for developers trying to comprehend its origins and find a solution.

Below are the reasons why the UnboundLocalError: Local variable Referenced Before Assignment error occurs in Python:

- Nested function variable access

- Global variable modification

In this code, the outer_function defines a variable ‘x’ and a nested inner_function attempts to access it, but encounters an UnboundLocalError due to a local ‘x’ being defined later in the inner_function.

In this code, the function example_function tries to increment the global variable ‘x’, but encounters an UnboundLocalError since it’s treated as a local variable due to the assignment operation within the function.
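The two listings described above are missing from this copy. The sketch below reconstructs both cases under stated assumptions: the function names and x come from the text, while the numeric values and the errors list are illustrative.

```python
def outer_function():
    x = 10

    def inner_function():
        print(x)  # x is local to inner_function because of the line below
        x = 20    # this later assignment makes x local here

    inner_function()

x = 5  # global variable (value assumed)

def example_function():
    x += 1  # assignment makes x local, so the read half of += fails
    print(x)

errors = []
for func in (outer_function, example_function):
    try:
        func()
    except UnboundLocalError as exc:
        errors.append(exc)
        print(func.__name__, "->", exc)
```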

Solution for Local variable Referenced Before Assignment in Python

Below are the approaches to solve “Local variable Referenced Before Assignment”.

In this code, example_function successfully modifies the global variable ‘x’ by declaring it as global within the function, incrementing its value by 1, and then printing the updated value.

In this code, the outer_function defines a local variable ‘x’, and the inner_function accesses and modifies it as a nonlocal variable, allowing changes to the outer function’s scope from within the inner function.
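Both fixes can be sketched as follows. The function names and x come from the text; the starting values, the amount added in inner_function, and the return value of outer_function are assumptions.

```python
x = 5  # global variable (value assumed)

def example_function():
    global x  # modify the module-level x
    x += 1
    print(x)

def outer_function():
    x = 10  # local to outer_function (value assumed)

    def inner_function():
        nonlocal x  # modify outer_function's x
        x += 5
        print(x)

    inner_function()
    return x  # returned only so the change is observable

example_function()
outer_result = outer_function()
```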



scvelo.utils.merge

Merge two annotated data matrices.

Annotated data matrix (reference data set).

Annotated data matrix (to be merged into adata).

Boolean flag to manipulate original AnnData or a copy of it.

AnnData, None

Optional[anndata.AnnData] – Returns an AnnData object

Fix "local variable referenced before assignment" in Python

Introduction

If you're a Python developer, you've probably come across a variety of errors, like the "local variable referenced before assignment" error. This error can be a bit puzzling, especially for beginners and when it involves local/global variables.

Today, we'll explain this error, understand why it occurs, and see how you can fix it.

The "local variable referenced before assignment" Error

The "local variable referenced before assignment" error in Python is a common error that occurs when a local variable is referenced before it has been assigned a value in the local scope. Python reports it as an UnboundLocalError.

Here's a simple example:
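The snippet is missing from this copy. A sketch that matches the explanation (foo, x, and print(x) are named in the text; the value 1 is taken from the later fix):

```python
def foo():
    print(x)  # x is local to foo because of the assignment below
    x = 1

error = None
try:
    foo()
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```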

Running this code will throw the "local variable 'x' referenced before assignment" error. This is because the variable x is referenced in the print(x) statement before it is assigned a value in the local scope of the foo function.

Even more confusing is when it involves global variables. For example, the following code also produces the error:
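A sketch of the global-variable case. The text names x, the value "Hello ", and say_hello; the parameter name and the body of the function are assumptions about what the lost listing contained.

```python
x = "Hello "  # global variable

def say_hello(name):
    x = x + name  # x is local here, so the right-hand side reads it unbound
    print(x)

error = None
try:
    say_hello("Billy")
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```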

But wait, why does this also produce the error? Isn't x assigned before it's used in the say_hello function? The problem here is that x is a global variable when assigned "Hello ". However, in the say_hello function, it's a different local variable, which has not yet been assigned.

We'll see later in this Byte how you can fix these cases as well.

Fixing the Error: Initialization

One way to fix this error is to initialize the variable before using it. This ensures that the variable exists in the local scope before it is referenced.

Let's correct the error from our first example:
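A sketch of the corrected function, with the assignment moved ahead of the read:

```python
def foo():
    x = 1     # assign first
    print(x)  # then read

foo()
```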

In this revised code, we initialize x with a value of 1 before printing it. Now, when you run the function, it will print 1 without any errors.

Fixing the Error: Global Keyword

Another way to fix this error, depending on your specific scenario, is by using the global keyword. This is especially useful when you want to use a global variable inside a function.


Here's how:
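A sketch of the global version. The starting value 0 is an assumption inferred from the statement that the function prints 1 after incrementing.

```python
x = 0  # global variable (starting value assumed)

def foo():
    global x  # look x up in the global scope
    x += 1
    print(x)

foo()
```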

In this snippet, we declare x as a global variable inside the function foo. This tells Python to look for x in the global scope, not the local one. Now, when you run the function, it will increment the global x by 1 and print 1.

Similar Error: NameError

An error that's similar to the "local variable referenced before assignment" error is the NameError. This is raised when you try to use a variable or a function name that has not been defined yet.
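A small sketch of the NameError case, wrapped in try/except so the script keeps running. As a side note, UnboundLocalError is itself a subclass of NameError, which is why the two errors feel so similar.

```python
error = None
try:
    print(y)  # y is not defined anywhere
except NameError as exc:
    error = exc
    print("NameError:", exc)

# UnboundLocalError derives from NameError in the exception hierarchy.
print(issubclass(UnboundLocalError, NameError))
```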

Running this code will result in a NameError:

In this case, we're trying to print the value of y, but y has not been defined anywhere in the code. Hence, Python raises a NameError. This is similar in that we are trying to use an uninitialized/undefined variable, but the main difference is that we didn't try to initialize y anywhere else in our code.

Variable Scope in Python

Understanding the concept of variable scope can help avoid many common errors in Python, including the main error of interest in this Byte. But what exactly is variable scope?

In Python, variables have two types of scope - global and local. A variable declared inside a function is known as a local variable, while a variable declared outside a function is a global variable.

Consider this example:
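A sketch of the scope example. The names x, y, and my_function come from the text; the values and the return expression are assumptions.

```python
x = 10  # global: visible everywhere in this module (value assumed)

def my_function():
    y = 5         # local: visible only inside my_function (value assumed)
    return x + y  # reading the global x from inside the function is fine

print(my_function())
```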

In this code, x is a global variable, and y is a local variable. x can be accessed anywhere in the code, but y can only be accessed within my_function. Confusion surrounding this is one of the most common causes for the "variable referenced before assignment" error.

In this Byte, we've taken a look at the "local variable referenced before assignment" error and another similar error, NameError . We also delved into the concept of variable scope in Python, which is an important concept to understand to avoid these errors. If you're seeing one of these errors, check the scope of your variables and make sure they're being assigned before they're being used.


© 2013- 2024 Stack Abuse. All rights reserved.


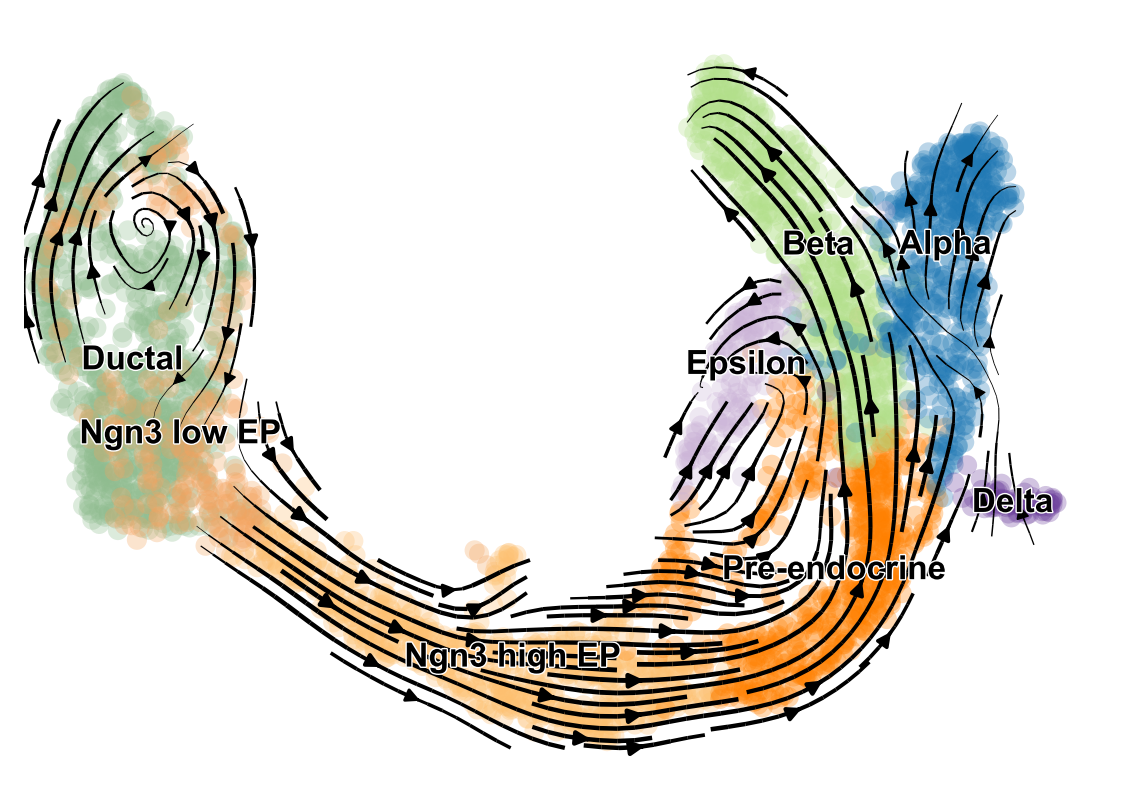

scVelo - RNA velocity generalized through dynamical modeling

Key Contributors

Philipp Weiler : lead developer since 2021, maintainer

Volker Bergen : lead developer 2018-2021, initial conception

scVelo is a scalable toolkit for RNA velocity analysis in single cells; RNA velocity enables the recovery of directed dynamic information by leveraging splicing kinetics [Manno et al., 2018]. scVelo collects different methods for inferring RNA velocity using an expectation-maximization framework [Bergen et al., 2020], deep generative modeling [Gayoso et al., 2023], or metabolically labeled transcripts [Weiler et al., 2023].

scVelo’s key applications

- estimate RNA velocity to study cellular dynamics.

- identify putative driver genes and regimes of regulatory changes.

- infer a latent time to reconstruct the temporal sequence of transcriptomic events.

- estimate reaction rates of transcription, splicing and degradation.

- use statistical tests, e.g., to detect different kinetics regimes.

Citing scVelo

If you include or rely on scVelo when publishing research, please adhere to the following citation guide:

EM and steady-state model

If you use the EM (dynamical) or steady-state model, cite

If you use veloVI (VI model), cite

RNA velocity inference through metabolic labeling information

If you use the implemented method for estimating RNA velocity from metabolic labeling information, cite

Found a bug or would like to see a feature implemented? Feel free to submit an issue. Have a question or would like to start a new discussion? Head over to GitHub discussions. Your help to improve scVelo is highly appreciated.

Python UnboundLocalError: local variable referenced before assignment

by Suf | Programming, Python, Tips

If you try to reference a local variable before assigning a value to it within the body of a function, you will encounter the UnboundLocalError: local variable referenced before assignment.

The preferable way to solve this error is to pass parameters to your function, for example:

Alternatively, you can declare the variable as global to access it while inside a function. For example,

This tutorial will go through the error in detail and how to solve it with code examples.


Scope refers to a variable being only available inside the region where it was created. A variable created inside a function belongs to the local scope of that function, and we can only use that variable inside that function.

A variable created in the main body of the Python code is a global variable and belongs to the global scope. Global variables are available within any scope, global and local.

UnboundLocalError occurs when we try to modify a variable defined as local before creating it. If we only need to read a variable within a function, we can do so without using the global keyword. Consider the following example that demonstrates a variable var created with global scope and accessed from test_func:

If we try to assign a value to var within test_func, the Python interpreter will raise the UnboundLocalError:

This error occurs because when we make an assignment to a variable in a scope, that variable becomes local to that scope and overrides any variable with the same name in the global or outer scope.

var += 1 is similar to var = var + 1, therefore the Python interpreter should first read var, perform the addition and assign the value back to var.

var is a variable local to test_func, so the variable is read or referenced before we have assigned it. As a result, the Python interpreter raises the UnboundLocalError.

Example #1: Accessing a Local Variable

Let’s look at an example where we define a global variable number. We will use the increment_func to increase the numerical value of number by 1.
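The listing did not survive in this copy. A sketch consistent with the description, where number and increment_func come from the text and the value 10 is an assumption:

```python
number = 10  # global variable (value assumed)

def increment_func():
    number += 1  # number is local here and has no value to increment
    return number

error = None
try:
    print(increment_func())
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```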

Let’s run the code to see what happens:

The error occurs because we tried to read a local variable before assigning a value to it.

We can solve this error by passing a parameter to increment_func. This solution is the preferred approach. Typically Python developers avoid declaring global variables unless they are necessary. Let’s look at the revised code:
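A sketch of the parameter-based version. The value 10 and the variable result are assumptions.

```python
def increment_func(number):
    number += 1  # number is a parameter, so it already has a value
    return number

number = 10  # value assumed
result = increment_func(number)
print(result)
```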

We have assigned a value to number and passed it to increment_func, which will resolve the UnboundLocalError. Let’s run the code to see the result:

We successfully printed the value to the console.

We can also solve this error by using the global keyword. The global statement tells the Python interpreter that inside increment_func, the variable number is a global variable even if we assign to it in increment_func. Let’s look at the revised code:
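A sketch of the global version of increment_func. The starting value 10 is an assumption.

```python
number = 10  # global variable (value assumed)

def increment_func():
    global number  # assignments below rebind the module-level number
    number += 1
    return number

print(increment_func())
```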

Let’s run the code to see the result:

Example #2: Function with if-elif statements

Let’s look at an example where we collect a score from a player of a game to rank their level of expertise. The variable we will use is called score and the calculate_level function takes in score as a parameter and returns a string containing the player’s level.
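The original function is not reproduced in this copy. The sketch below keeps the names score, level, and calculate_level and the lowest threshold of 55 from the text; the upper thresholds and the level names other than "beginner" are assumptions.

```python
def calculate_level(score):
    if score > 90:
        level = "expert"        # threshold and label assumed
    elif score > 70:
        level = "advanced"      # threshold and label assumed
    elif score > 55:
        level = "intermediate"  # label assumed
    return level  # unbound when none of the branches ran

error = None
try:
    calculate_level(40)
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```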

In the above code, we have a series of if-elif statements for assigning a string to the level variable. Let’s run the code to see what happens:

The error occurs because we input a score equal to 40. The conditional statements in the function do not account for a value below 55, therefore when we call the calculate_level function, Python will attempt to return level without any value assigned to it.

We can solve this error by completing the set of conditions with an else statement. The else statement will provide an assignment to level for all scores lower than 55. Let’s look at the revised code:
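A sketch of the version with an else branch. As before, the upper thresholds and the labels other than "beginner" are assumptions.

```python
def calculate_level(score):
    if score > 90:
        level = "expert"        # threshold and label assumed
    elif score > 70:
        level = "advanced"      # threshold and label assumed
    elif score > 55:
        level = "intermediate"  # label assumed
    else:
        level = "beginner"      # covers every remaining score
    return level

print(calculate_level(40))
```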

In the above code, all scores below 55 are given the beginner level. Let’s run the code to see what happens:

We can also create a global variable level and then use the global keyword inside calculate_level. The global statement makes assignments to level inside the function update the global variable, and the global value is used when no condition matches. Let’s look at the revised code.
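A sketch of the global variant, with the same assumed thresholds and labels as above.

```python
level = "beginner"  # global default

def calculate_level(score):
    global level  # placed at the beginning of the function
    if score > 90:
        level = "expert"        # threshold and label assumed
    elif score > 70:
        level = "advanced"      # threshold and label assumed
    elif score > 55:
        level = "intermediate"  # label assumed
    return level

result = calculate_level(40)
print(result)
```

One caveat with this design: the global keeps whatever value the last call assigned, so after a call with a high score, a later low score would return the earlier level rather than "beginner".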

In the above code, we put the global statement inside the function and at the beginning. Note that the “default” value of level is beginner and we do not include the else statement in the function. Let’s run the code to see the result:

Congratulations on reading to the end of this tutorial! The UnboundLocalError: local variable referenced before assignment occurs when you try to reference a local variable before assigning a value to it. Preferably, you can solve this error by passing parameters to your function. Alternatively, you can use the global keyword.

If you have if-elif statements in your code where you assign a value to a local variable and do not account for all outcomes, you may encounter this error. In which case, you must include an else statement to account for the missing outcome.

For further reading on Python code blocks and structure, go to the article: How to Solve Python IndentationError: unindent does not match any outer indentation level .

Go to the online courses page on Python to learn more about Python for data science and machine learning.

Have fun and happy researching!


How to Solve Error - Local Variable Referenced Before Assignment in Python



This article delves into various strategies to resolve the common local variable referenced before assignment error. By exploring methods such as checking variable scope, initializing variables before use, conditional assignments, and more, we aim to equip both novice and seasoned programmers with practical solutions.

Each method is dissected with examples, demonstrating how subtle changes in code can prevent this frequent error, enhancing the robustness and readability of your Python projects.

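Check the Variable Scope to Fix the local variable referenced before assignment Error in Python

The local variable referenced before assignment error occurs when a variable is referenced before it is assigned within a function’s body. It usually occurs when the code tries to modify a global variable from inside a function.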

The primary purpose of managing variable scope is to ensure that variables are accessible where they are needed while maintaining code modularity and preventing unexpected modifications to global variables.

We can declare the variable as global using the global keyword in Python. Once the variable is declared global, the program can access the variable within a function, and no error will occur.

The below example code demonstrates the code scenario where the program will end up with the local variable referenced before assignment error.
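The listing is missing from this copy. A sketch consistent with the description, where my_var and update_var come from the text and the values are assumptions:

```python
my_var = 10  # global variable (value assumed)

def update_var():
    print(my_var)  # my_var is local because of the assignment below
    my_var = 20    # value assumed

error = None
try:
    update_var()
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)
```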

In this example, my_var is a global variable. Inside update_var, we attempt to modify it without declaring its scope, leading to the Local Variable Referenced Before Assignment error.

We need to declare the my_var variable as global using the global keyword to resolve this error. The below example code demonstrates how the error can be resolved using the global keyword in the above code scenario.
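A sketch of the corrected function. The values 10 and 20 are assumptions.

```python
my_var = 10  # global variable (value assumed)

def update_var():
    global my_var
    print(my_var)  # shows the original global value
    my_var = 20    # updates the global, not a local (value assumed)

update_var()
print(my_var)
```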

In the corrected code, we use the global keyword to inform Python that my_var references the global variable.

When we first print my_var, it displays the original value from the global scope.

After assigning a new value to my_var, it updates the global variable, not a local one. This way, we effectively tell Python the scope of our variable, thus avoiding any conflicts between local and global variables with the same name.

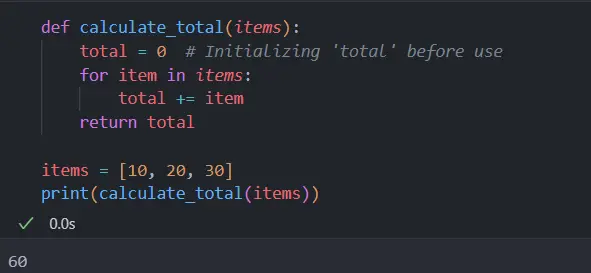

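Initialize the Variable Before Use to Fix the local variable referenced before assignment Error in Python

Ensure that the variable is initialized with some value before using it. This can be done by assigning a default value to the variable at the beginning of the function or code block.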

The main purpose of initializing variables before use is to ensure that they have a defined state before any operations are performed on them. This practice is not only crucial for avoiding the aforementioned error but also promotes writing clear and predictable code, which is essential in both simple scripts and complex applications.

In this example, the variable total is used in the function calculate_total without prior initialization, leading to the Local Variable Referenced Before Assignment error. The below example code demonstrates how the error can be resolved in the above code scenario.

In our corrected code, we initialize the variable total with 0 before using it in the loop. This ensures that when we start adding item values to total, it already has a defined state (in this case, 0).

This initialization is crucial because it provides a starting point for accumulation within the loop. Without this step, Python does not know the initial state of total, leading to the error.
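Both versions of the function can be sketched as follows. The names total and calculate_total come from the text; calculate_total_broken, the parameter items, and the sample list are assumptions.

```python
def calculate_total_broken(items):
    for item in items:
        total += item  # total has no starting value
    return total

def calculate_total(items):
    total = 0  # initialize before the loop
    for item in items:
        total += item
    return total

error = None
try:
    calculate_total_broken([1, 2, 3])
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)

print(calculate_total([1, 2, 3]))
```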

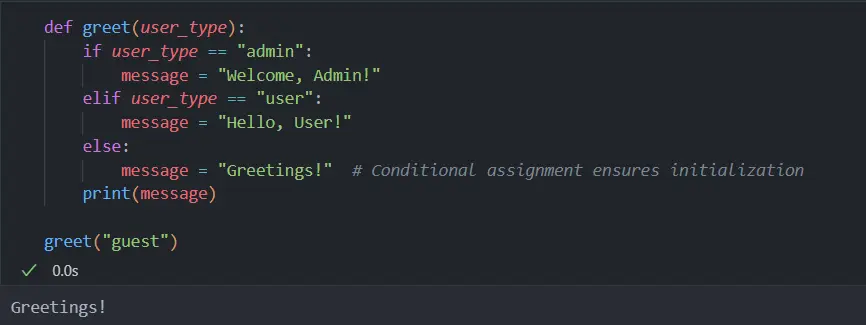

Use Conditional Assignment to Fix the local variable referenced before assignment Error in Python

Conditional assignment allows variables to be assigned values based on certain conditions or logical expressions. This method is particularly useful when a variable’s value depends on certain prerequisites or states, ensuring that a variable is always initialized before it’s used, thereby avoiding the common error.

In this example, message is only assigned within the if and elif blocks. If neither condition is met (as with guest), the variable message remains uninitialized, leading to the Local Variable Referenced Before Assignment error when trying to print it.

The below example code demonstrates how the error can be resolved in the above code scenario.
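A sketch of the failing and corrected versions. The names message and user_type and the value "guest" come from the text; the function names, the other user types, and the message strings are assumptions. The functions return message instead of printing it so the result can be checked.

```python
def build_message_broken(user_type):
    if user_type == "admin":
        message = "Welcome, admin!"
    elif user_type == "member":
        message = "Welcome back!"
    return message  # unbound when neither branch ran

def build_message(user_type):
    if user_type == "admin":
        message = "Welcome, admin!"
    elif user_type == "member":
        message = "Welcome back!"
    else:
        message = "Welcome, guest!"  # covers every other user type
    return message

error = None
try:
    build_message_broken("guest")
except UnboundLocalError as exc:
    error = exc
    print("UnboundLocalError:", exc)

print(build_message("guest"))
```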

In the revised code, we’ve included an else statement as part of our conditional logic. This guarantees that no matter what value user_type holds, the variable message will be assigned some value before it is used in the print function.

This conditional assignment ensures that the message is always initialized, thereby eliminating the possibility of encountering the Local Variable Referenced Before Assignment error.

Throughout this article, we have explored multiple approaches to address the Local Variable Referenced Before Assignment error in Python. From the nuances of variable scope to the effectiveness of initializations and conditional assignments, these strategies are instrumental in developing error-free code.

The key takeaway is the importance of understanding variable scope and initialization in Python. By applying these methods appropriately, programmers can not only resolve this specific error but also enhance the overall quality and maintainability of their code, making their programming journey smoother and more rewarding.

UnboundLocalError: local variable 'a' referenced before assignment #6

linbinbin92 commented Apr 7, 2020

| Whenever I use smogn.smoter for my data set, an UnboundLocalError pops up: ~\Anaconda3\lib\site-packages\smogn\smoter.py in smoter(data, y, k, pert, samp_method, under_samp, drop_na_col, drop_na_row, replace, rel_thres, rel_method, rel_xtrm_type, rel_coef, rel_ctrl_pts_rg) ~\Anaconda3\lib\site-packages\smogn\over_sampling.py in over_sampling(data, index, perc, pert, k) UnboundLocalError: local variable 'a' referenced before assignment. Any ideas? |

nickkunz commented Apr 8, 2020

| Thank you for opening this issue. I am not able to immediately identify what might be causing this issue. However, I will look into it further and respond back to you here. |

hsezhiyan commented Apr 11, 2020

| I'm also getting this error. Did you make any progress? |

linbinbin92 commented Apr 11, 2020

| Not yet, sorry. It seems that in the over_sampling.py file, at line 275, a is referenced and summed before it has been assigned... I couldn't really find where in the file a is initialized. |

linehammer commented Jan 11, 2021

| All variable assignments in a function store the value in the local symbol table, whereas variable references first look in the local symbol table, then in the global symbol table, and then in the table of built-in names. Thus, global variables cannot be directly assigned a value within a function (unless named in a global statement), although they may be referenced. The UnboundLocalError: local variable referenced before assignment is raised when you try to use a variable before it has been assigned in the local context. Python doesn't have variable declarations, so it has to figure out the scope of variables itself. It does so by a simple rule: if there is an assignment to a variable inside a function, that variable is considered local. |
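The rule described in this comment can be illustrated with a small sketch. All names here (limit, reads_global, assigns_in_skipped_branch, update) are hypothetical and unrelated to the smogn code. The sketch shows that a name with no assignment in the function body resolves through the global and built-in tables, while an assignment anywhere in the body makes the name local, even if that assignment is skipped at run time.

```python
limit = 3  # global name


def reads_global():
    # No assignment to `limit` in this body, so the lookup falls through
    # to the global table; `max` is found among the built-in names.
    return max(limit, 1)


def assigns_in_skipped_branch(update=False):
    # The assignment below makes `limit` local for the whole function.
    # When the branch is skipped, the local name is never bound.
    if update:
        limit = 0
    return limit


print(reads_global())
print(assigns_in_skipped_branch(update=True))

try:
    assigns_in_skipped_branch()
except UnboundLocalError as exc:
    print("raised:", exc)
```

This is a common way the error shows up inside library code: a variable is assigned only inside a loop or branch that happens not to run for a particular input.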

Umanskyt commented May 24, 2022

| Resetting the index fixed this issue for me! |

Getting error "local variable referenced before assignment" - how to fix?

I'm making a gambling program (I know this shouldn't be incredibly hard), and want to have multiple games which will be in subroutines. However, Python seems to think my variables are being assigned in strange places.

I am semi-new to subroutines, and still have some issues here and there. Here is what I am working with:

And here is how it is called:

I expect it to just go through, add a tally to wins or losses, then update the money. However, I am getting this error: UnboundLocalError: local variable 'losses' referenced before assignment. If I win, it says the same thing with local variable 'wins'.

As shown, all variables are assigned at the top, then referenced below in subroutines. I am completely unsure how Python thinks I referenced them before assignment.

I would appreciate any help, thank you in advance!

- Can you post the whole code? – Manuel Fedele Commented Mar 23, 2019 at 14:55

- pastebin.com/WkrPRPfb - check this pastebin for the whole code – xupaii Commented Mar 23, 2019 at 14:57

2 Answers

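The reason is that losses is defined as a global variable. Within a function (local scope), you can read a global variable, but assigning to that name anywhere in the function makes Python treat it as local to that function, so any read that runs before the assignment fails.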

This will work:

This won't:

You should assign to your variables within your function body if you want them to have local scope. If you explicitly want to modify global variables, you need to declare them with, for example, global losses in your function body.
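The two snippets from this answer are not preserved here, so the following is only a sketch of the distinction it draws. The name losses comes from the question; stats and the function names are hypothetical. It also shows why "loosely speaking" is an apt hedge: mutating a global object in place is not an assignment to the name, so it works without a global statement.

```python
losses = 0              # global counter (an immutable int)
stats = {"losses": 0}   # hypothetical global dict, used for contrast


def report():
    # Reading a global name works without any declaration.
    return losses


def record_loss_broken():
    # `+=` is an assignment, so `losses` becomes local to this function,
    # and the read half of `+=` has nothing to read.
    losses += 1


def record_loss_in_dict():
    # Item assignment mutates the dict object; the name `stats`
    # itself is never rebound, so no `global` statement is needed.
    stats["losses"] += 1


print(report())

try:
    record_loss_broken()
except UnboundLocalError as exc:
    print("raised:", exc)

record_loss_in_dict()
print(stats)
```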

The variables wins, money and losses were declared outside the scope of the fiftyfifty() function, so you cannot update them from inside the function unless you explicitly declare them as global variables like this:

scv.utils.merge UnboundLocalError: local variable 'id_length' referenced before assignment #1031 Closed danli349 opened this issue Mar 29, 2023 · 1 comment

But right now, it shows "local variable 'id_length' referenced before assignment". And after scv.utils.clean_obs_names(), the number is not right! @WeilerP I read related issue and the pandas is 1.5.3

adata = scv.utils.merge (adata, ldata) 👍 1. This was referenced on Jan 29, 2023. Fix core/_anndata.py:merge #990. Merged. calling scv.utils.merge generates "UnboundLocalError: local variable 'id_length' referenced before assignment" #989. Closed. WeilerP closed this as completed in #990 on Jan 29, 2023. WeilerP mentioned this issue on Feb 2 ...

File "weird.py", line 5, in main. print f(3) UnboundLocalError: local variable 'f' referenced before assignment. Python sees the f is used as a local variable in [f for f in [1, 2, 3]], and decides that it is also a local variable in f(3). You could add a global f statement: def f(x): return x. def main():

DJANGO - Local Variable Referenced Before Assignment [Form] The program takes information from a form filled out by a user. Accordingly, an email is sent using the information. ... Therefore, we have examined the local variable referenced before the assignment Exception in Python. The differences between a local and global variable ...

If a variable is assigned a value in a function's body, it is a local variable unless explicitly declared as global. # Local variables shadow global ones with the same name You could reference the global name variable from inside the function but if you assign a value to the variable in the function's body, the local variable shadows the global one.
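A minimal sketch of the shadowing behaviour described in this snippet. The function names and the values are hypothetical.

```python
name = "Alice"  # global variable


def show_global():
    # No assignment here, so `name` refers to the global.
    return name


def show_shadow():
    # Assigning in the body creates a separate local `name`
    # that shadows the global for the duration of the call.
    name = "Bob"
    return name


print(show_global())
print(show_shadow())
print(name)  # the global is unchanged by show_shadow()
```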

value = value + 1 print (value) increment() If you run this code, you'll get. BASH. UnboundLocalError: local variable 'value' referenced before assignment. The issue is that in this line: PYTHON. value = value + 1. We are defining a local variable called value and then trying to use it before it has been assigned a value, instead of using the ...

Output. Hangup (SIGHUP) Traceback (most recent call last): File "Solution.py", line 7, in <module> example_function() File "Solution.py", line 4, in example_function x += 1 # Trying to modify global variable 'x' without declaring it as global UnboundLocalError: local variable 'x' referenced before assignment Solution for Local variable Referenced Before Assignment in Python

scvelo.utils.merge(adata, ldata, copy=True) ¶. Merge two annotated data matrices. Parameters. adata : AnnData. Annotated data matrix (reference data set). ldata : AnnData. Annotated data matrix (to be merged into adata). copy : bool. Boolean flag to manipulate original AnnData or a copy of it.

Hi there, I'm trying to do an RNA velocity using scVelo, taking my Seurat object and converting to an Anndata object using my loom files. ... --> 526 clean_obs_names(adata, id_length=id_length) 527 clean_obs_names(ldata, id_length=id_length) ... (ldata.obs_names) UnboundLocalError: local variable 'id_length' referenced before assignment. I'm ...

scVelo is a scalable toolkit for RNA velocity analysis in single cells; RNA velocity enables the recovery of directed dynamic information by leveraging splicing kinetics [Manno et al., 2018]. scVelo collects different methods for inferring RNA velocity using an expectation-maximization framework [Bergen et al., 2020], deep generative modeling [Gayoso et al., 2023], or metabolically labeled ...

Expect scvelo.merge to merge cells from two scvelo AnnData objects (i.e. barcode observations). Instead, merged object has ~7 cells. Looking through source code, it appears that scvelo.merge instead subsets adata input for common observations with ldata input. ... import scvelo as scv import os import re import numpy as np import pandas as pd ...

In order for you to modify test1 while inside a function, you will need to define test1 as a global variable, for example: test1 = 0. def test_func(): global test1. test1 += 1. test_func() However, if you only need to read the global variable, you can print it without using the keyword global, like so: test1 = 0.

UnboundLocalError: local variable referenced before assignment. Example #1: Accessing a Local Variable. Solution #1: Passing Parameters to the Function. Solution #2: Use Global Keyword. Example #2: Function with if-elif statements. Solution #1: Include else statement. Solution #2: Use global keyword. Summary.

This tutorial explains the cause of, and the solution to, the Python error "local variable referenced before assignment".

I think you are using 'global' incorrectly. See the Python reference. You should declare the variable without global, and then inside the function, when you want to access the global variable, declare it with global yourvar. #!/usr/bin/python total def checkTotal(): global total total = 0

I've been looking t this for 6 hours now, and anything I try doesn't seem to work. I get a message back saying "UnboundLocalError: local variable 'Id' referenced before assignment". It's impossible because I'm assigning with: a = wks3.acell('A'+str(number)).value. So it grabs the ID number from the google spread sheet and checks if it is ...

1. The variables wins, money and losses were declared outside the scope of the fiftyfifty() function, so you cannot update them from inside the function unless you explicitly declare them as global variables like this: def fiftyfifty(bet): global wins, money, losses. chance = random.randint(0,100)