Deductive Approach (Deductive Reasoning)

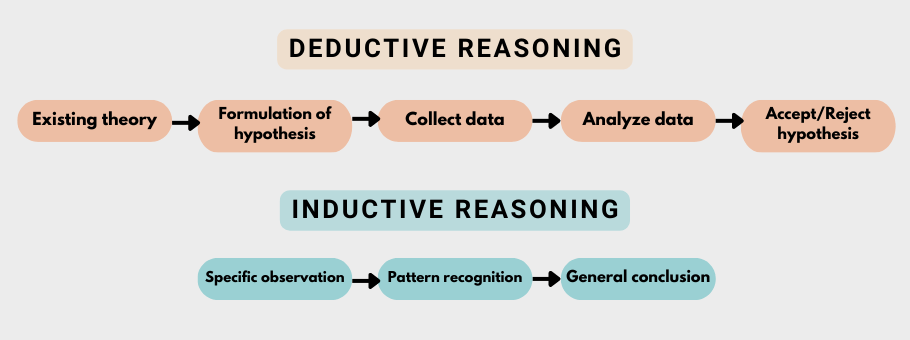

A deductive approach is concerned with “developing a hypothesis (or hypotheses) based on existing theory, and then designing a research strategy to test the hypothesis” [1]

It has been stated that “deductive means reasoning from the particular to the general. If a causal relationship or link seems to be implied by a particular theory or case example, it might be true in many cases. A deductive design might test to see if this relationship or link did obtain on more general circumstances” [2]. Note that most authors describe deduction the other way round, as reasoning from the general to the particular [5].

The deductive approach can be explained by means of hypotheses, which can be derived from the propositions of the theory. In other words, the deductive approach is concerned with deducing conclusions from premises or propositions.

Deduction begins with an expected pattern “that is tested against observations, whereas induction begins with observations and seeks to find a pattern within them” [3] .

Advantages of Deductive Approach

Deductive approach offers the following advantages:

- Possibility to explain causal relationships between concepts and variables

- Possibility to measure concepts quantitatively

- Possibility to generalize research findings to a certain extent

The alternative to the deductive approach is the inductive approach. The table below guides the choice between the two depending on circumstances:

| Criterion | Deductive | Inductive |
|---|---|---|
| Wealth of literature | Abundance of sources | Scarcity of sources |
| Time availability | Short time available to complete the study | There is no shortage of time to complete the study |
| Risk | To avoid risk | Risk is accepted, no theory may emerge at all |

Choice between deductive and inductive approaches

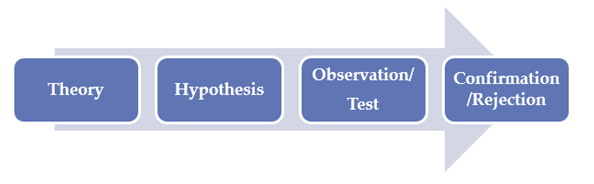

The deductive research approach explores a known theory or phenomenon and tests whether that theory is valid in given circumstances. It has been noted that “the deductive approach follows the path of logic most closely. The reasoning starts with a theory and leads to a new hypothesis. This hypothesis is put to the test by confronting it with observations that either lead to a confirmation or a rejection of the hypothesis” [4].

Moreover, deductive reasoning can be explained as “reasoning from the general to the particular” [5], whereas inductive reasoning runs in the opposite direction. In other words, the deductive approach involves formulating hypotheses and subjecting them to testing during the research process, while inductive studies do not start from hypotheses.

Application of Deductive Approach (Deductive Reasoning) in Business Research

In studies with a deductive approach, the researcher formulates a set of hypotheses at the start of the research. Relevant research methods are then chosen and applied to test the hypotheses, which are either confirmed or rejected.

Studies using the deductive approach generally follow these stages:

- Deducing a hypothesis from theory.

- Formulating the hypothesis in operational terms and proposing relationships between two specific variables.

- Testing the hypothesis with relevant method(s). These are typically quantitative methods such as regression and correlation analysis, supported by descriptive statistics such as the mean, mode and median.

- Examining the outcome of the test, and thus confirming or rejecting the theory. When analysing the outcome of tests, it is important to compare research findings with the literature review findings.

- Modifying the theory in instances when the hypothesis is not confirmed.


John Dudovskiy

[1] Wilson, J. (2010) “Essentials of Business Research: A Guide to Doing Your Research Project” SAGE Publications, p.7

[2] Gulati, P. M. (2009) “Research Management: Fundamental and Applied Research” Global India Publications, p.42

[3] Babbie, E. R. (2010) “The Practice of Social Research” Cengage Learning, p.52

[4] Snieder, R. & Larner, K. (2009) “The Art of Being a Scientist: A Guide for Graduate Students and their Mentors”, Cambridge University Press, p.16

[5] Pelissier, R. (2008) “Business Research Made Easy” Juta & Co., p.3



Inductive Vs Deductive Research


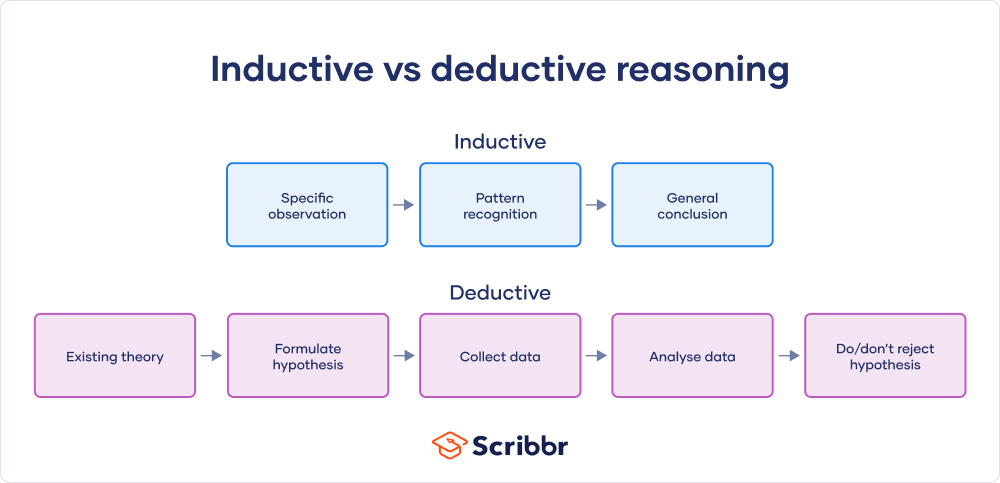

Inductive and deductive research are two contrasting approaches used in research to develop and test theories.

Inductive Research

- Definition: Inductive research starts with specific observations or real examples of events, trends, or social processes. From these observations, researchers identify patterns and develop broader generalizations or theories.

- Observation: Begin with detailed observations of the world.

- Pattern Recognition: Identify patterns or regularities in these observations.

- Theory Formation: Develop theories or hypotheses based on the identified patterns.

- Conclusion: Make generalizations that can be applied to broader contexts.

- Example: A researcher observes that students who study in groups tend to perform better on exams. From this pattern, they might develop a theory that group study is more effective than studying alone.

Deductive Research

- Definition: Deductive research starts with a theory or hypothesis and then designs a research strategy to test this hypothesis. It moves from the general to the specific.

- Theory: Begin with an existing theory or hypothesis.

- Hypothesis Development: Formulate a hypothesis based on the theory.

- Data Collection: Collect data to test the hypothesis.

- Analysis: Analyze the data to determine whether it supports or refutes the hypothesis.

- Conclusion: Draw conclusions that confirm or challenge the initial theory.

- Example: A researcher starts with the hypothesis that “students who study for more than 3 hours a day perform better on exams.” They then collect data to see if this hypothesis holds true.

Key Differences

- Inductive: Moves from specific observations to broader generalizations (bottom-up approach).

- Deductive: Moves from a general theory to specific observations or experiments (top-down approach).

- Inductive: Theories are developed based on observed patterns.

- Deductive: Theories are tested through empirical observation.

- Inductive: Useful in exploring new phenomena or generating new theories.

- Deductive: Effective for testing existing theories or hypotheses.

Both inductive and deductive research approaches are crucial in the development and testing of theories. The choice between them depends on the research goal: inductive for exploring and generating new theories, and deductive for testing existing ones.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


What is Deductive Research? Meaning, Stages & Examples

Introduction

Deductive research is a scientific approach that is used to test a theory or hypothesis through observations and empirical evidence. This research method is commonly used in disciplines such as physics, biology, psychology, and sociology, among others. In this article, we will explore the meaning of deductive research, its components, and some examples of its application.

What is Deductive Research?

Deductive research starts with a theory, forms a hypothesis, and tests it through observation and evidence. In other words, it involves starting with a general theory and then making a specific prediction based on that theory. This prediction is called a hypothesis, and it is tested through observations and data analysis. Deductive research aims to support or disprove theories with evidence, advancing scientific knowledge through experimentation and analysis.


Components of Deductive Research

Deductive research is composed of several components, which are as follows:

- Theory Development

The first step in deductive research is to develop a theory that explains a particular phenomenon. This theory is based on existing research and knowledge, and it provides a general framework for understanding the phenomenon.

- Hypothesis Formulation

Next, the researcher formulates a hypothesis based on the theory. The hypothesis is a verifiable prediction that tests the theory's validity: a statement that can be confirmed or refuted through careful observation and thorough data analysis.

- Data Collection

To test the hypothesis, the researcher must actively collect data. Depending on the nature of the phenomenon being studied, methods such as surveys, experiments, or observations are used.

- Data Analysis

The fourth step in deductive research is to analyze the data that have been collected. This involves using statistical methods to determine whether the data support the hypothesis. If they do, this provides evidence in favor of the theory. If the data do not support the hypothesis, this indicates that the theory may need to be revised or rejected.

- Conclusion

Finally, the researcher draws a conclusion that either supports or rejects the hypothesis. Depending on the results, researchers may need to revise the theory or conduct further research to confirm their findings.
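The data-analysis step above comes down to a decision rule: compute how surprising the data would be if the hypothesis were false, and compare that with a threshold. As a hedged illustration, the sketch below uses a permutation test for a difference in group means. This technique is our choice; the text does not name it. The group scores and the 5% significance level are assumptions.

```python
import random
from statistics import mean

def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """One-sided permutation test of H1: mean(group_a) > mean(group_b).

    Returns the observed difference in means and the estimated p-value: the share
    of random relabellings whose difference is at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            extreme += 1
    # The +1 terms count the observed labelling itself and keep the estimate above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Hypothetical outcome scores for a treatment group and a comparison group.
treatment = [78, 85, 81, 90, 88, 84, 79, 86]
control = [72, 75, 80, 70, 74, 77, 73, 76]

alpha = 0.05  # assumed significance level
diff, p = permutation_test(treatment, control)
verdict = "supported" if p < alpha else "not supported"
print(f"difference = {diff:.2f}, p = {p:.4f}, hypothesis {verdict}")
```

A small p-value means that random relabelling rarely produces a difference as large as the one observed. The data then count as support for the hypothesis, not as proof of it.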

What are the Main Characteristics of Deductive Research?

Deductive research starts with a theory or hypothesis, followed by experiments or observations that test whether it holds.

Another important characteristic of deductive research is that it uses objective and empirical methods to gather and analyze data. This means that researchers rely on measurable data and observations to test their hypotheses, rather than relying on personal opinions or subjective judgments.

Deductive research uses deductive reasoning. It starts with a theory, makes predictions, and tests them through observation or experimentation.


Stages of the Deductive Research Process

The main stages of the deductive research process include theory development, hypothesis formulation, data collection, data analysis, and conclusion. Researchers start by identifying a problem or question, reviewing the literature, and building a theoretical framework. They formulate a hypothesis and collect and analyze data to test it. They draw a conclusion based on whether the data supports the hypothesis or not.

Difference between Deductive and Inductive Research

Deductive and inductive research are two different approaches to scientific research. Deductive research is a top-down approach: researchers begin with a theory or hypothesis and collect data to test it. Inductive research, by contrast, is a bottom-up approach: it starts with observations and builds a theory or hypothesis from them.

One of the main differences between the two approaches is the order in which they proceed. Deductive research begins with a theory, then tests it with experiments. Inductive research starts with data, then forms a theory.

Another difference is the degree of certainty associated with the results. Deductive research aims to support or reject a specific hypothesis, so its results tend to be more definitive. Inductive research generates theories from observations, so its outcomes are more tentative and open to revision.

To choose between deductive and inductive research, consider the research question and the available resources. Deductive research suits questions where an existing theory yields testable predictions, for example in experiments, while inductive research suits exploring novel or complex systems.

Steps in Deductive Research

A. Formulating a research question: This involves identifying a research question based on existing knowledge and a literature review.

B. Developing a hypothesis: Develop a specific hypothesis or set of hypotheses based on the research question and relevant theories.

C. Designing the research: Plan the research design including sampling, data collection methods, and research instruments.

- Sampling: Identify the population and sampling technique to be used in the study.

- Data collection methods: Choose appropriate data collection methods such as surveys, experiments, or observations.

- Research instruments: Develop or select appropriate research instruments such as questionnaires or tests.

D. Analyzing the data: Collect and analyze the data using appropriate statistical techniques to test the hypothesis.

E. Drawing conclusions: Based on the analysis of the data, draw conclusions that either support or reject the hypothesis or suggest the need for further research.
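The sampling decision in step C can be made concrete with a small sketch. It assumes proportional stratified random sampling, which is only one of several possible techniques. The sampling frame (500 hypothetical employees in two departments) and the sample size of 50 are placeholders.

```python
import random

def stratified_sample(frame, stratum_of, sample_size, seed=42):
    """Proportional stratified random sample.

    frame: list of population units; stratum_of: function mapping a unit to its stratum.
    Each stratum contributes units in proportion to its share of the population.
    Shares are rounded, so the total can differ from sample_size by a unit or two.
    """
    rng = random.Random(seed)
    strata = {}
    for unit in frame:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for units in strata.values():
        k = round(sample_size * len(units) / len(frame))
        sample.extend(rng.sample(units, k))  # simple random sample within the stratum
    return sample

# Hypothetical sampling frame: 500 employees, 300 in "sales" and 200 in "operations".
frame = [("E%03d" % i, "sales" if i < 300 else "operations") for i in range(500)]
sample = stratified_sample(frame, stratum_of=lambda unit: unit[1], sample_size=50)
print(len(sample), sum(1 for _, dept in sample if dept == "sales"))  # 50 30
```

Stratifying keeps each department's share in the sample equal to its share in the population. A plain simple random sample (`random.sample(frame, 50)`) would only achieve that on average.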

Examples of Deductive Research

- The study tests the hypothesis that “exposure to violent video games increases aggressive behavior in children.”

In this study, the researcher would formulate the research question based on existing literature and knowledge. They would develop a hypothesis by proposing that exposure to violent video games increases aggressive behavior in children.

The researcher would first select a group of children, decide on the method of collecting data (such as surveys or observations), and create research tools (like a questionnaire) to study the potential impact of violent video games on aggressive behavior.

The researcher would then collect and analyze the data, using appropriate statistical techniques to test the hypothesis. If the results support the hypothesis, the researcher would conclude that exposure to violent video games increases aggressive behavior in children. If they do not, the researcher would conclude that the study found no significant relationship between exposure to violent video games and aggressive behavior.
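For the video game example, one plausible analysis compares mean aggression scores of exposed and unexposed children. The sketch below assumes Welch's t-test, which does not require equal group variances. The scores are invented, and the function name `welch_t` is hypothetical.

```python
import math
from statistics import mean, variance

def welch_t(group_a, group_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent groups."""
    var_a, var_b = variance(group_a), variance(group_b)  # sample variances (n - 1 denominator)
    se_a, se_b = var_a / len(group_a), var_b / len(group_b)  # squared standard errors
    t = (mean(group_a) - mean(group_b)) / math.sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (
        se_a ** 2 / (len(group_a) - 1) + se_b ** 2 / (len(group_b) - 1)
    )
    return t, df

# Hypothetical aggression scores from an observation checklist.
exposed = [12, 15, 14, 10, 13, 16, 11, 14]
unexposed = [9, 11, 10, 12, 8, 10, 11, 9]

t, df = welch_t(exposed, unexposed)
print(f"t = {t:.2f}, df = {df:.1f}")
```

The statistic is then compared against a t distribution with `df` degrees of freedom. With SciPy installed, `scipy.stats.ttest_ind(exposed, unexposed, equal_var=False)` performs the same Welch test and also returns a p-value.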

- A test of the hypothesis that “employees who work in a positive work environment have higher levels of job satisfaction compared to employees who work in a negative work environment.”

In this study, the researcher would formulate the research question based on existing literature and knowledge. They would then develop the hypothesis that a positive work environment is strongly correlated with higher levels of job satisfaction.

Next, the researcher would design the study, including selecting a sample of employees, determining the data collection method (e.g., survey, observation), and developing the research instruments (e.g., a questionnaire to assess the work environment and job satisfaction).

The researcher would then collect and analyze the data using appropriate statistical techniques. If the results support the hypothesis, the researcher would conclude that working in a positive work environment is associated with higher levels of job satisfaction. If they do not, the researcher would conclude that the study found no significant relationship between work environment and job satisfaction.
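Before any test can run, the questionnaire in this example has to be turned into one number per respondent. A common approach, assumed here, averages Likert-scale answers after reverse-coding negatively worded items. The four item IDs, the 5-point scale and the answers are hypothetical.

```python
def scale_score(responses, reverse_items, points=5):
    """Average one respondent's Likert answers (1..points), reverse-coding negative items.

    responses: dict mapping item id -> answer; reverse_items: set of item ids to reverse-code.
    A reverse-coded answer a becomes (points + 1 - a), so 1 <-> 5 and 2 <-> 4 on a 5-point scale.
    """
    adjusted = [
        (points + 1 - answer) if item in reverse_items else answer
        for item, answer in responses.items()
    ]
    return sum(adjusted) / len(adjusted)

# Hypothetical 4-item job-satisfaction scale; item "js3" is negatively worded.
respondent = {"js1": 4, "js2": 5, "js3": 2, "js4": 4}
print(scale_score(respondent, reverse_items={"js3"}))  # 4.25
```

Scale scores like these would then be compared between employees in positive and negative work environments with a suitable statistical test.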

- Testing the hypothesis that “increasing the amount of exercise a person does leads to a decrease in body weight.”

In this study, the researcher would formulate the research question based on existing literature and knowledge. They would then develop a hypothesis, in this case, the hypothesis that increasing exercise leads to a decrease in body weight.

Next, the researcher would design the study, including selecting a sample of participants, determining the data collection method (e.g., survey, observation), and developing the research instruments (e.g., a questionnaire to assess exercise habits and body weight).

The researcher would then collect and analyze the data, using appropriate statistical techniques to test the hypothesis. If the results of the analysis support the hypothesis, the researcher would conclude that increasing exercise leads to a decrease in body weight. If they do not, the researcher would conclude that the study found no significant relationship between exercise and body weight.
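The exercise example could also be run as a before-and-after design, in which the same participants increase their exercise and are weighed twice. That design is an assumption; the text above describes a survey. In that case a paired t-test on the weight changes fits. The weights are invented. The one-sided 5% critical value 2.015 (for 5 degrees of freedom) is supplied by hand.

```python
import math
from statistics import mean, stdev

def paired_t(before, after):
    """Paired t statistic for H1: body weight decreases (before - after > 0 on average)."""
    diffs = [b - a for b, a in zip(before, after)]  # weight lost by each participant
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return mean(diffs), t, n - 1

# Hypothetical body weights (kg) of six participants before and after increasing exercise.
before = [82.0, 95.5, 77.2, 88.4, 101.3, 69.8]
after = [80.1, 93.0, 77.5, 86.0, 98.7, 69.1]

mean_change, t_stat, df = paired_t(before, after)
# Assumed threshold: one-sided 5% critical value of t with 5 degrees of freedom.
supported = t_stat > 2.015
print(f"mean loss = {mean_change:.2f} kg, t = {t_stat:.2f}, df = {df}, supported: {supported}")
```

Pairing each participant with themselves removes between-person differences in body weight, which is why this design can detect small changes with few participants.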

- A study tests the hypothesis that “students who attend schools with a higher teacher-to-student ratio have higher levels of academic achievement compared to students who attend schools with a lower teacher-to-student ratio.”

In this study, the researcher would formulate the research question based on existing literature and knowledge. They would formulate a hypothesis that a higher teacher-to-student ratio correlates with greater academic achievement.

The researcher would design the study, including selecting a sample of students, determining the data collection method (e.g., observation, survey), and developing the research instruments (e.g., a questionnaire to assess teacher-to-student ratio and academic achievement).

The researcher would then collect and analyze the data using appropriate statistical techniques. If the results support the hypothesis, the researcher would conclude that a higher teacher-to-student ratio is associated with higher levels of academic achievement. If they do not, the researcher would conclude that the study found no significant relationship between teacher-to-student ratio and academic achievement.

- A hypothesis test on “smoking is a risk factor for lung cancer.”

In this study, the researcher would formulate the research question based on existing literature and knowledge. They would then develop a hypothesis, in this case, the hypothesis that smoking is a risk factor for lung cancer.

Then, the researcher would design the study, including selecting a sample of participants, determining the data collection method (e.g., medical records, interviews), and developing the research instruments (e.g., a questionnaire to assess smoking status and lung cancer diagnosis).

After collecting the data and analyzing them with appropriate statistical techniques, the researcher would conclude that smoking is a risk factor for lung cancer if the results support the hypothesis. If they do not, the researcher would conclude that the study found no significant relationship between smoking and lung cancer.
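Risk-factor hypotheses like the smoking example are often summarised in a 2x2 table of exposure against outcome. The sketch below assumes a cohort design with invented counts. It computes the relative risk and Pearson's chi-square statistic (without continuity correction) and compares the statistic with the 5% critical value 3.841 for 1 degree of freedom. A case-control design built from medical records would normally report an odds ratio instead.

```python
def two_by_two(a, b, c, d):
    """Relative risk and Pearson chi-square (no continuity correction) for a 2x2 table.

    Rows: exposed (a = cases, b = non-cases) and unexposed (c = cases, d = non-cases).
    """
    risk_exposed = a / (a + b)
    risk_unexposed = c / (c + d)
    relative_risk = risk_exposed / risk_unexposed
    n = a + b + c + d
    chi_square = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return relative_risk, chi_square

# Hypothetical cohort: 1,000 smokers and 2,000 non-smokers followed up for lung cancer.
rr, chi2 = two_by_two(a=30, b=970, c=5, d=1995)
# Assumed threshold: 5% critical value of the chi-square distribution with 1 degree of freedom.
print(f"relative risk = {rr:.1f}, chi-square = {chi2:.1f}, significant: {chi2 > 3.841}")
```

A relative risk of 12 would mean that smokers in this invented cohort developed lung cancer twelve times as often as non-smokers. The chi-square test checks whether a difference of that size is unlikely to be due to chance alone.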


Advantages of Deductive Research

- Clearly defined research question: Deductive research starts with a clearly defined research question, which helps to keep the study focused and targeted.

- Testable hypotheses: The use of hypotheses in deductive research allows for the testing of specific predictions, which can produce more reliable and valid results.

- Structured approach: Deductive research is a structured approach that uses a logical and systematic process to test hypotheses, making it easier to replicate and build upon previous research.

- Clear conclusions: By analyzing data against specific hypotheses, deductive research produces clear and concise conclusions that can inform policy decisions and guide future research.

- Efficiency: Deductive research can be more efficient in terms of time and resources since it focuses on testing specific hypotheses rather than exploring unknown phenomena.

Limitations of Deductive Research

- Limited scope: Deductive research may have a limited scope since it starts with a specific hypothesis and may miss other relevant factors that could impact the research question.

- Biases: The use of hypotheses in deductive research can lead to confirmation biases, where researchers may only look for evidence that supports their hypothesis while ignoring evidence that contradicts it.

- Limited generalizability: Since deductive research is based on specific hypotheses and tests, the results may only be generalizable to a specific population, time, or setting.

- Lack of flexibility: Deductive research is a structured approach, which may limit the researcher’s ability to explore new and unexpected findings that arise during the study.

- Reductionism: Deductive research can be reductionistic since it often breaks down complex phenomena into smaller, more manageable parts, potentially overlooking the complex interrelationships among different factors.

In conclusion, deductive research involves a systematic process of identifying a research question, formulating a hypothesis, designing and implementing a research plan, collecting and analyzing data, and drawing conclusions based on the results. By following this process, researchers can gain insights into the relationships between variables and develop a deeper understanding of the phenomenon they are studying.


Inductive vs Deductive Reasoning | Difference & Examples

Published on 4 May 2022 by Raimo Streefkerk. Revised on 10 October 2022.

The main difference between inductive and deductive reasoning is that inductive reasoning aims at developing a theory while deductive reasoning aims at testing an existing theory.

Inductive reasoning moves from specific observations to broad generalisations, and deductive reasoning the other way around.

Both approaches are used in various types of research, and it’s not uncommon to combine them in one large study.


Inductive research approach

When there is little to no existing literature on a topic, it is common to perform inductive research because there is no theory to test. The inductive approach consists of three stages:

| Stage | Example 1 | Example 2 | Example 3 |
|---|---|---|---|
| 1. Observation | A low-cost airline flight is delayed | Dogs A and B have fleas | Elephants depend on water to exist |
| 2. Observe a pattern | Another 20 flights from low-cost airlines are delayed | All observed dogs have fleas | All observed animals depend on water to exist |
| 3. Develop a theory | Low-cost airlines always have delays | All dogs have fleas | All biological life depends on water to exist |

Limitations of an inductive approach

A conclusion drawn on the basis of an inductive method can never be proven, but it can be invalidated.

Example: You observe 1,000 flights from low-cost airlines. All of them experience a delay, which is in line with your theory. However, you can never prove that flight 1,001 will also be delayed. Still, the larger your dataset, the more reliable the conclusion.


Deductive research approach

When conducting deductive research, you always start with a theory (the result of inductive research). Reasoning deductively means testing these theories. If there is no theory yet, you cannot conduct deductive research.

The deductive research approach consists of four stages:

| Stage | Example 1 | Example 2 | Example 3 |
|---|---|---|---|
| 1. Formulate a hypothesis | If passengers fly with a low-cost airline, then they will always experience delays | All pet dogs in my apartment building have fleas | All land mammals depend on water to exist |
| 2. Collect data | Collect flight data of low-cost airlines | Test all dogs in the building for fleas | Study all land mammal species to see if they depend on water |
| 3. Analyse the results | 5 out of 100 flights of low-cost airlines are not delayed | 10 out of 20 dogs didn’t have fleas | All land mammal species depend on water |
| 4. Reject or support the hypothesis | Reject hypothesis | Reject hypothesis | Support hypothesis |
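Each hypothesis in these examples is a universal claim ("always", "all"), so a single counterexample is enough to reject it. The sketch below encodes the reported results as lists of booleans. The flight and dog counts come from the examples. The number of land mammal species is a hypothetical placeholder, because the example states only that all of them depend on water.

```python
def evaluate_universal_hypothesis(observations):
    """Evaluate an "all X are Y" hypothesis against recorded cases.

    observations: list of booleans, True when a case fits the hypothesis.
    One counterexample is enough to reject a universal claim. If none is found, the
    hypothesis is supported, but only provisionally, since unobserved cases may differ.
    """
    counterexamples = observations.count(False)
    verdict = "reject hypothesis" if counterexamples else "support hypothesis"
    return verdict, counterexamples

flights_delayed = [True] * 95 + [False] * 5    # 5 out of 100 flights were not delayed
dogs_with_fleas = [True] * 10 + [False] * 10   # 10 out of 20 dogs did not have fleas
mammals_need_water = [True] * 12               # hypothetical count: every species studied needs water

for name, data in [("flights", flights_delayed), ("dogs", dogs_with_fleas),
                   ("land mammals", mammals_need_water)]:
    print(name, evaluate_universal_hypothesis(data))
```

The asymmetry is the point. The counterexample count decides rejection, while no number of confirming cases turns "support" into proof.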

Limitations of a deductive approach

The conclusions of deductive reasoning can only be true if all the premises set in the inductive study are true and the terms are clear.

- All dogs have fleas (premise)

- Benno is a dog (premise)

- Benno has fleas (conclusion)
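The Benno syllogism combines universal instantiation with modus ponens, and its validity can be checked mechanically. As a hedged illustration in Lean 4, the type `Animal` and the predicates `Dog` and `HasFleas` are names chosen for this example. The proof shows only that the conclusion follows from the premises. It says nothing about whether "all dogs have fleas" is true, which is exactly the limitation described above.

```lean
-- Premise 1: all dogs have fleas. Premise 2: Benno is a dog. Conclusion: Benno has fleas.
example (Animal : Type) (Dog HasFleas : Animal → Prop) (benno : Animal)
    (allDogsHaveFleas : ∀ x, Dog x → HasFleas x)
    (bennoIsDog : Dog benno) : HasFleas benno :=
  -- Instantiate the universal premise at Benno, then apply it to "Benno is a dog".
  allDogsHaveFleas benno bennoIsDog
```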

Combining inductive and deductive research

Many scientists conducting a larger research project begin with an inductive study (developing a theory). The inductive study is followed up with deductive research to confirm or invalidate the conclusion.

In the examples above, the conclusion (theory) of the inductive study is also used as a starting point for the deductive study.

Frequently asked questions about inductive vs deductive reasoning

Inductive reasoning is a bottom-up approach, while deductive reasoning is top-down.

Inductive reasoning takes you from the specific to the general, while in deductive reasoning, you make inferences by going from general premises to specific conclusions.

Inductive reasoning is a method of drawing conclusions by going from the specific to the general. It’s usually contrasted with deductive reasoning, where you proceed from general information to specific conclusions.

Inductive reasoning is also called inductive logic or bottom-up reasoning.

Deductive reasoning is a logical approach where you progress from general ideas to specific conclusions. It’s often contrasted with inductive reasoning, where you start with specific observations and form general conclusions.

Deductive reasoning is also called deductive logic.

Cite this Scribbr article


Streefkerk, R. (2022, October 10). Inductive vs Deductive Reasoning | Difference & Examples. Scribbr. Retrieved 15 October 2024, from https://www.scribbr.co.uk/research-methods/inductive-vs-deductive-reasoning/


Deductive / Quantitative research approach

What is quantitative research approach?

The deductive or quantitative research approach is concerned with situations in which data can be analyzed in terms of numbers. The researcher primarily uses postpositivist claims for developing knowledge (i.e., cause-and-effect thinking, reduction to specific variables, hypotheses and questions, and the use of measurement and observation), employs strategies of inquiry such as experiments and questionnaires, and collects data via predetermined instruments that yield statistical data. Its results are more readily analyzed and interpreted. The most common quantitative research methods are experiments and questionnaires.


Inductive and Deductive Reasoning – Examples & Limitations

Published by Alvin Nicolas on August 14th, 2021. Revised on October 26, 2023.

“Deductive reasoning is the procedure for utilising the given information. On the other hand, Inductive reasoning is the procedure of achieving it.” Henry Mayhew. Inductive and deductive reasoning take into account assumptions and incidents. Here is all you need to know about inductive vs deductive reasoning.

Deductive reasoning begins with an assumption and moves from general premises to a specific conclusion. Inductive reasoning, on the other hand, begins with observations and moves from specific incidents to a generalised conclusion.

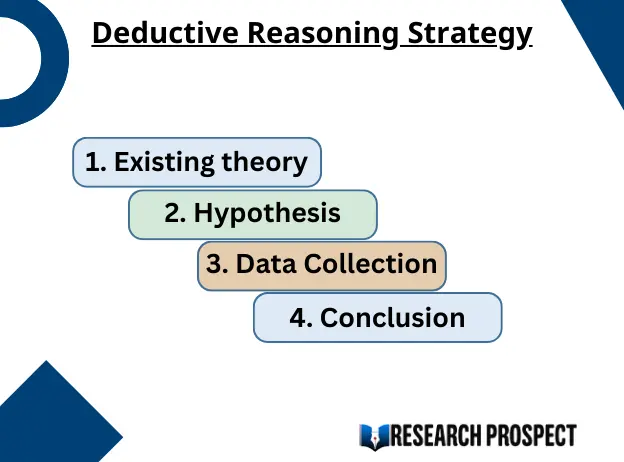

Deductive Reasoning Strategy

The deductive reasoning method starts with a theory-driven hypothesis that directs data collection and investigation.

If there is an existing theory to explain a specific topic, you form a hypothesis based on that theory that guides data collection and analysis. You might design a particular survey to collect information about a set of variables in your hypothesis which you then evaluate.

1. Existing theory

- All mangoes are fruits (general statement)

- All dancers know how to sing. (general statement)

2. Hypothesis

- All fruits have seeds (general statement)

- Seema knows how to sing (specific statement)

3. Data Collection

- Collect different types of fruits to see if they have seeds.

- Collect information about singers and dancers to find out how many of them can both dance and sing.

4. Conclusion

- Mangoes have seeds (specific conclusion/confirmation)

- Hence, Seema is a dancer. (Rejection: this does not follow, because the premise says only that dancers sing, not that singers dance.)
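The Seema conclusion is rejected because it affirms the consequent. One way to show that an inference is invalid is to build a small model in which every premise is true and the conclusion is false. The sketch below does this with Python sets. The names other than Seema are hypothetical.

```python
# Premise from the example: all dancers know how to sing (dancers is a subset of singers).
dancers = {"Asha", "Ravi"}           # hypothetical people
singers = {"Asha", "Ravi", "Seema"}  # Seema sings but does not dance

premise_holds = dancers <= singers   # True: every dancer is also a singer
seema_sings = "Seema" in singers     # True: the observed fact about Seema
seema_dances = "Seema" in dancers    # False: the "conclusion" fails in this model

print(premise_holds, seema_sings, seema_dances)  # True True False
```

A single model like this is enough to show that "all dancers sing" and "Seema sings" do not guarantee "Seema is a dancer".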

Limitations of Deductive Reasoning

Deductive reasoning considers no evidence beyond the given premises: the conclusion follows from them by logic alone. The premises therefore need to be true for the conclusion about the theory to be true.

- All mangoes are fruits. (Premise)

- All fruits have seeds. (Premise)

- Mangoes have seeds. (Conclusion)

In the example above, the third statement follows from the first and second premises.
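The mango example chains two universal premises into a universal conclusion. In this hedged Lean 4 sketch, `Thing`, `Mango`, `Fruit` and `HasSeeds` are illustrative names. As with any deduction, the proof confirms only that the form is valid. The premise "all fruits have seeds" still has to be true in the world, and seedless grape varieties show that it is not.

```lean
-- Premise 1: all mangoes are fruits. Premise 2: all fruits have seeds.
-- Conclusion: all mangoes have seeds, obtained by chaining the two implications.
example (Thing : Type) (Mango Fruit HasSeeds : Thing → Prop)
    (mangoesAreFruits : ∀ x, Mango x → Fruit x)
    (fruitsHaveSeeds : ∀ x, Fruit x → HasSeeds x) :
    ∀ x, Mango x → HasSeeds x :=
  fun x isMango => fruitsHaveSeeds x (mangoesAreFruits x isMango)
```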


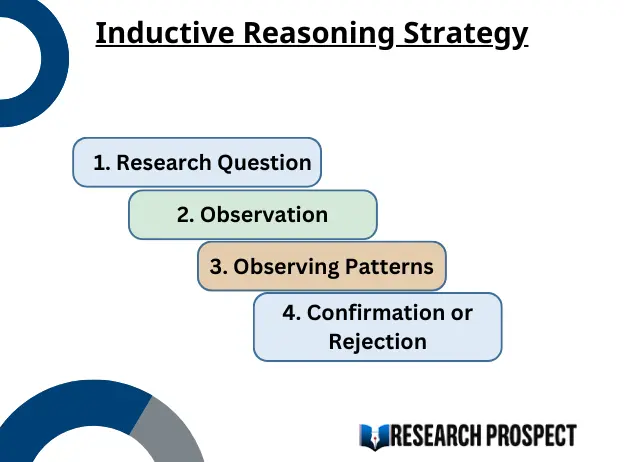

Inductive Reasoning Strategy

The inductive reasoning method starts with a research-based question and data collection to develop a hypothesis and theory.

If you have a research question that is a testable concept, you proceed with data collection about the connection between two or more observations. Based on those observations, you form a hypothesis which you use to evaluate and develop a theory.

1. Research Question

- Does regular exercise promote maximum weight loss?

- Are all cats brown?

2. Observation

- Regular exercise enables maximum weight loss.

- All cats in this area are brown.

3. Observing Patterns

- You might begin by collecting relevant data from a group of individuals, trying to understand their views and experiences by conducting surveys and interviews.

- You can examine cats from different areas to find out whether all of them are brown.

4. Confirmation or Rejection

- Regular exercise promotes maximum weight loss if it is incorporated with a balanced diet and a healthy lifestyle.

- All cats in this particular area are brown, but not all cats are brown.
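The cat example shows how a generalisation from one area can fail once more observations arrive. The following minimal sketch uses invented observations (the areas and colours are hypothetical): a universal claim such as "all cats are brown" survives only as long as no counterexample is observed.

```python
# Hypothetical observations: colour of each cat seen, grouped by area.
observations = {
    "area_a": ["brown", "brown", "brown"],
    "area_b": ["brown", "black", "white"],
}

def all_brown(colours: list[str]) -> bool:
    """Inductive generalisation under test: every observed cat is brown."""
    return all(c == "brown" for c in colours)

# Generalising from area A alone suggests "all cats are brown"...
print(all_brown(observations["area_a"]))  # True

# ...but pooling observations from more areas turns up counterexamples.
all_cats = [c for colours in observations.values() for c in colours]
print(all_brown(all_cats))  # False
counterexamples = [c for c in all_cats if c != "brown"]
print(counterexamples)  # ['black', 'white']
```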

Limitations of Inductive Reasoning

Inductive conclusions may seem plausible yet may or may not be true in reality: even when every observation is accurate, the generalisation drawn from them can turn out to be wrong.

Suppose you pick two fruits from a basket, find that both are raw, and conclude that all the fruits in the basket are raw. The conclusion might appear true, but it does not follow logically, because the basket might also contain ripe fruits.
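The fruit-basket problem can be quantified. Assuming a hypothetical basket of 6 raw and 4 ripe fruits, and picking 2 at random without replacement, the chance that both are raw follows the hypergeometric formula C(raw, k) / C(total, k). This sketch uses Python's `math.comb`.

```python
from math import comb

def prob_all_raw(raw: int, ripe: int, draws: int) -> float:
    """Chance that `draws` fruits picked without replacement are all raw (hypergeometric)."""
    return comb(raw, draws) / comb(raw + ripe, draws)

# Hypothetical basket: 6 raw and 4 ripe fruits, and we pick 2.
p = prob_all_raw(raw=6, ripe=4, draws=2)
print(round(p, 3))  # 0.333
```

So even with 40% of the basket ripe, about one random pick in three would show two raw fruits and seem to support the false conclusion.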

Inductive vs. Deductive Reasoning: Comparative Analysis

- Inductive reasoning draws a generalised conclusion from observations of specific outcomes. Because those observations may change or remain constant, the conclusion may or may not be true.

- Deductive reasoning may seem simple, but it goes wrong if a given premise is wrong. Because deductive reasoning draws its conclusion only from the given premises, the logic must be applied correctly; otherwise, the entire process of reasoning and its conclusion will be wrong.

- We use inductive and deductive reasoning in everyday life, developing beliefs based on our experiences. Both types of reasoning depend on evidence, and both help us get closer to the truth.

- “Don’t join the book burners. Don’t think you are going to conceal faults by concealing evidence that they ever existed.” (Dwight D. Eisenhower)

- One of the main differences between the two methods is that an inductive conclusion is not logically guaranteed and may change as new evidence appears. An inductive argument is judged as strong or weak rather than as strictly true or false.

- In the inductive approach, we arrive at a new piece of information from the other information available. In the deductive approach, on the other hand, the conclusion adds no new information, because it is already contained in the established facts we start from.

- In deductive reasoning, the starting information is known to be a fact, and it leads to an outcome that is also a fact as long as the premises are true. In inductive reasoning, by contrast, the observations only support a hypothesis, which can sometimes lead to a false conclusion.

- Both inductive and deductive reasoning depend on facts and evidence in the search for truth, and their conclusions can be accepted or rejected. Lawyers and scientists use facts backed by evidence to support their statements. We can predict what happened based on the information collected, but no one can prove exactly what happened in the past without strong proof. For example, Arthur Conan Doyle made inductive reasoning prominent: his iconic character Sherlock Holmes uses an inductive approach in his investigations in the quest for truth, and his inferences may or may not hold for a specific incident.

- Superstitious beliefs are based on inductive reasoning. For instance, a cat crossing someone’s path is considered a bad omen, so people take another path to avoid bad luck. Bad luck may sometimes follow by coincidence, but that does not make the belief a logical fact.

- Such beliefs persist when someone ignores the good-luck experiences and remembers only the associated bad ones, treating them as proof of an assumption without any strong evidence.

- Legal argument relies heavily on inductive reasoning: a lawyer’s arguments relate the facts to the evidence to support a claim, and the resulting case can be either strong or weak.

- One of the main disadvantages of inductive reasoning is that it does not guarantee accuracy: the outcome may be wrong. Even so, it is useful when you have incomplete information. For example, “All pigeons are white” is an assumption that needs to be tested against evidence. Because you haven’t seen every pigeon, you need to study them. The findings may show the assumption to be partly wrong: not all pigeons are white, although some are.

“Deductive reasoning is the procedure for utilising the given information. On the other hand, Inductive reasoning is the procedure of achieving it.” Henry Mayhew

Inductive reasoning enables you to develop general ideas from specific observations. You investigate a general hypothesis to gain deep knowledge about it, which enriches your thinking, prompts you to ask questions, and helps you develop an argument that may not always be true but broadens your perception and knowledge.

Deductive reasoning is the opposite of inductive reasoning. As the word ‘deduce’ suggests, it derives a specific idea from a general hypothesis. It ties you to the existing theory and leaves little room to reason from your own perceptions, because you start from an already proven theory and study it in detail.

“Inductive reasoning makes man master of his environment; it is an achievement.” Mohammed Iqbal

This makes it clear that we cannot acquire full knowledge at once. Inductive reasoning gives us the ability to question a specific assumption, investigate it, and gather evidence to support or reject it. Deductive reasoning, on the other hand, follows existing facts and premises without adding personal assumptions.

Hence, both reasoning methods are used in research, and each has its own advantages and limitations depending on the evidence, facts, and research procedure involved.

Frequently Asked Questions

What is meant by inductive reasoning?

Inductive reasoning involves drawing general conclusions from specific observations or evidence. It moves from specific instances to broader theories without guaranteeing absolute truth. It’s common in scientific research and helps formulate hypotheses based on patterns and trends observed.

What is the difference between inductive and deductive reasoning?

Inductive reasoning involves drawing general conclusions from specific observations or instances. It goes from specific to general. Deductive reasoning starts with a general statement or hypothesis and examines the possibilities to reach a specific, logical conclusion. It goes from general to specific. Both are critical to scientific and everyday thinking.

What is an example of inductive reasoning?

After observing that the sun rises in the east every morning for years, one concludes that the sun always rises in the east. This is inductive reasoning: drawing a general conclusion from consistent specific observations, even though the observations are not exhaustive or absolute proof of the conclusion.

What are examples of deductive reasoning?

All men are mortal. Socrates is a man. Therefore, Socrates is mortal. This is deductive reasoning: starting with a general principle and applying it to a specific case to reach a logical conclusion. It proceeds from general to specific, ensuring that the conclusion is certain if the premises are true.

What is inductive reasoning in simple words?

Inductive reasoning is making broad generalisations from specific observations. Essentially, you observe particular instances and then draw a probable conclusion about the entire group. For example, if all swans you’ve seen are white, you might conclude all swans are white, even if you haven’t seen every swan.


Frequently asked questions

How do you use deductive reasoning in research?

Deductive reasoning is commonly used in scientific research, and it’s especially associated with quantitative research.

In research, you might have come across something called the hypothetico-deductive method. It’s the scientific method of testing hypotheses to check whether your predictions are substantiated by real-world data.

Frequently asked questions: Methodology

Attrition refers to participants leaving a study. It always happens to some extent, for example in randomized controlled trials for medical research.

Differential attrition occurs when attrition or dropout rates differ systematically between the intervention and the control group. As a result, the characteristics of the participants who drop out differ from the characteristics of those who stay in the study. Because of this, study results may be biased.
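Whether dropout differs between groups can be checked by comparing attrition rates per arm. The counts below are invented for illustration, and how large a gap must be before it signals bias is a judgement call (often backed by a formal test), not something this sketch decides.

```python
def attrition_rate(enrolled: int, completed: int) -> float:
    """Share of enrolled participants who left before the study ended."""
    return (enrolled - completed) / enrolled

# Hypothetical trial counts, invented for illustration.
groups = {
    "intervention": {"enrolled": 100, "completed": 70},
    "control": {"enrolled": 100, "completed": 90},
}

rates = {name: attrition_rate(g["enrolled"], g["completed"]) for name, g in groups.items()}
gap = rates["intervention"] - rates["control"]
print(rates)          # {'intervention': 0.3, 'control': 0.1}
print(round(gap, 2))  # 0.2
```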

Action research is conducted in order to solve a particular issue immediately, while case studies are often conducted over a longer period of time and focus more on observing and analyzing a particular ongoing phenomenon.

Action research is focused on solving a problem or informing individual and community-based knowledge in a way that impacts teaching, learning, and other related processes. It is less focused on contributing theoretical input, instead producing actionable input.

Action research is particularly popular with educators as a form of systematic inquiry because it prioritizes reflection and bridges the gap between theory and practice. Educators are able to simultaneously investigate an issue as they solve it, and the method is very iterative and flexible.

A cycle of inquiry is another name for action research. It is usually visualized in a spiral shape following a series of steps, such as “planning → acting → observing → reflecting.”

To make quantitative observations, you need to use instruments that are capable of measuring the quantity you want to observe. For example, you might use a ruler to measure the length of an object or a thermometer to measure its temperature.

Criterion validity and construct validity are both types of measurement validity. In other words, they both show you how accurately a method measures something.

While construct validity is the degree to which a test or other measurement method measures what it claims to measure, criterion validity is the degree to which a test’s results correspond to a criterion (an established outcome measure), either predictively (measured in the future) or concurrently (measured at the same time).

Construct validity is often considered the overarching type of measurement validity. You need to have face validity, content validity, and criterion validity in order to achieve construct validity.

Convergent validity and discriminant validity are both subtypes of construct validity. Together, they help you evaluate whether a test measures the concept it was designed to measure.

- Convergent validity indicates whether a test that is designed to measure a particular construct correlates with other tests that assess the same or similar construct.

- Discriminant validity indicates whether two tests that should not be highly related to each other are indeed not related. This type of validity is also called divergent validity.

You need to assess both in order to demonstrate construct validity. Neither one alone is sufficient for establishing construct validity.


Content validity shows you how accurately a test or other measurement method taps into the various aspects of the specific construct you are researching.

In other words, it helps you answer the question: “Does the test measure all aspects of the construct I want to measure?” If it does, then the test has high content validity.

The higher the content validity, the more accurate the measurement of the construct.

If the test fails to include parts of the construct, or irrelevant parts are included, the validity of the instrument is threatened, which brings your results into question.

Face validity and content validity are similar in that they both evaluate how suitable the content of a test is. The difference is that face validity is subjective, and assesses content at surface level.

When a test has strong face validity, anyone would agree that the test’s questions appear to measure what they are intended to measure.

For example, looking at a 4th grade math test consisting of problems in which students have to add and multiply, most people would agree that it has strong face validity (i.e., it looks like a math test).

On the other hand, content validity evaluates how well a test represents all the aspects of a topic. Assessing content validity is more systematic and relies on expert evaluation of each question, analyzing whether each one covers the aspects that the test was designed to cover.

A 4th grade math test would have high content validity if it covered all the skills taught in that grade. Experts (in this case, math teachers) would have to evaluate the content validity by comparing the test to the learning objectives.

Snowball sampling is a non-probability sampling method. Unlike probability sampling (which involves some form of random selection), the initial individuals selected to be studied are the ones who recruit new participants.

Because not every member of the target population has an equal chance of being recruited into the sample, selection in snowball sampling is non-random.

Snowball sampling is a non-probability sampling method, where there is not an equal chance for every member of the population to be included in the sample.

This means that you cannot use inferential statistics and make generalizations, which are often the goal of quantitative research. As such, a snowball sample is not representative of the target population and is usually a better fit for qualitative research.

Snowball sampling relies on the use of referrals. Here, the researcher recruits one or more initial participants, who then recruit the next ones.

Participants share similar characteristics and/or know each other. Because of this, not every member of the population has an equal chance of being included in the sample, giving rise to sampling bias.
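The referral process can be sketched as a breadth-first walk through a network of acquaintances. The network below is hypothetical. Note how P7 and P8 can never be recruited because no one in the chain knows them, which is the kind of sampling bias described above.

```python
from collections import deque

# Hypothetical referral network: each person lists the people they could refer.
contacts = {
    "P1": ["P2", "P3"],
    "P2": ["P4"],
    "P3": ["P4", "P5"],
    "P4": [],
    "P5": ["P6"],
    "P6": [],
    "P7": ["P8"],  # nobody in the chain knows P7 or P8
    "P8": [],
}

def snowball_sample(seeds: list[str], contacts: dict[str, list[str]], max_size: int) -> list[str]:
    """Recruit the seeds first, then follow referrals wave by wave (breadth-first)."""
    sample: list[str] = []
    seen = set(seeds)     # people already recruited or already referred
    queue = deque(seeds)  # people waiting to be recruited, in referral order
    while queue and len(sample) < max_size:
        person = queue.popleft()
        sample.append(person)
        for referral in contacts.get(person, []):
            if referral not in seen:
                seen.add(referral)
                queue.append(referral)
    return sample

print(snowball_sample(["P1"], contacts, max_size=10))
# ['P1', 'P2', 'P3', 'P4', 'P5', 'P6']
```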

Snowball sampling is best used in the following cases:

- If there is no sampling frame available (e.g., people with a rare disease)

- If the population of interest is hard to access or locate (e.g., people experiencing homelessness)

- If the research focuses on a sensitive topic (e.g., extramarital affairs)

The reproducibility and replicability of a study can be ensured by writing a transparent, detailed method section and using clear, unambiguous language.

Reproducibility and replicability are related terms.

- Reproducing research entails reanalyzing the existing data in the same manner.

- Replicating (or repeating) the research entails reconducting the entire analysis, including the collection of new data.

- A successful reproduction shows that the data analyses were conducted in a fair and honest manner.

- A successful replication shows that the reliability of the results is high.

Stratified sampling and quota sampling both involve dividing the population into subgroups and selecting units from each subgroup. The purpose in both cases is to select a representative sample and/or to allow comparisons between subgroups.

The main difference is that in stratified sampling, you draw a random sample from each subgroup (probability sampling). In quota sampling, you select a predetermined number or proportion of units in a non-random manner (non-probability sampling).
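A minimal sketch of the stratified side of this difference, assuming a hypothetical population of 60 urban and 40 rural people: a simple random sample is drawn inside each stratum with Python's `random.Random.sample`. The fixed seed only makes the draw repeatable; it is not part of the method.

```python
import random

# Hypothetical population divided into two strata.
population = {
    "urban": [f"U{i}" for i in range(1, 61)],  # 60 people
    "rural": [f"R{i}" for i in range(1, 41)],  # 40 people
}

def stratified_sample(strata: dict[str, list[str]], per_stratum: int, seed: int = 0) -> dict[str, list[str]]:
    """Probability sampling: draw a simple random sample within every stratum."""
    rng = random.Random(seed)  # fixed seed only to make the example repeatable
    return {name: rng.sample(units, per_stratum) for name, units in strata.items()}

sample = stratified_sample(population, per_stratum=5)
print({name: len(units) for name, units in sample.items()})  # {'urban': 5, 'rural': 5}
```

Equal allocation (5 per stratum) is used here for simplicity; proportional allocation, where stratum sample sizes follow population shares, is a common alternative.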

Purposive and convenience sampling are both sampling methods that are typically used in qualitative data collection.

A convenience sample is drawn from a source that is conveniently accessible to the researcher. Convenience sampling does not distinguish characteristics among the participants. On the other hand, purposive sampling focuses on selecting participants possessing characteristics associated with the research study.

The findings of studies based on either convenience or purposive sampling can only be generalized to the (sub)population from which the sample is drawn, and not to the entire population.

Random sampling or probability sampling is based on random selection. This means that each unit has an equal chance (i.e., equal probability) of being included in the sample.

On the other hand, convenience sampling involves stopping whoever happens to be available (for example, passers-by), which means that not everyone has an equal chance of being selected; who you reach depends on the place, time, or day you are collecting your data.

Convenience sampling and quota sampling are both non-probability sampling methods. They both use non-random criteria like availability, geographical proximity, or expert knowledge to recruit study participants.

However, in convenience sampling, you continue to sample units or cases until you reach the required sample size.

In quota sampling, you first need to divide your population of interest into subgroups (strata) and estimate their proportions (quota) in the population. Then you can start your data collection, using convenience sampling to recruit participants, until the proportions in each subgroup coincide with the estimated proportions in the population.
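The quota procedure described above can be sketched as a loop over people in the order they become available, accepting each person only while their subgroup's quota is still open. The arrivals and the 3/2 quotas (mirroring an assumed 60/40 split in a sample of 5) are hypothetical.

```python
def quota_sample(arrivals: list[tuple[str, str]], quotas: dict[str, int]) -> list[str]:
    """Accept people in arrival order while their subgroup's quota is still open.

    arrivals: (person_id, subgroup) pairs in the order people become available.
    """
    filled = {group: 0 for group in quotas}
    sample: list[str] = []
    for person, group in arrivals:
        if group in quotas and filled[group] < quotas[group]:
            sample.append(person)
            filled[group] += 1
        if filled == quotas:  # every quota is met, so recruitment stops
            break
    return sample

# Hypothetical arrivals; quotas of 3 urban and 2 rural assume a 60/40 population split.
arrivals = [("A", "urban"), ("B", "urban"), ("C", "urban"), ("D", "urban"),
            ("E", "rural"), ("F", "urban"), ("G", "rural"), ("H", "rural")]
print(quota_sample(arrivals, {"urban": 3, "rural": 2}))  # ['A', 'B', 'C', 'E', 'G']
```

Who gets in depends entirely on arrival order, which is why quota sampling remains non-probability sampling even though the subgroup proportions match.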

A sampling frame is a list of every member in the entire population. It is important that the sampling frame is as complete as possible, so that your sample accurately reflects your population.

Stratified and cluster sampling may look similar, but bear in mind that groups created in cluster sampling are heterogeneous, so the individual characteristics in the cluster vary. In contrast, groups created in stratified sampling are homogeneous, as units share characteristics.

Relatedly, in cluster sampling you randomly select entire groups and include all units of each group in your sample. However, in stratified sampling, you select some units of all groups and include them in your sample. In this way, both methods can ensure that your sample is representative of the target population.
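For contrast with stratified sampling, here is a minimal sketch of one-stage cluster sampling: whole clusters are selected at random and every unit inside them is included. The schools and pupils are hypothetical, and the fixed seed only makes the example repeatable.

```python
import random

# Hypothetical clusters (e.g. schools), each containing a mix of pupils.
clusters = {
    "school_1": ["s1a", "s1b", "s1c"],
    "school_2": ["s2a", "s2b"],
    "school_3": ["s3a", "s3b", "s3c", "s3d"],
    "school_4": ["s4a", "s4b"],
}

def cluster_sample(clusters: dict[str, list[str]], n_clusters: int, seed: int = 0) -> list[str]:
    """Randomly select whole clusters, then include every unit in each selected cluster."""
    rng = random.Random(seed)  # fixed seed only to make the example repeatable
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for name in chosen for unit in clusters[name]]

selected = cluster_sample(clusters, n_clusters=2)
print(selected)
```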

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

The key difference between observational studies and experimental designs is that a well-done observational study does not influence the responses of participants, while experiments do have some sort of treatment condition applied to at least some participants by random assignment.

An observational study is a great choice for you if your research question is based purely on observations. If there are ethical, logistical, or practical concerns that prevent you from conducting a traditional experiment, an observational study may be a good choice. In an observational study, there is no interference or manipulation of the research subjects, as well as no control or treatment groups.

It’s often best to ask a variety of people to review your measurements. You can ask experts, such as other researchers, or laypeople, such as potential participants, to judge the face validity of tests.

While experts have a deep understanding of research methods, the people you’re studying can provide you with valuable insights you may have missed otherwise.

Face validity is important because it’s a simple first step to measuring the overall validity of a test or technique. It’s a relatively intuitive, quick, and easy way to start checking whether a new measure seems useful at first glance.

Good face validity means that anyone who reviews your measure says that it seems to be measuring what it’s supposed to. With poor face validity, someone reviewing your measure may be left confused about what you’re measuring and why you’re using this method.

Face validity is about whether a test appears to measure what it’s supposed to measure. This type of validity is concerned with whether a measure seems relevant and appropriate for what it’s assessing only on the surface.

Statistical analyses are often applied to test validity with data from your measures. You test convergent validity and discriminant validity with correlations to see if results from your test are positively or negatively related to those of other established tests.

You can also use regression analyses to assess whether your measure is actually predictive of outcomes that you expect it to predict theoretically. A regression analysis that supports your expectations strengthens your claim of construct validity.

When designing or evaluating a measure, construct validity helps you ensure you’re actually measuring the construct you’re interested in. If you don’t have construct validity, you may inadvertently measure unrelated or distinct constructs and lose precision in your research.

Construct validity is often considered the overarching type of measurement validity, because it covers all of the other types. You need to have face validity, content validity, and criterion validity to achieve construct validity.

Construct validity is about how well a test measures the concept it was designed to evaluate. It’s one of four types of measurement validity, which include construct validity, content validity, face validity, and criterion validity.

There are two subtypes of construct validity.

- Convergent validity: The extent to which your measure corresponds to measures of related constructs

- Discriminant validity: The extent to which your measure is unrelated or negatively related to measures of distinct constructs

Naturalistic observation is a valuable tool because of its flexibility, external validity, and suitability for topics that can’t be studied in a lab setting.

The downsides of naturalistic observation include its lack of scientific control, ethical considerations, and potential for bias from observers and subjects.

Naturalistic observation is a qualitative research method where you record the behaviors of your research subjects in real world settings. You avoid interfering or influencing anything in a naturalistic observation.

You can think of naturalistic observation as “people watching” with a purpose.

A dependent variable is what changes as a result of the independent variable manipulation in experiments. It’s what you’re interested in measuring, and it “depends” on your independent variable.

In statistics, dependent variables are also called:

- Response variables (they respond to a change in another variable)

- Outcome variables (they represent the outcome you want to measure)

- Left-hand-side variables (they appear on the left-hand side of a regression equation)

An independent variable is the variable you manipulate, control, or vary in an experimental study to explore its effects. It’s called “independent” because it’s not influenced by any other variables in the study.

Independent variables are also called:

- Explanatory variables (they explain an event or outcome)

- Predictor variables (they can be used to predict the value of a dependent variable)

- Right-hand-side variables (they appear on the right-hand side of a regression equation)
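Written as a linear regression equation, the dependent variable sits on the left and the independent variables on the right. The symbols below are standard regression notation introduced here for illustration: $y_i$ is the outcome for participant $i$, $x_{1i}$ and $x_{2i}$ are two hypothetical predictors, the $\beta$ terms are coefficients to be estimated, and $\varepsilon_i$ is the error term.

```latex
\underbrace{y_i}_{\text{dependent (left-hand side)}}
  = \beta_0 + \beta_1 \underbrace{x_{1i}}_{\text{independent}}
  + \beta_2 \underbrace{x_{2i}}_{\text{independent}} + \varepsilon_i
```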

As a rule of thumb, questions related to thoughts, beliefs, and feelings work well in focus groups. Take your time formulating strong questions, paying special attention to phrasing. Be careful to avoid leading questions, which can bias your responses.

Overall, your focus group questions should be:

- Open-ended and flexible

- Impossible to answer with “yes” or “no” (questions that start with “why” or “how” are often best)

- Unambiguous, getting straight to the point while still stimulating discussion

- Unbiased and neutral

A structured interview is a data collection method that relies on asking questions in a set order to collect data on a topic. Structured interviews are often quantitative in nature and are best used when:

- You already have a very clear understanding of your topic. Perhaps significant research has already been conducted, or you have done some prior research yourself; either way, you already possess a baseline for designing strong structured questions.

- You are constrained in terms of time or resources and need to analyze your data quickly and efficiently.

- Your research question depends on strong parity between participants, with environmental conditions held constant.

More flexible interview options include semi-structured interviews, unstructured interviews, and focus groups.

Social desirability bias is the tendency for interview participants to give responses that will be viewed favorably by the interviewer or other participants. It occurs in all types of interviews and surveys, but is most common in semi-structured interviews, unstructured interviews, and focus groups.

Social desirability bias can be mitigated by ensuring participants feel at ease and comfortable sharing their views. Make sure to pay attention to your own body language and any physical or verbal cues, such as nodding or widening your eyes.

This type of bias can also occur in observations if the participants know they’re being observed. They might alter their behavior accordingly.

The interviewer effect is a type of bias that emerges when a characteristic of an interviewer (race, age, gender identity, etc.) influences the responses given by the interviewee.

There is a risk of an interviewer effect in all types of interviews, but it can be mitigated by writing high-quality interview questions.

A semi-structured interview is a blend of structured and unstructured types of interviews. Semi-structured interviews are best used when:

- You have prior interview experience. Spontaneous questions are deceptively challenging, and it’s easy to accidentally ask a leading question or make a participant uncomfortable.

- Your research question is exploratory in nature. Participant answers can guide future research questions and help you develop a more robust knowledge base for future research.

An unstructured interview is the most flexible type of interview, but it is not always the best fit for your research topic.

Unstructured interviews are best used when:

- You are an experienced interviewer and have a very strong background in your research topic, since it is challenging to ask spontaneous, colloquial questions.

- Your research question is exploratory in nature. While you may have developed hypotheses, you are open to discovering new or shifting viewpoints through the interview process.

- You are seeking descriptive data, and are ready to ask questions that will deepen and contextualize your initial thoughts and hypotheses.

- Your research depends on forming connections with your participants and making them feel comfortable revealing deeper emotions, lived experiences, or thoughts.

The four most common types of interviews are:

- Structured interviews: The questions are predetermined in both topic and order.

- Semi-structured interviews: A few questions are predetermined, but other questions aren’t planned.

- Unstructured interviews: None of the questions are predetermined.

- Focus group interviews: The questions are presented to a group instead of one individual.

Deductive reasoning is a logical approach where you progress from general ideas to specific conclusions. It’s often contrasted with inductive reasoning, where you start with specific observations and form general conclusions.

Deductive reasoning is also called deductive logic.

There are many different types of inductive reasoning that people use formally or informally.

Here are a few common types:

- Inductive generalization: You use observations about a sample to come to a conclusion about the population it came from.

- Statistical generalization: You use specific numbers about samples to make statements about populations.

- Causal reasoning: You make cause-and-effect links between different things.

- Sign reasoning: You make a conclusion about a correlational relationship between different things.

- Analogical reasoning: You make a conclusion about something based on its similarities to something else.
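Statistical generalization can be illustrated with a confidence interval for a proportion. This sketch assumes a hypothetical survey in which 120 of 200 sampled students report exercising weekly and uses the simple normal-approximation (Wald) interval; other intervals, such as the Wilson interval, behave better for small samples or extreme proportions.

```python
from math import sqrt

def proportion_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% confidence interval for a population proportion (Wald method)."""
    p = successes / n
    margin = z * sqrt(p * (1 - p) / n)
    return p - margin, p + margin

# Hypothetical sample: 120 of 200 students report exercising weekly.
low, high = proportion_ci(120, 200)
print(f"{low:.3f} to {high:.3f}")  # 0.532 to 0.668
```

The sample proportion (0.6) becomes a statement about the population: a plausible range for the true share, not a certainty, which mirrors the probabilistic nature of inductive conclusions.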

Inductive reasoning is a bottom-up approach, while deductive reasoning is top-down.

Inductive reasoning takes you from the specific to the general, while in deductive reasoning, you make inferences by going from general premises to specific conclusions.

In inductive research, you start by making observations or gathering data. Then, you take a broad scan of your data and search for patterns. Finally, you make general conclusions that you might incorporate into theories.

Inductive reasoning is a method of drawing conclusions by going from the specific to the general. It’s usually contrasted with deductive reasoning, where you proceed from general information to specific conclusions.

Inductive reasoning is also called inductive logic or bottom-up reasoning.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess; it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

Triangulation can help:

- Reduce research bias that comes from using a single method, theory, or investigator

- Enhance validity by approaching the same topic with different tools

- Establish credibility by giving you a complete picture of the research problem

But triangulation can also pose problems:

- It’s time-consuming and labor-intensive, often involving an interdisciplinary team.

- Your results may be inconsistent or even contradictory.

There are four main types of triangulation:

- Data triangulation: Using data from different times, spaces, and people

- Investigator triangulation: Involving multiple researchers in collecting or analyzing data

- Theory triangulation: Using varying theoretical perspectives in your research

- Methodological triangulation: Using different methodologies to approach the same topic

Many academic fields use peer review, largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the published manuscript.

However, peer review is also common in non-academic settings. The United Nations, the European Union, and many individual nations use peer review to evaluate grant applications. It is also widely used in medical and health-related fields as a teaching or quality-of-care measure.

Peer assessment is often used in the classroom as a pedagogical tool. Both receiving feedback and providing it are thought to enhance the learning process, helping students think critically and collaboratively.

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. It also represents an excellent opportunity to get feedback from renowned experts in your field. It acts as a first defense, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process.

Peer-reviewed articles are considered a highly credible source due to this stringent process they go through before publication.

In general, the peer review process follows the following steps:

- First, the author submits the manuscript to the editor.

- Next, the editor can either:

  - Reject the manuscript and send it back to the author, or

  - Send it onward to the selected peer reviewer(s).

- Then, the peer review process occurs. The reviewer provides feedback, addressing any major or minor issues with the manuscript, and gives their advice regarding what edits should be made.

- Lastly, the edited manuscript is sent back to the author. They input the edits and resubmit it to the editor for publication.

Exploratory research is often used when the issue you’re studying is new or when the data collection process is challenging for some reason.

You can use exploratory research if you have a general idea or a specific question that you want to study, but there is no preexisting knowledge or paradigm with which to study it.

Exploratory research is a methodological approach that explores research questions that have not previously been studied in depth.

Explanatory research is used to investigate how or why a phenomenon occurs. Because it is typically used when little information about a topic is available, this type of research is often one of the first stages in the research process, serving as a jumping-off point for future research.

Exploratory research aims to explore the main aspects of an under-researched problem, while explanatory research aims to explain the causes and consequences of a well-defined problem.

Explanatory research is a research method used to investigate how or why something occurs when only a small amount of information is available pertaining to that topic. It can help you increase your understanding of a given topic.

Clean data are valid, accurate, complete, consistent, unique, and uniform. Dirty data include inconsistencies and errors.

Dirty data can come from any part of the research process, including poor research design, inappropriate measurement materials, or flawed data entry.

Data cleaning takes place between data collection and data analysis. However, you can apply some methods even before collecting data.

For clean data, you should start by designing measures that collect valid data. Data validation at the time of data entry or collection helps you minimize the amount of data cleaning you’ll need to do.
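Here is a minimal Python sketch of validation at the point of data entry. It checks each incoming record against plausible ranges before the record is stored. The field names (`age`, `weight_kg`), the ranges in `VALID_RANGES` and the function `validate_record` are all hypothetical. A real study would derive its rules from its own measures.

```python
# Hypothetical plausible-range rules for two fields; a real study defines its own.
VALID_RANGES = {
    "age": (18, 99),
    "weight_kg": (30.0, 250.0),
}


def validate_record(record: dict) -> list[str]:
    """Return a list of problems found in one incoming record (empty if valid)."""
    problems = []
    for field, (low, high) in VALID_RANGES.items():
        value = record.get(field)
        if value is None:
            problems.append(f"{field}: missing")
        elif not isinstance(value, (int, float)):
            problems.append(f"{field}: not a number")
        elif not low <= value <= high:
            problems.append(f"{field}: {value} outside {low}-{high}")
    return problems


print(validate_record({"age": 34, "weight_kg": 72.5}))  # []
print(validate_record({"age": 340, "weight_kg": None}))
# ['age: 340 outside 18-99', 'weight_kg: missing']
```

Flagging a record at entry time lets you fix it while the source is still at hand. This is usually cheaper than cleaning it after collection.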

After data collection, you can use data standardization and data transformation to clean your data. You’ll also deal with any missing values, outliers, and duplicate values.

Every dataset requires different techniques to clean dirty data, but you need to address these issues in a systematic way. The focus is on finding and resolving data points that don’t agree or fit with the rest of your dataset.

These data points might be missing values, outliers, or duplicate values, or they might be incorrectly formatted or irrelevant. You’ll start by screening and diagnosing your data. Then, you’ll often standardize your data and accept or remove individual data points to make your dataset consistent and valid.
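The screening steps can be sketched in plain Python using assumed data. The sketch does four things:

- It standardizes inconsistent answer codes.
- It removes exact duplicates.
- It lists missing values.
- It flags outliers with Tukey's interquartile-range fences.

The IQR rule is a common rule of thumb swapped in for illustration; the text does not prescribe a specific outlier rule. The records, the `SMOKER_CODES` mapping and the cutoff of 1.5 times the IQR are all assumptions.

```python
import statistics

# Hypothetical raw survey records: participant id, smoker answer, weight in kg.
raw = [
    {"id": 1, "smoker": "Yes", "weight_kg": 70.0},
    {"id": 2, "smoker": "n", "weight_kg": 65.5},
    {"id": 2, "smoker": "n", "weight_kg": 65.5},    # exact duplicate entry
    {"id": 3, "smoker": "NO", "weight_kg": None},   # missing value
    {"id": 4, "smoker": "y", "weight_kg": 68.0},
    {"id": 5, "smoker": "no", "weight_kg": 720.0},  # likely a data-entry error
    {"id": 6, "smoker": "yes", "weight_kg": 74.2},
]

# Assumed mapping of inconsistent spellings onto one coding scheme.
SMOKER_CODES = {"yes": True, "y": True, "no": False, "n": False}


def standardize(record):
    """Return a copy of the record with the smoker answer coded as True/False."""
    cleaned = dict(record)
    cleaned["smoker"] = SMOKER_CODES.get(str(record["smoker"]).strip().lower())
    return cleaned


def remove_duplicates(records):
    """Keep the first occurrence of each identical record."""
    seen, unique = set(), []
    for r in records:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


records = remove_duplicates([standardize(r) for r in raw])
missing = [r["id"] for r in records if r["weight_kg"] is None]
weights = [r["weight_kg"] for r in records if r["weight_kg"] is not None]
outliers = iqr_outliers(weights)

print("missing weight for ids:", missing)  # [3]
print("flagged outliers:", outliers)       # [720.0]
```

The outlier is only flagged, not deleted. Whether to accept or remove it is a decision you should make, and document, based on your research context.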

Data cleaning is necessary for valid and appropriate analyses. Dirty data contain inconsistencies or errors, but cleaning your data helps you minimize or resolve these.

Without data cleaning, you could end up with a Type I or Type II error in your conclusion. These erroneous conclusions can have practically significant consequences, because they can lead to misplaced investments or missed opportunities.

Data cleaning involves spotting and resolving potential data inconsistencies or errors to improve your data quality. An error is any value (e.g., recorded weight) that doesn’t reflect the true value (e.g., actual weight) of something that’s being measured.

In this process, you review, analyze, detect, modify, or remove “dirty” data to make your dataset “clean.” Data cleaning is also called data cleansing or data scrubbing.

Research misconduct means making up or falsifying data, manipulating data analyses, or misrepresenting results in research reports. It’s a form of academic fraud.

These actions are committed intentionally and can have serious consequences; research misconduct is not a simple mistake or a point of disagreement but a serious ethical failure.

Anonymity means you don’t know who the participants are, while confidentiality means you know who they are but remove identifying information from your research report. Both are important ethical considerations.

You can only guarantee anonymity by not collecting any personally identifying information—for example, names, phone numbers, email addresses, IP addresses, physical characteristics, photos, or videos.
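For confidentiality, one common practice is pseudonymization. Direct identifiers are split off into a separate linkage key, and the research data carry only a random participant code. The sketch below assumes hypothetical fields (`name`, `email`, `score`) and a hypothetical `pseudonymize` helper. It is an illustration, not a complete data-protection procedure.

```python
import secrets

# Hypothetical participant records that still contain direct identifiers.
participants = [
    {"name": "Ana Silva", "email": "ana@example.org", "score": 42},
    {"name": "Ben Okoro", "email": "ben@example.org", "score": 37},
]

# Fields treated as identifying in this example (an assumption).
IDENTIFYING_FIELDS = {"name", "email"}


def pseudonymize(records):
    """Split records into de-identified data and a separate linkage key."""
    deidentified, key = [], {}
    for record in records:
        # Random code that is not derived from the participant's identity;
        # regenerate on the (very unlikely) chance of a collision.
        code = "P-" + secrets.token_hex(4)
        while code in key:
            code = "P-" + secrets.token_hex(4)
        key[code] = {f: record[f] for f in IDENTIFYING_FIELDS if f in record}
        clean = {f: v for f, v in record.items() if f not in IDENTIFYING_FIELDS}
        clean["participant"] = code
        deidentified.append(clean)
    return deidentified, key


data, linkage_key = pseudonymize(participants)
print(data)  # scores with random participant codes, no names or emails
```

In this setup, the linkage key would be stored separately from the data, with restricted access. That way the de-identified dataset alone does not reveal who took part.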

You can keep data confidential by using aggregate information in your research report, so that you only refer to groups of participants rather than individuals.
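Here is a minimal sketch of aggregate reporting. Responses are grouped, and only group sizes and means are reported. The data and group names are hypothetical. The minimum group size (`MIN_GROUP_SIZE = 3`) is an assumed threshold for suppressing very small groups. It is added because a "group" of one participant would effectively describe an individual, so set it according to your own ethics protocol.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical de-identified responses: (group, score).
responses = [
    ("control", 12), ("control", 15), ("control", 11), ("control", 14),
    ("treatment", 18), ("treatment", 20), ("treatment", 17),
    ("pilot", 9),  # a group of one could identify an individual
]

MIN_GROUP_SIZE = 3  # assumed threshold, not a universal rule


def aggregate(rows, min_size=MIN_GROUP_SIZE):
    """Report only group-level counts and means, suppressing small groups."""
    groups = defaultdict(list)
    for group, score in rows:
        groups[group].append(score)
    report = {}
    for group, scores in groups.items():
        if len(scores) < min_size:
            report[group] = f"suppressed (n < {min_size})"
        else:
            report[group] = {"n": len(scores), "mean": round(mean(scores), 2)}
    return report


report = aggregate(responses)
print(report)
```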

Research ethics matter for scientific integrity, human rights and dignity, and collaboration between science and society. These principles make sure that participation in studies is voluntary, informed, and safe.

Ethical considerations in research are a set of principles that guide your research designs and practices. These principles include voluntary participation, informed consent, anonymity, confidentiality, potential for harm, and results communication.

Scientists and researchers must always adhere to a certain code of conduct when collecting data from others.

These considerations protect the rights of research participants, enhance research validity, and maintain scientific integrity.

In multistage sampling, you can use probability or non-probability sampling methods.

For a probability sample, you have to conduct probability sampling at every stage.

You can mix it up by using simple random sampling, systematic sampling, or stratified sampling to select units at different stages, depending on what is applicable and relevant to your study.

Multistage sampling can simplify data collection when you have large, geographically spread samples, and you can obtain a probability sample without a complete sampling frame.

However, multistage sampling may not lead to a representative sample, and multistage samples need to be larger than simple random samples to achieve the same statistical properties.

These are four of the most common mixed methods designs:

- Convergent parallel: Quantitative and qualitative data are collected at the same time and analyzed separately. After both analyses are complete, compare your results to draw overall conclusions.

- Embedded: Quantitative and qualitative data are collected at the same time, but within a larger quantitative or qualitative design. One type of data is secondary to the other.

- Explanatory sequential: Quantitative data is collected and analyzed first, followed by qualitative data. You can use this design if you think your qualitative data will explain and contextualize your quantitative findings.

- Exploratory sequential: Qualitative data is collected and analyzed first, followed by quantitative data. You can use this design if you think the quantitative data will confirm or validate your qualitative findings.

Triangulation in research means using multiple datasets, methods, theories and/or investigators to address a research question. It’s a research strategy that can help you enhance the validity and credibility of your findings.

Triangulation is mainly used in qualitative research, but it’s also commonly applied in quantitative research. Mixed methods research always uses triangulation.

In multistage sampling , or multistage cluster sampling, you draw a sample from a population using smaller and smaller groups at each stage.

This method is often used to collect data from a large, geographically spread group of people in national surveys, for example. You take advantage of hierarchical groupings (e.g., from state to city to neighborhood) to create a sample that’s less expensive and time-consuming to collect data from.
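Here is a sketch of a three-stage sample that draws states, then cities, then neighborhoods. Different methods can be mixed across stages, so it uses simple random sampling for the first two stages and systematic sampling for the last. The toy sampling frame and the function `multistage_sample` are hypothetical.

```python
import random

# Hypothetical sampling frame: state -> city -> list of neighborhoods.
# In practice only the units selected at one stage need to be listed for the
# next stage; this toy frame lists everything for simplicity.
frame = {
    f"State{s}": {
        f"State{s}-City{c}": [f"State{s}-City{c}-Hood{h}" for h in range(10)]
        for c in range(5)
    }
    for s in range(8)
}


def multistage_sample(frame, n_states, n_cities, n_hoods, seed=None):
    """Draw states, then cities, then neighborhoods (assumes each city has >= n_hoods)."""
    rng = random.Random(seed)
    sample = []
    # Stage 1: simple random sample of states.
    for state in rng.sample(sorted(frame), n_states):
        # Stage 2: simple random sample of cities within each selected state.
        for city in rng.sample(sorted(frame[state]), n_cities):
            hoods = frame[state][city]
            # Stage 3: systematic sample, every k-th neighborhood from a random start.
            k = len(hoods) // n_hoods
            start = rng.randrange(k)
            sample.extend(hoods[start::k][:n_hoods])
    return sample


print(multistage_sample(frame, n_states=3, n_cities=2, n_hoods=2, seed=1))
```

Only 3 of the 8 states and 6 of the 40 cities are visited. This is where the savings in cost and travel time come from.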

The correlation coefficient is not the same as the steepness, or slope, of the line. It only tells you how closely your data fit on a line, so two datasets with the same correlation coefficient can have very different slopes.

To find the slope of the line, you’ll need to perform a regression analysis.

Correlation coefficients always range between -1 and 1.

The sign of the coefficient tells you the direction of the relationship: a positive value means the variables change together in the same direction, while a negative value means they change together in opposite directions.

The absolute value of a number is equal to the number without its sign. The absolute value of a correlation coefficient tells you the magnitude of the correlation: the greater the absolute value, the stronger the correlation.
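Pearson’s r can be computed directly as the covariance of the two variables divided by the product of their spreads. The sign of the result gives the direction of the relationship, and its absolute value gives the magnitude. The helper names `pearson_r` and `describe` and the example data are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson's r: sum of co-deviations divided by the product of the spreads."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when a variable has no variation")
    return sxy / math.sqrt(sxx * syy)


def describe(r):
    """Report the direction (sign) and magnitude (absolute value) of r."""
    direction = "positive" if r > 0 else "negative" if r < 0 else "none"
    return f"r = {r:.2f} ({direction}, |r| = {abs(r):.2f})"


hours = [1, 2, 3, 4, 5]
print(describe(pearson_r(hours, [2, 4, 5, 4, 5])))  # r = 0.77 (positive, |r| = 0.77)
print(describe(pearson_r(hours, [9, 7, 6, 3, 1])))  # r = -0.99 (negative, |r| = 0.99)
```

Because the numerator can never exceed the denominator in absolute value, the result always falls between -1 and 1.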

These are the assumptions your data must meet if you want to use Pearson’s r:

- Both variables are on an interval or ratio level of measurement

- Data from both variables follow normal distributions

- Your data have no outliers

- Your data come from a random or representative sample

- You expect a linear relationship between the two variables

Quantitative research designs can be divided into two main categories:

- Correlational and descriptive designs are used to investigate characteristics, averages, trends, and associations between variables.

- Experimental and quasi-experimental designs are used to test causal relationships.

Qualitative research designs tend to be more flexible. Common types of qualitative design include case study, ethnography, and grounded theory designs.

A well-planned research design helps ensure that your methods match your research aims, that you collect high-quality data, and that you use the right kind of analysis to answer your questions, using credible sources. This allows you to draw valid, trustworthy conclusions.

The priorities of a research design can vary depending on the field, but you usually have to specify:

- Your research questions and/or hypotheses

- Your overall approach (e.g., qualitative or quantitative)

- The type of design you’re using (e.g., a survey, experiment, or case study)

- Your sampling methods or criteria for selecting subjects

- Your data collection methods (e.g., questionnaires, observations)

- Your data collection procedures (e.g., operationalization, timing and data management)

- Your data analysis methods (e.g., statistical tests or thematic analysis)

A research design is a strategy for answering your research question. It defines your overall approach and determines how you will collect and analyze data.

Questionnaires can be self-administered or researcher-administered.

Self-administered questionnaires can be delivered online or in paper-and-pen formats, in person or by mail. All questions are standardized so that all respondents receive the same questions with identical wording.

Researcher-administered questionnaires are interviews that take place by phone, in person, or online between researchers and respondents. You can gain deeper insights by clarifying questions for respondents or asking follow-up questions.

You can organize the questions logically, with a clear progression from simple to complex, or randomize their order for each respondent. A logical flow helps respondents process the questionnaire more easily and quickly, but it may introduce bias. Randomization can minimize the bias from order effects.
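One way to randomize question order while keeping it reproducible is to shuffle a copy of the question list. The random generator is seeded per respondent. The question texts, `study_seed` and `question_order` are hypothetical. The sketch assumes all questions can be freely reordered.

```python
import random

# Hypothetical question bank, kept in its logical (master) order.
QUESTIONS = [
    "How often do you exercise?",
    "How would you rate your sleep quality?",
    "How stressed have you felt this week?",
    "How satisfied are you with your diet?",
]


def question_order(respondent_id: int, study_seed: int = 2024) -> list[str]:
    """Return a shuffled copy of the questions, reproducible per respondent."""
    rng = random.Random(f"{study_seed}-{respondent_id}")  # string seeds are allowed
    order = QUESTIONS[:]  # copy so the master list keeps its logical order
    rng.shuffle(order)
    return order


for respondent in (1, 2):
    print(respondent, question_order(respondent))
```

Seeding by respondent lets you reconstruct each person’s order later, for example to check whether answers differ by question position.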

Closed-ended, or restricted-choice, questions offer respondents a fixed set of choices to select from. These questions are easier to answer quickly.

Open-ended or long-form questions allow respondents to answer in their own words. Because there are no restrictions on their choices, respondents can answer in ways that researchers may not have otherwise considered.

A questionnaire is a data collection tool or instrument, while a survey is an overarching research method that involves collecting and analyzing data from people using questionnaires.

The third variable and directionality problems are two main reasons why correlation isn’t causation.

The third variable problem means that a confounding variable affects both variables to make them seem causally related when they are not.

The directionality problem is when two variables correlate and might actually have a causal relationship, but it’s impossible to conclude which variable causes changes in the other.

Correlation describes an association between variables: when one variable changes, so does the other. A correlation is a statistical indicator of the relationship between variables.

Causation means that changes in one variable bring about changes in the other (i.e., there is a cause-and-effect relationship between variables). The two variables are correlated with each other, and there’s also a causal link between them.

While causation and correlation can exist simultaneously, correlation does not imply causation. In other words, correlation is simply a relationship where A relates to B, but A doesn’t necessarily cause B to happen (or vice versa). Mistaking correlation for causation is a common error and can lead to the false cause fallacy.

Controlled experiments can establish causality, whereas correlational studies only show associations between variables.

- In an experimental design, you manipulate an independent variable and measure its effect on a dependent variable. Other variables are controlled so they can’t impact the results.

- In a correlational design, you measure variables without manipulating any of them. You can test whether your variables change together, but you can’t be sure that one variable caused a change in another.
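Random assignment is one common way experimenters keep other variables from differing systematically between groups. The code below randomly assigns participants to a treatment group and a control group. Random assignment is added here for illustration, and the participant IDs and the `randomly_assign` helper are hypothetical.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two groups of (near) equal size."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the input order is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}


groups = randomly_assign([f"P{i:02d}" for i in range(1, 21)], seed=7)
print(groups["treatment"])
print(groups["control"])
```

Chance alone decides who ends up in which group. Participant characteristics should therefore balance out across groups on average, which is what lets an experiment attribute differences to the manipulated variable.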

In general, correlational research is high in external validity while experimental research is high in internal validity.