The Three Most Common Types of Hypotheses

In this post, I discuss three of the most common hypotheses in psychology research, and what statistics are often used to test them.

By Sean, September 28, 2013

Simple main effects (i.e., X leads to Y) are usually not going to get you published. Main effects can be exciting in the early stages of research to show the existence of a new effect, but as a field matures the types of questions that scientists are trying to answer tend to become more nuanced and specific. In this post, I’ll briefly describe the three most common kinds of hypotheses that expand upon simple main effects – at least, the most common ones I’ve seen in my research career in psychology – and point to some resources to help you learn how to test these hypotheses using statistics.

Incremental Validity

“Can X predict Y over and above other important predictors?”

This is probably the simplest of the three hypotheses I describe. Basically, you attempt to rule out potential confounding variables by controlling for them in your analysis. This matters because, in many cases, predictor variables are correlated with each other. Correlated predictors are undesirable from a statistical perspective, but they are common in real data. The idea is to see whether X can predict unique variance in Y over and above the other variables you include.

In terms of analysis, you are probably going to use some variation of multiple regression or partial correlations. For example, in my own work I’ve shown in the past that friendship intimacy as coded from autobiographical narratives can predict concern for the next generation over and above numerous other variables, such as optimism, depression, and relationship status (Mackinnon et al., 2011).
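As a rough illustration of the incremental-validity logic, the sketch below fits two nested regressions in plain Python and compares their R² values. The data, the variable names (`covariate`, `x`, `y`), and the helper `ols_r_squared` are all invented for this example and do not come from the study cited above. A real analysis would normally use a statistics package and would also test whether the change in R² is significant.

```python
def ols_r_squared(predictors, y):
    """R^2 from an OLS fit of y on the predictor columns plus an intercept."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in predictors] for i in range(n)]
    k = len(rows[0])
    # Normal equations (X'X) b = X'y as an augmented k x (k+1) matrix.
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for c in range(k):
        pivot = max(range(c, k), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        for r in range(k):
            if r != c:
                factor = aug[r][c] / aug[c][c]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[c])]
    beta = [aug[i][k] / aug[i][i] for i in range(k)]
    fitted = [sum(b * v for b, v in zip(beta, row)) for row in rows]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Hypothetical data: x is correlated with the covariate, as real predictors often are.
covariate = [1, 2, 3, 4, 5, 6, 7, 8]
x = [2, 1, 4, 3, 6, 5, 8, 7]
y = [2.1, 2.4, 5.2, 5.3, 8.1, 8.4, 11.0, 11.6]

r2_base = ols_r_squared([covariate], y)       # step 1: control variable only
r2_full = ols_r_squared([covariate, x], y)    # step 2: add the predictor of interest
delta_r2 = r2_full - r2_base                  # unique variance attributable to x

print(f"R2 base = {r2_base:.3f}, R2 full = {r2_full:.3f}, delta R2 = {delta_r2:.3f}")
```

A positive `delta_r2` is the "over and above" part of the hypothesis: it is the share of variance in Y that X explains after the covariate has already been entered.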

Moderation

“Under what conditions does X lead to Y?”

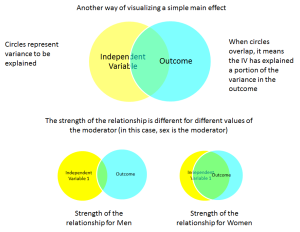

Of the three techniques I describe, moderation is probably the most tricky to understand. Essentially, it proposes that the size of a relationship between two variables changes depending upon the value of a third variable, known as a “moderator.” For example, in the diagram below you might find a simple main effect that is moderated by sex. That is, the relationship is stronger for women than for men:

With moderation, it is important to note that the moderating variable can be a category (e.g., sex) or it can be a continuous variable (e.g., scores on a personality questionnaire). When a moderator is continuous, usually you’re making statements like: “As the value of the moderator increases, the relationship between X and Y also increases.”
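For a categorical moderator, one minimal way to see the idea is to estimate the X-Y slope separately within each group and compare the two. The sketch below does this in plain Python with invented data; the group labels, the numbers, and the helper `slope` are assumptions made for illustration only.

```python
def slope(x, y):
    """Simple-regression slope of y on x: cov(x, y) / var(x)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


# Hypothetical observations: (x, y, group).
data = [
    (1, 2.0, "women"), (2, 4.1, "women"), (3, 5.9, "women"), (4, 8.0, "women"),
    (1, 1.0, "men"), (2, 1.6, "men"), (3, 1.9, "men"), (4, 2.5, "men"),
]

slopes = {}
for group in ("women", "men"):
    xs = [x for x, _, g in data if g == group]
    ys = [y for _, y, g in data if g == group]
    slopes[group] = slope(xs, ys)

# The gap between the two slopes is what a moderation hypothesis is about.
slope_difference = slopes["women"] - slopes["men"]
print({g: round(s, 2) for g, s in slopes.items()}, round(slope_difference, 2))
```

The slope difference computed here equals the coefficient on the X × group product term in a single regression that includes X, a group dummy, and their product. Judging whether that difference is statistically significant requires standard errors, which this sketch leaves out.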

Mediation

“Does X predict M, which in turn predicts Y?”

We might know that X leads to Y, but a mediation hypothesis proposes a mediating, or intervening variable. That is, X leads to M, which in turn leads to Y. In the diagram below I use a different way of visually representing things consistent with how people typically report things when using path analysis.

I use mediation a lot in my own research. For example, I’ve published data suggesting the relationship between perfectionism and depression is mediated by relationship conflict (Mackinnon et al., 2012). That is, perfectionism leads to increased conflict, which in turn leads to heightened depression. Another way of saying this is that perfectionism has an indirect effect on depression through conflict.

Helpful links to get you started testing these hypotheses

Depending on the nature of your data, there are multiple ways to address each of these hypotheses using statistics. They can also be combined together (e.g., mediated moderation). Nonetheless, a core understanding of these three hypotheses and how to analyze them using statistics is essential for any researcher in the social or health sciences. Below are a few links that might help you get started:

Are you a little rusty with multiple regression? The basics of this technique are required for most common tests of these hypotheses. You might check out this guide as a helpful resource:

https://statistics.laerd.com/spss-tutorials/multiple-regression-using-spss-statistics.php

David Kenny’s Mediation Website provides an excellent overview of mediation and moderation for the beginner.

http://davidakenny.net/cm/mediate.htm

http://davidakenny.net/cm/moderation.htm

Preacher and Hayes’s INDIRECT macro is a great, easy way to implement mediation in SPSS software, and their MODPROBE macro is a useful tool for testing moderation.

http://afhayes.com/spss-sas-and-mplus-macros-and-code.html

If you want to graph the results of your moderation analyses, the Excel calculators provided on Jeremy Dawson’s webpage are fantastic, easy-to-use tools:

http://www.jeremydawson.co.uk/slopes.htm


37 replies on “The Three Most Common Types of Hypotheses”

I want to see clearly the three types of hypothesis

Thanks for your information. I really like this

Thank you so much, writing up my masters project now and wasn’t sure whether one of my variables was mediating or moderating….Much clearer now.

Thank you for simplified presentation. It is clearer to me now than ever before.

Thank you. Concise and clear

hello there

I would like to ask about mediation relationship: If I have three variables( X-M-Y)how many hypotheses should I write down? Should I have 2 or 3? In other words, should I have hypotheses for the mediating relationship? What about questions and objectives? Should be 3? Thank you.

Hi Osama. It’s really a stylistic thing. You could write it out as 3 separate hypotheses (X -> Y; X -> M; M -> Y) or you could just write out one mediation hypothesis: “X will have an indirect effect on Y through M.” Usually, I’d write just the 1 because it conserves space, but either would be appropriate.

Hi Sean, according to the three-step model (Dudley, Benuzillo and Carrico, 2004; Pardo and Román, 2013), we can test a mediator hypothesis in three steps: (X -> Y; X -> M; X and M -> Y). Then, we must use the Sobel test to make sure that the effect is significant after using the mediator variable.

Yes, but this is older advice. Best practice now is to calculate an indirect effect and use bootstrapping, rather than the causal steps approach and the more outdated Sobel test. I’d recommend reading Hayes’s (2018) book for more info:

Hayes, A. F. (2018). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach (2nd ed.). Guilford Publications.
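To make the "indirect effect plus bootstrapping" idea concrete, here is a minimal sketch in plain Python. It is not the procedure from the book or from any particular macro: it uses simulated data with assumed path values (a = 0.5, b = 0.4), a simple percentile bootstrap, and helper names (`cov`, `indirect_effect`) invented for this example.

```python
import random


def cov(u, v):
    """Sample covariance of two equal-length lists."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    return sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v)) / (n - 1)


def indirect_effect(x, m, y):
    """a*b, where a = slope of M on X and b = slope of Y on M controlling for X."""
    sxx, smm = cov(x, x), cov(m, m)
    sxm, sxy, smy = cov(x, m), cov(x, y), cov(m, y)
    a = sxm / sxx
    b = (smy * sxx - sxy * sxm) / (sxx * smm - sxm ** 2)
    return a * b


# Simulated (hypothetical) data with a true indirect effect of 0.5 * 0.4 = 0.2.
rng = random.Random(42)
n = 300
x = [rng.gauss(0, 1) for _ in range(n)]
m = [0.5 * xi + rng.gauss(0, 1) for xi in x]
y = [0.4 * mi + 0.1 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

point = indirect_effect(x, m, y)

# Percentile bootstrap: resample cases with replacement and re-estimate a*b each time.
boot = []
for _ in range(1000):
    idx = [rng.randrange(n) for _ in range(n)]
    boot.append(indirect_effect([x[i] for i in idx],
                                [m[i] for i in idx],
                                [y[i] for i in idx]))
boot.sort()
ci_low = boot[int(0.025 * len(boot))]
ci_high = boot[int(0.975 * len(boot)) - 1]

print(f"indirect effect = {point:.3f}, approx. 95% CI [{ci_low:.3f}, {ci_high:.3f}]")
```

If the interval excludes zero, that is usually read as evidence for an indirect effect. The appeal of the bootstrap here is that it does not assume the product a*b is normally distributed, which is the assumption the Sobel test relies on.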

Hi! It’s been really helpful but I still don’t know how to formulate the hypothesis with my mediating variable.

I have one dependent variable DV which is formed by DV1 and DV2, then I have MV (mediating variable), and then 2 independent variables IV1, and IV2.

How many hypothesis should I write? I hope you can help me 🙂

Thank you so much!!

If I’m understanding you correctly, I guess 2 mediation hypotheses:

IV1 –> Med –> DV1&2 IV2 –> Med –> DV1&2

Thank you so much for your quick answer! ^^

Could you help me formulate my research question? English is not my mother language and I have trouble choosing the right words. My x = psychopathy y = aggression m = deficits in emotion recognition

thank you in advance

I have mediator and moderator how should I make my hypothesis

Can you have a negative partial effect? IV – M – DV. That is my M will have negative effect on the DV – e.g Social media usage (M) will partial negative mediate the relationship between father status (IV) and social connectedness (DV)?

Thanks in advance

Hi Ashley. Yes, this is possible, but often it means you have a condition known as “inconsistent mediation” which isn’t usually desirable. See this entry on David Kenny’s page:

Or look up “inconsistent mediation” in this reference:

MacKinnon, D. P., Fairchild, A. J., & Fritz, M. S. (2007). Mediation analysis. Annual Review of Psychology, 58, 593-614.

This is very interesting presentation. i love it.

This is very interesting and educative. I love it.

Hello, you mentioned that for the moderator, it changes the relationship between iv and dv depending on its strength. How would one describe a situation where if the iv is high iv and dv relationship is opposite from when iv is low. And then a 3rd variable maybe the moderator increases dv when iv is low and decreases dv when iv is high.

This isn’t problematic for moderation. Moderation just proposes that the magnitude of the relationship changes as levels of the moderator changes. If the sign flips, probably the original relationship was small. Sometimes people call this a “cross-over” effect, but really, it’s nothing special and can happen in any moderation analysis.

i want to use an independent variable as moderator after this i will have 3 independent variable and 1 dependent variable…. my confusion is do i need to have some past evidence of the X variable moderate the relationship of Y independent variable and Z dependent variable.

Dear Sean, it is really helpful as my research model will use mediation. Because I still face difficulty in developing hypotheses, can you give examples? Thank you

Hi! is it possible to have all three pathways negative? My regression analysis showed significant negative relationships between x to y, x to m and m to y.

Hi, I have 1 independent variable, 1 dependent variable and 4 mediating variable May I know how many hypothesis should I develop?

Hello I have 4 IV , 1 mediating Variable and 1 DV

My model says that 4 IVs when mediated by 1MV leads to 1 Dv

Pls tell me how to set the hypothesis for mediation

Hi I have 4 IVs ,2 Mediating Variables , 1DV and 3 Outcomes (criterion variables).

Pls can u tell me how many hypotheses to set.

Thankyou in advance


what if the hypothesis and moderator are significant in regression and insignificant in moderation?

Thank you so much!! Your slide on the mediator variable let me understand!

Very informative material. The author has used very clear language and I would recommend this for any student of research/

Hi Sean, thanks for the nice material. I have a question: for the second type of hypothesis, you state “That is, the relationship is stronger for men than for women”. Based on the illustration, wouldn’t the opposite be true?

Yes, you’re right! I updated the post to fix the typo, thank you!

I have 3 independent variable one mediator and 2 dependant variable how many hypothesis I have 2 write?

Sounds like 6 mediation hypotheses total:

X1 -> M -> Y1 X2 -> M -> Y1 X3 -> M -> Y1 X1 -> M -> Y2 X2 -> M -> Y2 X3 -> M -> Y2

Clear explanation! Thanks!


Section 7.3: Moderation Models, Assumptions, Interpretation, and Write Up


Learning Objectives

At the end of this section you should be able to answer the following questions:

- What are some basic assumptions behind moderation?

- What are the key components of a write up of moderation analysis?

Moderation Models

Difference between mediation & moderation.

The main difference between mediation and a simple interaction, like in ANOVA models or in moderation models, is that mediation implies that there is a causal sequence. In this case, we know that stress causes ill effects on health, so that would be the causal factor.

Some predictor variables interact in a sequence, rather than impacting the outcome variable singly or as a group (as in regression).

Moderation and mediation are forms of regression that allow researchers to analyse how a third variable affects the relationship between the predictor and outcome variables.

Moderation analyses imply an interaction across the different levels of M.

PowerPoint: Basic Moderation Model

Consider the below model:

- Chapter Seven – Basic Moderation Model

Would the muscle percentage be the same for young, middle-aged, and older participants after training? We know that it is harder to build muscle as we age, so would training have a lower effect on muscle growth in older people?

Example Research Question:

Does cyberbullying moderate the relationship between perceived stress and mental distress?

Moderation Assumptions

- The dependent and independent variables should be measured on a continuous scale.

- There should be a moderator variable that is a nominal variable with at least two groups.

- The variables of interest (the dependent variable and the independent and moderator variables) should have a linear relationship, which you can check with a scatterplot.

- The data must not show multicollinearity (see Multiple Regression).

- There should be no significant outliers, and the distribution of the variables should be approximately normal.

Moderation Interpretation

PowerPoint: Moderation menu, results and output

Please have a look at the following link for the Moderation Menu and Output:

- Chapter Seven – Moderation Output

Interpretation

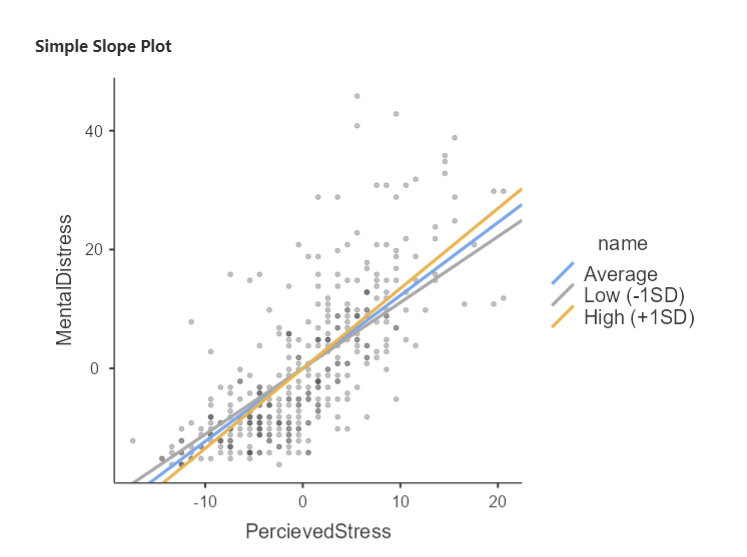

The effects of cyberbullying can be seen in blue, with the perceived stress in green. These are the main effects of the X and M variables on the outcome variable (Y). The interaction effect can be seen in purple. This will tell us if perceived stress is affecting mental distress equally for average, lower than average, or higher than average levels of cyberbullying. If this is significant, then there is a difference in that effect. As can be seen in yellow and grey, perceived stress has an effect on mental distress, but the effect is stronger for those who report higher levels of cyberbullying (see graph).

Moderation Write Up

The following text represents a moderation write up:

A moderation test was run, with perceived stress as the predictor, mental distress as the dependent variable, and cyberbullying as the moderator. There was a significant main effect of perceived stress on mental distress, b = 1.23, BCa CI [1.11, 1.34], z = 21.38, p < .001, and a significant main effect of cyberbullying on mental distress, b = 1.05, BCa CI [0.72, 1.38], z = 6.28, p < .001. There was a significant interaction between cyberbullying and perceived stress in predicting mental distress, b = 0.05, BCa CI [0.01, 0.09], z = 2.16, p = .031. It was found that participants who reported higher than average levels of cyberbullying experienced a greater effect of perceived stress on mental distress (b = 1.35, BCa CI [1.19, 1.50], z = 17.1, p < .001), when compared to average or lower than average levels of cyberbullying (b = 1.23, BCa CI [1.11, 1.34], z = 21.3, p < .001, and b = 1.11, BCa CI [0.95, 1.27], z = 13.8, p < .001, respectively). From these results, it can be concluded that the effect of perceived stress on mental distress is partially moderated by cyberbullying.


How to Write a Strong Hypothesis | Steps & Examples

Published on May 6, 2022 by Shona McCombes. Revised on November 20, 2023.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Example: Hypothesis

Daily apple consumption leads to fewer doctor’s visits.


A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more types of variables.

- An independent variable is something the researcher changes or controls.

- A dependent variable is something the researcher observes and measures.

If there are any control variables, extraneous variables, or confounding variables, be sure to jot those down as you go to minimize the chances that research bias will affect your results.

In this example, the independent variable is exposure to the sun – the assumed cause. The dependent variable is the level of happiness – the assumed effect.


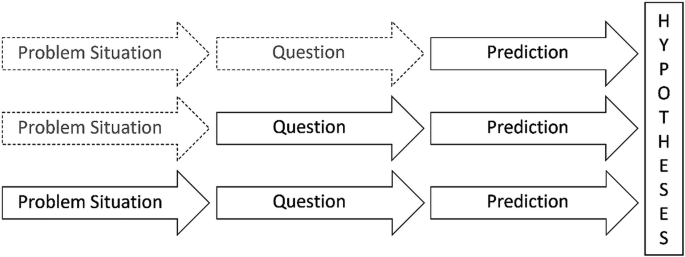

Step 1. Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2. Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to ensure that you’re embarking on a relevant topic. This can also help you identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalize more complex constructs.

Step 3. Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4. Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5. Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if…then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is H1 or Ha.

- H0: The number of lectures attended by first-year students has no effect on their final exam scores.

- H1: The number of lectures attended by first-year students has a positive effect on their final exam scores.


Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.


McCombes, S. (2023, November 20). How to Write a Strong Hypothesis | Steps & Examples. Scribbr. Retrieved March 29, 2024, from https://www.scribbr.com/methodology/hypothesis/


How to Write a Strong Hypothesis | Guide & Examples

Published on 6 May 2022 by Shona McCombes.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.


A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more variables. An independent variable is something the researcher changes or controls. A dependent variable is something the researcher observes and measures.

In this example, the independent variable is exposure to the sun – the assumed cause. The dependent variable is the level of happiness – the assumed effect.

Step 1: Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2: Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalise more complex constructs.

Step 3: Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4: Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5: Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if … then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6: Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is H1 or Ha.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.


A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.


McCombes, S. (2022, May 06). How to Write a Strong Hypothesis | Guide & Examples. Scribbr. Retrieved 25 March 2024, from https://www.scribbr.co.uk/research-methods/hypothesis-writing/


5.2 - Writing Hypotheses

The first step in conducting a hypothesis test is to write the hypothesis statements that are going to be tested. For each test you will have a null hypothesis (\(H_0\)) and an alternative hypothesis (\(H_a\)).

When writing hypotheses there are three things that we need to know: (1) the parameter that we are testing, (2) the direction of the test (non-directional, right-tailed or left-tailed), and (3) the value of the hypothesized parameter.

- At this point we can write hypotheses for a single mean (\(\mu\)), paired means (\(\mu_d\)), a single proportion (\(p\)), the difference between two independent means (\(\mu_1-\mu_2\)), the difference between two proportions (\(p_1-p_2\)), a simple linear regression slope (\(\beta\)), and a correlation (\(\rho\)).

- The research question will give us the information necessary to determine if the test is two-tailed (e.g., "different from," "not equal to"), right-tailed (e.g., "greater than," "more than"), or left-tailed (e.g., "less than," "fewer than").

- The research question will also give us the hypothesized parameter value. This is the number that goes in the hypothesis statements (i.e., \(\mu_0\) and \(p_0\)). For the difference between two groups, regression, and correlation, this value is typically 0.

Hypotheses are always written in terms of population parameters (e.g., \(p\) and \(\mu\)). The tables below display all of the possible hypotheses for the parameters that we have learned thus far. Note that the null hypothesis always includes the equality (i.e., =).
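The three ingredients listed above (parameter, direction, hypothesized value) are enough to generate a pair of hypothesis statements mechanically. The short Python sketch below shows one way to do that. The function name `write_hypotheses`, the direction labels, and the plain-text symbols are choices made for this example, not notation from the course.

```python
def write_hypotheses(parameter, direction, value):
    """Return (null, alternative) statements for a population parameter.

    direction is one of "two-tailed", "right-tailed", or "left-tailed".
    The null hypothesis always carries the equality.
    """
    symbols = {"two-tailed": "!=", "right-tailed": ">", "left-tailed": "<"}
    if direction not in symbols:
        raise ValueError(f"unknown direction: {direction!r}")
    null = f"H0: {parameter} = {value}"
    alternative = f"Ha: {parameter} {symbols[direction]} {value}"
    return null, alternative


# A single mean tested against a hypothesized value of 100 ("greater than").
example_mean = write_hypotheses("mu", "right-tailed", 100)

# A difference between two proportions; the hypothesized value is typically 0.
example_diff = write_hypotheses("p1 - p2", "two-tailed", 0)

for null, alternative in (example_mean, example_diff):
    print(null)
    print(alternative)
```

Here `!=` stands in for the "not equal to" symbol so the output stays plain text. In a written report the same statements would be typeset with the usual Greek letters.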


Chapter 14: Mediation and Moderation

Alyssa Blair

1 What are Mediation and Moderation?

Mediation analysis tests a hypothetical causal chain where one variable X affects a second variable M and, in turn, that variable affects a third variable Y. Mediators describe the how or why of a (typically well-established) relationship between two other variables and are sometimes called intermediary variables since they often describe the process through which an effect occurs. This is also sometimes called an indirect effect. For instance, people with higher incomes tend to live longer but this effect is explained by the mediating influence of having access to better health care.

In R, this kind of analysis may be conducted in two ways: Baron & Kenny’s (1986) 4-step indirect effect method and the more recent mediation package (Tingley, Yamamoto, Hirose, Keele, & Imai, 2014). The Baron & Kelly method is among the original methods for testing for mediation but tends to have low statistical power. It is covered in this chapter because it provides a very clear approach to establishing relationships between variables and is still occassionally requested by reviewers. However, the mediation package method is highly recommended as a more flexible and statistically powerful approach.

Moderation analysis also allows you to test for the influence of a third variable, Z, on the relationship between variables X and Y. Rather than testing a causal link between these other variables, moderation tests for when or under what conditions an effect occurs. Moderators can stength, weaken, or reverse the nature of a relationship. For example, academic self-efficacy (confidence in own’s ability to do well in school) moderates the relationship between task importance and the amount of test anxiety a student feels (Nie, Lau, & Liau, 2011). Specifically, students with high self-efficacy experience less anxiety on important tests than students with low self-efficacy while all students feel relatively low anxiety for less important tests. Self-efficacy is considered a moderator in this case because it interacts with task importance, creating a different effect on test anxiety at different levels of task importance.

In general (and thus in R), moderation can be tested by interacting variables of interest (moderator with IV) and plotting the simple slopes of the interaction, if present. A variety of packages also include functions for testing moderation but as the underlying statistical approaches are the same, only the “by hand” approach is covered in detail here.

Finally, this chapter will cover these basic mediation and moderation techniques only. For more complicated techniques, such as multiple mediation, moderated mediation, or mediated moderation please see the mediation package’s full documentation.

1.1 Getting Started

If necessary, review the Chapter on regression. Regression test assumptions may be tested with gvlma . You may load all the libraries below or load them as you go along. Review the help section of any packages you may be unfamiliar with ?(packagename).

2 Mediation Analyses

Mediation tests whether the effects of X (the independent variable) on Y (the dependent variable) operate through a third variable, M (the mediator). In this way, mediators explain the causal relationship between two variables or “how” the relationship works, making it a very popular method in psychological research.

Both mediation and moderation assume that there is little to no measurement error in the mediator/moderator variable and that the DV did not CAUSE the mediator/moderator. If mediator error is likely to be high, researchers should collect multiple indicators of the construct and use SEM to estimate latent variables. The safest ways to make sure your mediator is not caused by your DV are to experimentally manipulate the variable or collect the measurement of your mediator before you introduce your IV.

Total Effect Model.

Basic Mediation Model.

- c = the total effect of X on Y; c = c’ + ab

- c’ = the direct effect of X on Y after controlling for M; c’ = c - ab

- ab = the indirect effect of X on Y

The above shows the standard mediation model. Perfect mediation occurs when the effect of X on Y decreases to 0 with M in the model. Partial mediation occurs when the effect of X on Y decreases by a nontrivial amount (the actual amount is up for debate) with M in the model.
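The decomposition c = c’ + ab can be checked numerically. The following is a minimal sketch in base R on simulated data; the seed, sample size, and coefficients are assumptions made purely for illustration. With ordinary least squares fitted on the same sample, the identity should hold up to floating-point error.

```r
# Simulated data; all values here are illustrative assumptions.
set.seed(1)
n <- 200
X <- rnorm(n)
M <- 0.5 * X + rnorm(n)
Y <- 0.4 * M + 0.2 * X + rnorm(n)

fit_y    <- lm(Y ~ X + M)
c_total  <- coef(lm(Y ~ X))[["X"]]   # total effect c
a_path   <- coef(lm(M ~ X))[["X"]]   # path a: X -> M
b_path   <- coef(fit_y)[["M"]]       # path b: M -> Y, controlling for X
c_direct <- coef(fit_y)[["X"]]       # direct effect c'

indirect <- a_path * b_path          # indirect effect ab
all.equal(c_total, c_direct + indirect)
```

Perfect mediation in this notation would correspond to c’ being close to 0, so that nearly all of c is carried by ab.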

2.1 Example Mediation Data

Set an appropriate working directory and generate the following data set.

In this example we’ll say we are interested in whether the number of hours since dawn (X) affects the subjective ratings of wakefulness (Y) of 100 graduate students through the consumption of coffee (M).

Note that we are intentionally creating a mediation effect here (because statistics is always more fun if we have something to find) and we do so below by creating M so that it is related to X and Y so that it is related to M. This creates the causal chain for our analysis to parse.
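One way such a data set could be generated is sketched below. The seed, means, standard deviations, and path coefficients are assumptions chosen for illustration, so results from this sketch will not match the specific numbers reported later in the chapter.

```r
# Simulated example data; seed, means, SDs, and coefficients are
# illustrative assumptions, not the chapter's original values.
set.seed(123)
N <- 100                              # 100 graduate students
X <- rnorm(N, mean = 6, sd = 2)       # hours since dawn (IV)
M <- 0.7 * X + rnorm(N)               # coffee consumption (mediator), built from X
Y <- 0.4 * M + rnorm(N)               # wakefulness (DV), built from M only
Meddata <- data.frame(X, M, Y)
head(Meddata)
```

Because Y depends on X only through M, any X to Y relationship in these data is entirely indirect by construction.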

2.2 Method 1: Baron & Kenny

This is the original 4-step method used to describe a mediation effect. Steps 1 and 2 use basic linear regression while steps 3 and 4 use multiple regression. For help with regression, see Chapter 10.

The Steps:

1. Estimate the relationship of X on Y (hours since dawn on degree of wakefulness). Path “c” must be significantly different from 0; there must be a total effect between the IV and DV.

2. Estimate the relationship of X on M (hours since dawn on coffee consumption). Path “a” must be significantly different from 0; the IV and mediator must be related.

3. Estimate the relationship of M on Y controlling for X (coffee consumption on wakefulness, controlling for hours since dawn). Path “b” must be significantly different from 0; the mediator and DV must be related. The effect of X on Y decreases with the inclusion of M in the model.

4. Estimate the relationship of X on Y controlling for M (hours since dawn on wakefulness, controlling for coffee consumption). Path “c’” should be non-significant and nearly 0.
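The four steps above can be sketched with three `lm` calls, since steps 3 and 4 are read from the same multiple regression. The block regenerates simulated data (with assumed, illustrative parameters) so that it runs on its own; the object names are hypothetical.

```r
# Simulated data with assumed parameters (illustrative only).
set.seed(123)
N <- 100
X <- rnorm(N, mean = 6, sd = 2)   # hours since dawn
M <- 0.7 * X + rnorm(N)           # coffee consumption
Y <- 0.4 * M + rnorm(N)           # wakefulness
Meddata <- data.frame(X, M, Y)

fit_total  <- lm(Y ~ X, data = Meddata)      # Step 1: path c
fit_a      <- lm(M ~ X, data = Meddata)      # Step 2: path a
fit_direct <- lm(Y ~ X + M, data = Meddata)  # Steps 3 and 4: paths b and c'

bk_paths <- c(
  c       = coef(fit_total)[["X"]],
  a       = coef(fit_a)[["X"]],
  b       = coef(fit_direct)[["M"]],
  c_prime = coef(fit_direct)[["X"]]
)
bk_paths
coef(summary(fit_direct))   # estimates, standard errors, t and p values
```

The significance of each path is judged from the corresponding row of the coefficient table, as in any regression.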

2.3 Interpreting Baron & Kenny Results

Here we find that our total effect model shows a significant positive relationship between hours since dawn (X) and wakefulness (Y). Our Path A model shows that hours since dawn (X) is also positively related to coffee consumption (M). Our Path B model then shows that coffee consumption (M) positively predicts wakefulness (Y) when controlling for hours since dawn (X). Finally, hours since dawn (X) no longer predicts wakefulness (Y) when controlling for coffee consumption (M).

Since the relationship between hours since dawn and wakefulness is no longer significant when controlling for coffee consumption, this suggests that coffee consumption does in fact mediate this relationship. However, this method alone does not allow for a formal test of the indirect effect so we don’t know if the change in this relationship is truly meaningful.

There are two primary methods for formally testing the significance of the indirect effect: the Sobel test & bootstrapping (covered under the mediation method).

The Sobel Test uses a specialized t-test to determine if there is a significant reduction in the effect of X on Y when M is present. Using the sobel function of the multilevel package will provide you with three of the basic models we ran before (Mod1 = Total Effect; Mod2 = Path B; and Mod3 = Path A) as well as an estimate of the indirect effect, the standard error of that effect, and the z-value for that effect. You can either use this value to calculate your p-value or run the mediation.test function from the bda package to receive a p-value for this estimate.

In this case, we can now confirm that the relationship between hours since dawn and feelings of wakefulness is significantly mediated by the consumption of coffee (z’ = 3.84, p < .001).

However, the Sobel Test is largely considered an outdated method since it assumes that the indirect effect (ab) is normally distributed and tends to only have adequate power with large sample sizes. Thus, again, it is highly recommended to use the mediation bootstrapping method instead.
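For readers who want to see what the Sobel statistic computes, here is a hand-rolled sketch in base R using the first-order standard error sqrt(b²·SE_a² + a²·SE_b²). It is not the `sobel` function from the multilevel package, and it runs on freshly simulated data with assumed parameters, so its output will differ from the values reported above.

```r
# Simulated data with assumed parameters (illustrative only).
set.seed(123)
N <- 100
X <- rnorm(N, mean = 6, sd = 2)
M <- 0.7 * X + rnorm(N)
Y <- 0.4 * M + rnorm(N)

coef_a <- coef(summary(lm(M ~ X)))       # path a model
coef_b <- coef(summary(lm(Y ~ X + M)))   # path b model (controls for X)

a  <- coef_a["X", "Estimate"]
sa <- coef_a["X", "Std. Error"]
b  <- coef_b["M", "Estimate"]
sb <- coef_b["M", "Std. Error"]

sobel_se <- sqrt(b^2 * sa^2 + a^2 * sb^2)   # first-order SE of ab
sobel_z  <- (a * b) / sobel_se
sobel_p  <- 2 * pnorm(-abs(sobel_z))        # two-sided p, assuming normality of ab
c(indirect = a * b, se = sobel_se, z = sobel_z, p = sobel_p)
```

The normal approximation in the last step is exactly the assumption that the paragraph above criticises.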

2.4 Method 2: The Mediation Package Method

This package uses the more recent bootstrapping method of Preacher & Hayes (2004) to address the power limitations of the Sobel Test. This method computes the point estimate of the indirect effect (ab) over a large number of random samples (typically 1000), so it does not assume that the data are normally distributed and is more suitable for small sample sizes than the Baron & Kenny method.

To run the mediate function, we will again need a model of our IV (hours since dawn), predicting our mediator (coffee consumption) like our Path A model above. We will also need a model of the direct effect of our IV (hours since dawn) on our DV (wakefulness), when controlling for our mediator (coffee consumption). We can then use mediate to repeatedly simulate a comparison between these models and to test the significance of the indirect effect of coffee consumption.
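To make the resampling idea concrete, the sketch below runs a percentile bootstrap of the indirect effect by hand in base R. It is a stand-in for illustration, not the `mediate` function itself, and it uses simulated data with assumed parameters, so its estimates will not match the ACME values discussed in the next section. The helper `indirect_effect` is a hypothetical name.

```r
# Simulated data with assumed parameters (illustrative only).
set.seed(123)
N <- 100
X <- rnorm(N, mean = 6, sd = 2)
M <- 0.7 * X + rnorm(N)
Y <- 0.4 * M + rnorm(N)
dat <- data.frame(X, M, Y)

# Hypothetical helper: estimate ab from the two regression models.
indirect_effect <- function(d) {
  a <- coef(lm(M ~ X, data = d))[["X"]]
  b <- coef(lm(Y ~ X + M, data = d))[["M"]]
  a * b
}

n_boot  <- 1000
boot_ab <- replicate(n_boot, {
  idx <- sample(nrow(dat), replace = TRUE)   # resample rows with replacement
  indirect_effect(dat[idx, ])
})

ab_hat <- indirect_effect(dat)
ci     <- quantile(boot_ab, c(0.025, 0.975))  # 95% percentile interval
ab_hat
ci
```

If the interval excludes 0, the indirect effect would be judged significant at the 5% level under this approach.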

2.5 Interpreting Mediation Results

The mediate function gives us our Average Causal Mediation Effects (ACME), our Average Direct Effects (ADE), our combined indirect and direct effects (Total Effect), and the ratio of these estimates (Prop. Mediated). The ACME here is the indirect effect of M (total effect - direct effect) and thus this value tells us if our mediation effect is significant.

In this case, our fitMed model again shows a significant effect of coffee consumption on the relationship between hours since dawn and feelings of wakefulness (ACME = .28, p < .001), with no direct effect of hours since dawn (ADE = -0.11, p = .27) and a significant total effect (p < .05).

We can then bootstrap this comparison to verify this result in fitMedBoot and again find a significant mediation effect (ACME = .28, p < .001) and no direct effect of hours since dawn (ADE = -0.11, p = .27). However, with increased power, this analysis no longer shows a significant total effect ( p = .08).

3 Moderation Analyses

Moderation tests whether a variable (Z) affects the direction and/or strength of the relation between an IV (X) and a DV (Y). In other words, moderation tests for interactions that affect WHEN relationships between variables occur. Moderators are conceptually different from mediators (when versus how/why) but some variables may be a moderator or a mediator depending on your question. See the mediation package documentation for ways of testing more complicated mediated moderation/moderated mediation relationships.

Like mediation, moderation assumes that there is little to no measurement error in the moderator variable and that the DV did not CAUSE the moderator. If moderator error is likely to be high, researchers should collect multiple indicators of the construct and use SEM to estimate latent variables. The safest ways to make sure your moderator is not caused by your DV are to experimentally manipulate the variable or collect the measurement of your moderator before you introduce your IV.

Basic Moderation Model.

3.1 Example Moderation Data

In this example we’ll say we are interested in whether the relationship between the number of hours of sleep (X) a graduate student receives and the attention that they pay to this tutorial (Y) is influenced by their consumption of coffee (Z). Here we create the moderation effect by making our DV (Y) the product of levels of the IV (X) and our moderator (Z).
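A data set with a built-in moderation effect could be simulated along the following lines. The seed, distributions, and coefficients are illustrative assumptions; the key ingredient is the product term X * Z in the equation for Y.

```r
# Simulated moderation data; all parameters are illustrative assumptions.
set.seed(123)
N <- 100
X <- rnorm(N, mean = 7, sd = 1.5)   # hours of sleep (IV)
Z <- rnorm(N, mean = 3, sd = 1)     # cups of coffee (moderator)
# The X * Z product term is what creates the moderation effect.
Y <- 0.5 * X + 0.3 * Z + 0.2 * X * Z + rnorm(N)   # attention paid (DV)
Moddata <- data.frame(X, Z, Y)
summary(Moddata)
```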

3.2 Moderation Analysis

Moderation can be tested by looking for significant interactions between the moderating variable (Z) and the IV (X). Notably, it is important to mean center both your moderator and your IV to reduce multicollinearity and make interpretation easier. Centering can be done using the scale function, which subtracts the mean of a variable from each value in that variable. For more information on the use of centering, see ?scale and any number of statistical textbooks that cover regression (we recommend Cohen, 2008).

A number of packages in R can also be used to conduct and plot moderation analyses, including the moderate.lm function of the QuantPsyc package and the pequod package. However, it is simple to do this “by hand” using traditional multiple regression, as shown here, and the underlying analysis (interacting the moderator and the IV) in these packages is identical to this approach. The rockchalk package used here is one of many graphing and plotting packages available in R and was chosen because it was especially designed for use with regression analyses (unlike the more general graphing options described in Chapters 8 & 9).
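The “by hand” approach can be sketched as follows: center both predictors with `scale`, then fit a regression that includes their interaction. The data are simulated with assumed parameters, and the object names (`Xc`, `Zc`, `fitMod`) are chosen for illustration.

```r
# Simulated moderation data; all parameters are illustrative assumptions.
set.seed(123)
N <- 100
X <- rnorm(N, mean = 7, sd = 1.5)   # hours of sleep
Z <- rnorm(N, mean = 3, sd = 1)     # coffee consumption
Y <- 0.5 * X + 0.3 * Z + 0.2 * X * Z + rnorm(N)
Moddata <- data.frame(X, Z, Y)

# scale() returns a one-column matrix; as.numeric() turns it into a plain vector.
Moddata$Xc <- as.numeric(scale(Moddata$X, center = TRUE, scale = FALSE))
Moddata$Zc <- as.numeric(scale(Moddata$Z, center = TRUE, scale = FALSE))

# Xc * Zc expands to Xc + Zc + Xc:Zc (both main effects plus the interaction).
fitMod <- lm(Y ~ Xc * Zc, data = Moddata)
coef(summary(fitMod))
```

The row labelled `Xc:Zc` in the coefficient table is the test of moderation.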

3.3 Interpreting Moderation Results

Results are presented similarly to regular multiple regression results (see Chapter 10). Since we have significant interactions in this model, there is no need to interpret the separate main effects of either our IV or our moderator.

Our by hand model shows a significant interaction between hours slept and coffee consumption on attention paid to this tutorial (b = .23, SE = .04, p < .001). However, we’ll need to unpack this interaction visually to get a better idea of what this means.

The rockchalk function will automatically plot the simple slopes (1 SD above and 1 SD below the mean) of the moderating effect. This figure shows that those who drank less coffee (the black line) paid more attention the more sleep they got last night but paid less attention overall than average (the red line). Those who drank more coffee (the green line) also paid more attention when they slept more and paid more attention than average. The difference in the slopes for those who drank more or less coffee shows that coffee consumption moderates the relationship between hours of sleep and attention paid.
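The simple slopes that such a plot displays can also be computed directly from the regression coefficients. The sketch below does this by hand in base R rather than with rockchalk, on simulated data with assumed parameters: the slope of the centered IV at a given moderator value z is the IV coefficient plus the interaction coefficient times z.

```r
# Simulated moderation data; all parameters are illustrative assumptions.
set.seed(123)
N <- 100
X <- rnorm(N, mean = 7, sd = 1.5)
Z <- rnorm(N, mean = 3, sd = 1)
Y <- 0.5 * X + 0.3 * Z + 0.2 * X * Z + rnorm(N)
Moddata <- data.frame(Y, Xc = X - mean(X), Zc = Z - mean(Z))

fitMod <- lm(Y ~ Xc * Zc, data = Moddata)
b <- coef(fitMod)

# Moderator values at 1 SD below the mean, the mean, and 1 SD above the mean.
z_levels <- c(minus1SD = -sd(Moddata$Zc), mean = 0, plus1SD = sd(Moddata$Zc))

# Simple slope of Xc at each moderator level.
simple_slopes <- b[["Xc"]] + b[["Xc:Zc"]] * z_levels
simple_slopes
```

The gap between the slopes at plus and minus 1 SD is driven entirely by the interaction coefficient, which is why a non-zero interaction implies non-parallel lines.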

4 References and Further Reading

Baron, R., & Kenny, D. (1986). The moderator-mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology, 51, 1173-1182.

Cohen, B. H. (2008). Explaining psychological statistics. John Wiley & Sons.

Imai, K., Keele, L., & Tingley, D. (2010). A general approach to causal mediation analysis. Psychological methods, 15(4), 309.

MacKinnon, D. P., Lockwood, C. M., Hoffman, J. M., West, S. G., & Sheets, V. (2002). A comparison of methods to test mediation and other intervening variable effects. Psychological methods, 7(1), 83.

Nie, Y., Lau, S., & Liau, A. K. (2011). Role of academic self-efficacy in moderating the relation between task importance and test anxiety. Learning and Individual Differences, 21(6), 736-741.

Tingley, D., Yamamoto, T., Hirose, K., Keele, L., & Imai, K. (2014). Mediation: R package for causal mediation analysis.


Section 7.1: Mediation and Moderation Models

Learning Objectives

At the end of this section you should be able to answer the following questions:

- Define the concept of a moderator variable.

- Define the concept of a mediator variable.

As we discussed in the lesson on correlations and regressions, understanding associations between psychological constructs can tell researchers a great deal about how certain mental health concerns and behaviours affect us on an emotional level. Correlation analyses focus on the relationship between two variables, and regression is the association of multiple independent variables with a single dependent variable.

Some predictor variables interact in a sequence, rather than impacting the outcome variable singly or as a group (like regression).

Moderation and mediation are forms of regression that allow researchers to analyse how a third variable affects the relationship between the predictor and outcome variables.

PowerPoint: Basic Mediation Model

Consider the Basic Mediation Model in this slide:

- Chapter Seven – Basic Mediation Model

We know that high levels of stress can negatively impact health, and we also know that a high level of social support can be beneficial to health. With these two points of knowledge, could it be that social support might provide a protective factor from the effects of stress on health? Thinking about a sequence of effects, perhaps social support can mediate the effect of stress on health.

Mediation is a more complicated extension of multiple regression procedures. Mediation examines the pattern of relationships among three variables (Simple Mediation Model), and can be used on four or more variables.

Examples of Research Questions

Here are some examples of research questions that could use a mediation analysis.

- If an intervention increases secure attachment among young children, do behavioural problems decrease when the children enter school?

- Does physical abuse in early childhood lead to deviant processing of social information that leads to aggressive behaviour?

- Do performance expectations start a self-fulfilling prophecy that affects behaviour?

- Can changes in cognitive attributions reduce depression?

PowerPoint: Three Mediation Figures

Consider the Three Figures Illustrating Mediation from the following slides:

- Chapter Seven – Three Mediation Figures

Looking at this conceptual model, you can see the direct effect of X on Y. You can also see the effect of M on Y. What we are interested in is the effects of X on Y, accounting for the effects of M.

An example mediation model is that of the mediating effect of health-related behaviours on the relationship between conscientiousness and overall physical health. Conscientiousness, the personality trait associated with being hardworking, has a relationship with overall physical health, but if an individual is hardworking yet does not perform health-related behaviours like exercise or diet control, then they are likely to be less healthy. From this, we can assume that health-related behaviours mediate the relationship between conscientiousness and physical health.

Statistics for Research Students Copyright © 2022 by University of Southern Queensland is licensed under a Creative Commons Attribution 4.0 International License , except where otherwise noted.


Statistics: Data analysis and modelling

Chapter 6 moderation and mediation.

In this chapter, we will focus on two ways in which one predictor variable may affect the relation between another predictor variable and the dependent variable. Moderation means the strength of the relation (in terms of the slope) of a predictor variable is determined by the value of another predictor variable. For instance, while physical attractiveness is generally positively related to mating success, for very rich people, physical attractiveness may not be so important. This is also called an interaction between the two predictor variables. Mediation is a different way in which two predictors affect a dependent variable. It is best thought of as a causal chain, where one predictor variable determines the value of another predictor variable, which then in turn determines the value of the dependent variable. The difference between moderation and mediation is illustrated in Figure 6.1.

Figure 6.1: Graphical depiction of the difference between moderation and mediation. Moderation means that the effect of a predictor ( \(X_1\) ) on the dependent variable ( \(Y\) ) depends on the value of another predictor ( \(X_2\) ). Mediation means that a predictor ( \(X_1\) ) affects the dependent variable ( \(Y\) ) indirectly, through its relation to another predictor ( \(X_2\) ) which is directly related to the dependent variable.

6.1 Moderation

6.1.1 Physical attractiveness and intelligence in speed dating

Fisman, Iyengar, Kamenica, & Simonson (2006) conducted a large scale experiment on dating behaviour. They placed their participants in a speed dating context, where they were randomly matched with a number of potential partners (between 5 and 20) and could converse for four minutes. As part of the study, after each meeting, participants rated how much they liked their speed dating partners, as well as more specifically on their attractiveness, sincerity, intelligence, fun, and ambition. We will focus in particular on ratings of physical attractiveness, fun, and intelligence, and how these are related to the general liking of a person. Ratings were given on a 10-point scale, from 1 (“awful”) to 10 (“great”). A multiple regression analysis predicting general liking from attractiveness, fun, and intelligence (Table 6.1) shows that all three predictors have a significant and positive relation with general liking.

6.1.2 Conditional slopes

If we were to model the relation between overall liking and physical attractiveness and intelligence, we might use a multiple regression model such as: \[\texttt{like}_i = \beta_0 + \beta_{\texttt{attr}} \times \texttt{attr}_i + \beta_{\texttt{intel}} \times \texttt{intel}_i + \epsilon_i \quad \quad \epsilon_i \sim \mathbf{Normal}(0,\sigma_\epsilon)\] which is estimated as \[\texttt{like}_i = -0.0733 + 0.527 \times \texttt{attr}_i + 0.392 \times \texttt{intel}_i + \hat{\epsilon}_i \quad \quad \hat{\epsilon}_i \sim \mathbf{Normal}(0, 1.25)\] The estimates indicate a positive relation to liking of both attractiveness and intelligence. Note that the values of the slopes are different from those in Table 6.1 . The reason for this is that the model in the Table also includes fun as a predictor. Because the slopes reflect unique effects , these depend on all predictors included in the model. When there is dependence between the predictors (i.e. there is multicollinearity) both the estimates of the slopes and the corresponding significance tests will vary when you add or remove predictors from the model.

In the model above, a relative lack in physical attractiveness can be overcome by high intelligence, because in the end, the general liking of someone depends on the sum of both attractiveness and intelligence (each “scaled” by their corresponding slope). For example, someone with an attractiveness rating of \(\texttt{attr}_i = 8\) and an intelligence rating of \(\texttt{intel}_i = 2\) would be expected to be liked as much as a partner as someone with an attractiveness rating of \(\texttt{attr}_i = 3.538\) and an intelligence rating of \(\texttt{intel}_i = 8\) : \[\begin{aligned} \texttt{like}_i &= -0.073 + 0.527 \times 8 + 0.392 \times 2 = 4.924 \\ \texttt{like}_i &= -0.073 + 0.527 \times 3.538 + 0.392 \times 8 = 4.924 \end{aligned}\]

But what if for those lucky people who are very physically attractive, their intelligence doesn’t matter that much , or even at all ? And what if, for those lucky people who are very intelligent, their physical attractiveness doesn’t really matter much or at all? In other words, what if the more attractive people are, the less intelligence determines how much other people like them as a potential partner, and conversely, the more intelligent people are, the less attractiveness determines how much others like them as a potential partner? This implies that the effect of attractiveness on liking depends on intelligence, and that the effect of intelligence on liking depends on attractiveness. Such dependence is not captured by the multiple regression model above. While a relative lack of intelligence might be overcome by a relative abundance of attractiveness, for any level of intelligence, the additional effect of attractiveness is the same (i.e., an increase in attractiveness by one unit will always result in an increase of the predicted liking of 0.527).

Let’s define \(\beta_{\texttt{attr}|\texttt{intel}_i}\) as the slope of \(\texttt{attr}\) conditional on the value of \(\texttt{intel}_i\) . That is, we allow the slope of \(\texttt{attr}\) to vary as a function of \(\texttt{intel}\) . Similarly, we can define \(\beta_{\texttt{intel}|\texttt{attr}_i}\) as the slope of \(\texttt{intel}\) conditional on the value of \(\texttt{attr}\) . Our regression model can then be written as: \[\begin{equation} \texttt{like}_i = \beta_0 + \beta_{\texttt{attr}|\texttt{intel}_i} \times \texttt{attr}_i + \beta_{\texttt{intel} | \texttt{attr}_i} \times \texttt{intel}_i + \epsilon_i \tag{6.1} \end{equation}\] That’s a good start, but what would the value of \(\beta_{\texttt{attr}|\texttt{intel}_i}\) be? Estimating the slope of \(\texttt{attr}\) for each value of \(\texttt{intel}\) by fitting regression models to each subset of data with a particular value of \(\texttt{intel}\) is not really doable. We’d need lots and lots of data, and furthermore, we wouldn’t also be able to simultaneously estimate the value of \(\beta_{\texttt{intel} | \texttt{attr}_i}\) . We need to supply some structure to \(\beta_{\texttt{attr}|\texttt{intel}_i}\) to allow us to estimate its value without overcomplicating things.

6.1.3 Modeling slopes with linear models

One idea is to define \(\beta_{\texttt{attr}|\texttt{intel}_i}\) with a linear model: \[\beta_{\texttt{attr}|\texttt{intel}_i} = \beta_{\texttt{attr},0} + \beta_{\texttt{attr},1} \times \texttt{intel}_i\] This is just like a simple linear regression model, but now the “dependent variable” is the slope of \(\texttt{attr}\) . Defined in this way, the slope of \(\texttt{attr}\) is \(\beta_{\texttt{attr},0}\) when \(\texttt{intel}_i = 0\) , and for every one-unit increase in \(\texttt{intel}_i\) , the slope of \(\texttt{attr}\) increases (or decreases) by \(\beta_{\texttt{attr},1}\) . For example, let’s assume \(\beta_{\texttt{attr},0} = 1\) and \(\beta_{\texttt{attr},1} = 0.5\) . For someone with an intelligence rating of \(\texttt{intel}_i = 0\) , the slope of \(\texttt{attr}\) is \[\beta_{\texttt{attr}|\texttt{intel}_i} = 1 + 0.5 \times 0 = 1\] For someone with an intelligence rating of \(\texttt{intel}_i = 1\) , the slope of \(\texttt{attr}\) is \[\beta_{\texttt{attr}|\texttt{intel}_i} = 1 + 0.5 \times 1 = 1.5\] For someone with an intelligence rating of \(\texttt{intel}_i = 2\) , the slope of \(\texttt{attr}\) is \[\beta_{\texttt{attr}|\texttt{intel}_i} = 1 + 0.5 \times 2 = 2\] As you can see, for every increase in intelligence rating by 1 point, the slope of \(\texttt{attr}\) increases by 0.5. In such a model, there will be values of \(\texttt{intel}\) which result in a negative slope of \(\texttt{attr}\) . For instance, for \(\texttt{intel}_i = -4\) , the slope of \(\texttt{attr}\) is \[\beta_{\texttt{attr}|\texttt{intel}_i} = 1 + 0.5 \times (-4) = - 1\]
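The worked values above can be reproduced with a one-line function. This is a minimal sketch; the function name `slope_attr` is hypothetical, and the two parameter values are the assumed ones from the example.

```r
# Assumed example values from the text: beta_attr_0 = 1, beta_attr_1 = 0.5
beta_attr_0 <- 1
beta_attr_1 <- 0.5

# Slope of attr as a linear function of intel
slope_attr <- function(intel) beta_attr_0 + beta_attr_1 * intel

slope_attr(c(0, 1, 2, -4))   # 1.0 1.5 2.0 -1.0
```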

We can define the slope of \(\texttt{intel}\) in a similar manner as \[\beta_{\texttt{intel}|\texttt{attr}_i} = \beta_{\texttt{intel},0} + \beta_{\texttt{intel},1} \times \texttt{attr}_i\] When we plug these definitions into Equation (6.1) , we get \[\begin{aligned} \texttt{like}_i &= \beta_0 + (\beta_{\texttt{attr},0} + \beta_{\texttt{attr},1} \times \texttt{intel}_i) \times \texttt{attr}_i + (\beta_{\texttt{intel},0} + \beta_{\texttt{intel},1} \times \texttt{attr}_i) \times \texttt{intel}_i + \epsilon_i \\ &= \beta_0 + \beta_{\texttt{attr},0} \times \texttt{attr}_i + \beta_{\texttt{intel},0} \times \texttt{intel}_i + (\beta_{\texttt{attr},1} + \beta_{\texttt{intel},1}) \times (\texttt{attr}_i \times \texttt{intel}_i) + \epsilon_i \end{aligned}\]

Looking carefully at this formula, you can recognize a multiple regression model with three predictors: \(\texttt{attr}\) , \(\texttt{intel}\) , and a new predictor \(\texttt{attr}_i \times \texttt{intel}_i\) , which is computed as the product of these two variables. While it is thus related to both variables, we can treat this product as just another predictor in the model. The slope of this new predictor is the sum of two terms, \(\beta_{\texttt{attr},1} + \beta_{\texttt{intel},1}\) . Although we have defined these as different things (i.e. as the effect of \(\texttt{intel}\) on the slope of \(\texttt{attr}\) , and the effect of \(\texttt{attr}\) on the slope of \(\texttt{intel}\) , respectively), their value can not be estimated uniquely. We can only estimate their summed value. That means that moderation in regression is “symmetric”, in the sense that each predictor determines the slope of the other one. We can not say that it is just intelligence that determines the effect of attraction on liking, nor can we say that it is just attraction that determines the effect of intelligence on liking. The two variables interact and each determine the other’s effect on the dependent variable.

With that in mind, we can simplify the notation of the resulting model somewhat, by renaming the slopes of the two predictors to \(\beta_{\texttt{attr}} = \beta_{\texttt{attr},0}\) and \(\beta_{\texttt{intel}} = \beta_{\texttt{intel},0}\) , and using a single parameter for the sum \(\beta_{\texttt{attr} \times \texttt{intel}} = \beta_{\texttt{attr},1} + \beta_{\texttt{intel},1}\) :

\[\begin{equation} \texttt{like}_i = \beta_0 + \beta_{\texttt{attr}} \times \texttt{attr}_i + \beta_{\texttt{intel}} \times \texttt{intel}_i + \beta_{\texttt{attr} \times \texttt{intel}} \times (\texttt{attr} \times \texttt{intel})_i + \epsilon_i \end{equation}\]

Estimating this model gives \[\texttt{like}_i = -0.791 + 0.657 \times \texttt{attr}_i + 0.488 \times \texttt{intel}_i - 0.0171 \times \texttt{(attr}\times\texttt{intel)}_i + \hat{\epsilon}_i \] The estimate of the slope of the interaction, \(\hat{\beta}_{\texttt{attr} \times \texttt{intel}} = -0.017\) , is negative. That means that the higher the value of \(\texttt{intel}\) , the less steep the regression line relating \(\texttt{attr}\) to \(\texttt{like}\) . At the same time, the higher the value of \(\texttt{attr}\) , the less steep the regression line relating \(\texttt{intel}\) to \(\texttt{like}\) . You can interpret this as meaning that for more intelligent people, physical attractiveness is less of a defining factor in their liking by a potential partner. And for more attractive people, intelligence is less important.

A graphical view of this model, and the earlier one without moderation, is provided in Figure 6.2 . The plot on the left represents the model which does not allow for interaction. You can see that, for different values of intelligence, the model predicts parallel regression lines for the relation between attractiveness and liking. While intelligence affects the intercept of these regression lines, it does not affect the slope. In the plot on the right – although subtle – you can see that the regression lines are not parallel. This is a model with an interaction between intelligence and attractiveness. For different values of intelligence, the model predicts a linear relation between attractiveness and liking, but crucially, intelligence determines both the intercept and slope of these lines.

Figure 6.2: Liking as a function of attractiveness (intelligence) for different levels of intelligence (attractiveness), either without moderation or with moderation of the slope of attractiveness by intelligence. Note that the actual values of liking, attractiveness, and intelligence, are whole numbers (ratings on a scale between 1 and 10). For visualization purposes, the values have been randomly jittered by adding a Normal-distributed displacement term.

Note that we have constructed this model by simply including a new predictor in the model, which is computed by multiplying the values of \(\texttt{attr}\) and \(\texttt{intel}\) . While including such an “interaction predictor” has important implications for the resulting relations between \(\texttt{attr}\) and \(\texttt{like}\) for different values of \(\texttt{intel}\) , as well as the relations between \(\texttt{intel}\) and \(\texttt{like}\) for different values of \(\texttt{attr}\) , the model itself is just like any other regression model. Thus, parameter estimation and inference are exactly the same as before. Table 6.2 shows the results of comparing the full MODEL G (with three predictors) to different versions of MODEL R, where in each we fix one of the parameters to 0. As you can see, these comparisons indicate that we can reject the null hypothesis \(H_0\) : \(\beta_0 = 0\) , as well as \(H_0\) : \(\beta_{\texttt{attr}} = 0\) and \(H_0\) : \(\beta_{\texttt{intel}} = 0\) . However, as the p-value is above the conventional significance level of \(\alpha=.05\) , we would not reject the null hypothesis \(H_0\) : \(\beta_{\texttt{attr} \times \texttt{intel}} = 0\) . That implies that, in the context of this model, there is not sufficient evidence that there is an interaction. That may seem a little disappointing. We’ve done a lot of work to construct a model where we allow the effect of attractiveness to depend on intelligence, and vice versa. And now the hypothesis test indicates that there is no evidence that this moderation is present. As we will see later, there is evidence of this moderation when we also include \(\texttt{fun}\) in the model. I have left this predictor out of the model for now to keep things as simple as possible.

6.1.4 Simple slopes and centering

It is very important to realise that in a model with interactions, there is no single slope for any of the predictors involved in an interaction, that is particularly meaningful in principle. An interaction means that the slope of one predictor varies as a function of another predictor. Depending on which value of that other predictor you focus on, the slope of the predictor can be positive, negative, or zero. Let’s consider the model we estimated again: \[\texttt{like}_i = -0.791 + 0.657 \times \texttt{attr}_i + 0.488 \times \texttt{intel}_i - 0.0171 \times \texttt{(attr}\times\texttt{intel)}_i + \hat{\epsilon}_i \] If we fill in a particular value for intelligence, say \(\texttt{intel} = 1\) , we can write this as

\[\begin{aligned} \texttt{like}_i &= -0.791 + 0.657 \times \texttt{attr}_i + 0.488 \times 1 -0.017 \times (\texttt{attr} \times 1)_i + \epsilon_i \\ &= (-0.791 + 0.488) + (0.657 -0.017) \times \texttt{attr}_i + \epsilon_i \\ &= -0.303 + 0.64 \times \texttt{attr}_i + \epsilon_i \end{aligned}\]

If we pick a different value, say \(\texttt{intel} = 10\) , the model becomes \[\begin{aligned} \texttt{like}_i &= -0.791 + 0.657 \times \texttt{attr}_i + 0.488 \times 10 -0.017 \times (\texttt{attr} \times 10)_i + \epsilon_i \\ &= (-0.791 + 0.488 \times 10) + (0.657 -0.017\times 10) \times \texttt{attr}_i + \epsilon_i \\ &= 4.09 + 0.486 \times \texttt{attr}_i + \epsilon_i \end{aligned}\] This shows that the higher the value of intelligence, the lower the slope of \(\texttt{attr}\) becomes. If you’d pick \(\texttt{intel} = 38.337\) , the slope would be exactly equal to 0. Because there is not just a single value of the slope, testing whether “the” slope of \(\texttt{attr}\) is equal to 0 doesn’t really make sense, because there is no single value to represent “the” slope. What, then, does \(\hat{\beta}_\texttt{attr} = 0.657\) represent? Well, it is the (estimated) slope of \(\texttt{attr}\) when \(\texttt{intel}_i = 0\) . Similarly, \(\hat{\beta}_\texttt{intel} = 0.488\) is the estimated slope of \(\texttt{intel}\) when \(\texttt{attr}_i = 0\) .
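These conditional slopes can be computed directly from the reported estimates. The sketch below uses the rounded coefficients printed in the text (0.657 and -0.0171), so the zero-crossing it returns is only approximately the 38.337 quoted above, which presumably comes from unrounded estimates. The function name `slope_attr` is hypothetical.

```r
# Rounded estimates as printed in the text
b_attr <- 0.657     # slope of attr when intel = 0
b_int  <- -0.0171   # interaction coefficient

# Estimated slope of attr at a given intelligence rating
slope_attr <- function(intel) b_attr + b_int * intel

slope_attr(c(1, 10))           # slopes at intel = 1 and intel = 10
zero_at <- -b_attr / b_int     # intel value at which the slope of attr is 0
zero_at
```

Since ratings only range from 1 to 10, the zero-crossing lies far outside the observed data, so within the observed range the estimated slope of attractiveness stays positive.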

A significance test of the null hypothesis \(H_0\): \(\beta_\texttt{attr} = 0\) is thus a test of whether, when \(\texttt{intel} = 0\), the slope of \(\texttt{attr}\) is 0. This test is easy enough to perform, but is it interesting to know whether liking is related to attractiveness for people whose intelligence was rated as 0? Perhaps not. For one thing, the ratings were on a scale from 1 to 10, so no one could actually receive a rating of 0. Because the slope depends on \(\texttt{intel}\) and we know that for some value of \(\texttt{intel}\), the slope of \(\texttt{attr}\) will equal 0, the hypothesis test will not be significant for some values of \(\texttt{intel}\), and will be significant for others. At which value of \(\texttt{intel}\) we might want to perform such a test is up to us, but the result seems somewhat arbitrary.

That said, we might be interested in assessing whether there is an effect of \(\texttt{attr}\) for particular values of \(\texttt{intel}\). For instance, we might ask whether, for someone with an average intelligence rating, their physical attractiveness matters for how much someone likes them as a potential partner. We can obtain this test by centering the predictors. Centering is basically just subtracting the sample mean from each value of a variable. So for example, we can center \(\texttt{attr}\) as follows: \[\texttt{attr_cent}_i = \texttt{attr}_i - \overline{\texttt{attr}}\] Centering does not affect the relation between variables. You can view it as a simple relabelling of the values, where the value which was the sample mean is now \(\texttt{attr_cent}_i = \overline{\texttt{attr}} - \overline{\texttt{attr}} = 0\), all values below the mean are now negative, and values above the mean are now positive. The important part of this is that the centered predictor is 0 where the original predictor was at the sample mean. In a model with centered predictors \[\begin{aligned} \texttt{like}_i =& \beta_0 + \beta_{\texttt{attr_cent}} \times \texttt{attr_cent}_i + \beta_{\texttt{intel_cent}} \times \texttt{intel_cent}_i \\ &+ \beta_{\texttt{attr_cent} \times \texttt{intel_cent}} \times (\texttt{attr_cent} \times \texttt{intel_cent})_i + \epsilon_i \end{aligned}\] the slope \(\beta_{\texttt{attr_cent}}\) is, as usual, the slope of \(\texttt{attr_cent}\) whenever \(\texttt{intel_cent}_i = 0\). We know that \(\texttt{intel_cent}_i = 0\) when \(\texttt{intel}_i = \overline{\texttt{intel}}\). Hence, \(\beta_{\texttt{attr_cent}}\) is the slope of \(\texttt{attr}\) when \(\texttt{intel} = \overline{\texttt{intel}}\), i.e. it represents the effect of \(\texttt{attr}\) for those with an average intelligence rating.
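One way to see how the centered and uncentered slopes relate is to substitute \(\texttt{attr}_i = \texttt{attr_cent}_i + \overline{\texttt{attr}}\) and \(\texttt{intel}_i = \texttt{intel_cent}_i + \overline{\texttt{intel}}\) into the uncentered model and collect terms. The following is a sketch of that algebra, writing \(\beta_{\texttt{attr} \times \texttt{intel}}\) for the slope of the product predictor in the uncentered model:

\[\begin{aligned} \texttt{like}_i =& \beta_0 + \beta_{\texttt{attr}} \times (\texttt{attr_cent}_i + \overline{\texttt{attr}}) + \beta_{\texttt{intel}} \times (\texttt{intel_cent}_i + \overline{\texttt{intel}}) \\ &+ \beta_{\texttt{attr} \times \texttt{intel}} \times (\texttt{attr_cent}_i + \overline{\texttt{attr}}) \times (\texttt{intel_cent}_i + \overline{\texttt{intel}}) + \epsilon_i \\ =& (\beta_0 + \beta_{\texttt{attr}} \times \overline{\texttt{attr}} + \beta_{\texttt{intel}} \times \overline{\texttt{intel}} + \beta_{\texttt{attr} \times \texttt{intel}} \times \overline{\texttt{attr}} \times \overline{\texttt{intel}}) \\ &+ (\beta_{\texttt{attr}} + \beta_{\texttt{attr} \times \texttt{intel}} \times \overline{\texttt{intel}}) \times \texttt{attr_cent}_i + (\beta_{\texttt{intel}} + \beta_{\texttt{attr} \times \texttt{intel}} \times \overline{\texttt{attr}}) \times \texttt{intel_cent}_i \\ &+ \beta_{\texttt{attr} \times \texttt{intel}} \times (\texttt{attr_cent} \times \texttt{intel_cent})_i + \epsilon_i \end{aligned}\]

Matching terms with the centered model gives \(\beta_{\texttt{attr_cent}} = \beta_{\texttt{attr}} + \beta_{\texttt{attr} \times \texttt{intel}} \times \overline{\texttt{intel}}\), which is the slope of \(\texttt{attr}\) evaluated at the average intelligence rating, while the slope of the product predictor is the same in both models.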

Figure 6.3 shows the resulting model after centering both attractiveness and intelligence. When you compare this to the corresponding plot in Figure 6.2, you can see that the only real difference is in the labels for the x-axis and the scale for intelligence. In all other respects, the uncentered and centered models predict the same relations between attractiveness and liking, and the models provide an equally good account, providing the same prediction errors.

Figure 6.3: Liking as a function of centered attractiveness for different levels of (centered) intelligence in a model including an interaction between attractiveness and intelligence. Note that the actual values of liking, attractiveness, and intelligence, are whole numbers (ratings on a scale between 1 and 10). For visualization purposes, the values have been randomly jittered by adding a Normal-distributed displacement term.

The results of all model comparisons after centering are given in Table 6.3. A first important thing to notice is that centering does not affect the estimate and test of the interaction term. The slope of the interaction predictor reflects the change in the slope relating \(\texttt{attr}\) to \(\texttt{like}\) for every one-unit increase in \(\texttt{intel}\). Such changes to the steepness of the relation between \(\texttt{attr}\) and \(\texttt{like}\) should not be, and are not, affected by changing the 0-point of the predictors through centering. A second thing to notice is that centering changes the estimates and tests of the “simple slopes” and intercept. In the centered model, the simple slope \(\hat{\beta}_\texttt{attr_cent}\) reflects the effect of \(\texttt{attr}\) on \(\texttt{like}\) for cases with an average rating on \(\texttt{intel}\). In Figure 6.3, this is (approximately) the regression line in the middle. In the uncentered model, the simple slope \(\hat{\beta}_\texttt{attr}\) reflects the effect of \(\texttt{attr}\) on \(\texttt{like}\) for cases with \(\texttt{intel} = 0\). In the top right plot in Figure 6.2, this is (approximately) the lower regression line. This latter regression line is quite far removed from most of the data, because there are no cases with an intelligence rating of 0. The regression line for people with an average intelligence rating lies much more “within the cloud of data points”, and reflects the model predictions for many more cases in the data. As a result, the reduction in the SSE that can be attributed to the simple slope is much higher in the centered model (Table 6.3) than the uncentered one (Table 6.2). This results in a much higher \(F\) statistic. You can also think of this as follows: because there are hardly any cases with an intelligence rating close to 0, estimating the effect of attractiveness on liking for these cases is rather difficult and unreliable. Estimating the effect of attractiveness on liking for cases with an average intelligence rating is much more reliable, because there are many more cases with a close-to-average intelligence rating.
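These properties of centering can be checked numerically. The sketch below is an illustration on simulated data, not the speed-dating data analysed in this chapter: the ratings, the effect sizes, and the helper `fit_interaction_model` are all assumptions made up for the example. It fits the interaction model by ordinary least squares with NumPy, once with raw predictors and once with centered predictors.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500

# Simulated ratings on a 1-10 scale (hypothetical data, for illustration only).
attr = rng.integers(1, 11, size=n).astype(float)
intel = rng.integers(1, 11, size=n).astype(float)
like = (-0.8 + 0.66 * attr + 0.49 * intel
        - 0.017 * attr * intel + rng.normal(0, 1, n))


def fit_interaction_model(x1, x2, y):
    """Least-squares fit of y = b0 + b1*x1 + b2*x2 + b3*(x1*x2)."""
    X = np.column_stack([np.ones_like(x1), x1, x2, x1 * x2])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, X @ coef  # estimates and predicted values


raw_coef, raw_pred = fit_interaction_model(attr, intel, like)
cen_coef, cen_pred = fit_interaction_model(
    attr - attr.mean(), intel - intel.mean(), like
)

print("interaction, raw vs centered:", raw_coef[3], cen_coef[3])
print("slope of attr, raw vs centered:", raw_coef[1], cen_coef[1])
print("raw slope of attr at mean intel:", raw_coef[1] + raw_coef[3] * intel.mean())
```

In this simulation the interaction estimate and the predicted values are identical in the two fits, while the centered slope of \(\texttt{attr}\) equals the uncentered slope evaluated at the mean of \(\texttt{intel}\).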

6.1.5 Don’t forget about fun! A model with multiple interactions

Up to now, we have looked at a model with two predictors, attractiveness and intelligence, and have allowed for an interaction between these. To simplify the discussion a little, we have not included \(\texttt{fun}\) in the model. It is relatively straightforward to extend this idea to multiple predictors. For instance, it might also be the case that the effect of \(\texttt{fun}\) is moderated by \(\texttt{intel}\). To investigate this, we can estimate the following regression model:

\[\begin{aligned} \texttt{like}_i =& \beta_0 + \beta_{\texttt{attr}} \times \texttt{attr}_i + \beta_{\texttt{intel}} \times \texttt{intel}_i + \beta_{\texttt{fun}} \times \texttt{fun}_i \\ &+ \beta_{\texttt{attr} \times \texttt{intel}} \times (\texttt{attr} \times \texttt{intel})_i + \beta_{\texttt{fun} \times \texttt{intel}} \times (\texttt{fun} \times \texttt{intel})_i + \epsilon_i \end{aligned}\]

The results, having centered all predictors, are given in Table 6.4. As you can see there, the simple slopes of \(\texttt{attr}\), \(\texttt{intel}\), and \(\texttt{fun}\) are all positive. Each of these represents the effect of that predictor when the other predictors have the value 0. Because the predictors are centered, that means that e.g. the slope of \(\texttt{attr}\) reflects the effect of attractiveness for people with an average rating on intelligence and fun. As before, the estimated interaction between \(\texttt{attr}\) and \(\texttt{intel}\) is negative, indicating that attractiveness has less of an effect on liking for those seen as more intelligent, and that intelligence has less of an effect for those seen as more attractive. The hypothesis test of this effect is now also significant, indicating that we have reliable evidence for this moderation. This shows that by including more predictors in a model, it is possible to increase the reliability of the estimates for other predictors. There is also a significant interaction between \(\texttt{fun}\) and \(\texttt{intel}\). The estimated interaction is positive here. This indicates that fun has more of an effect on liking for those seen as more intelligent, and that intelligence has more of an effect for those seen as more fun. Perhaps you can think of a reason why intelligence appears to lessen the effect of attractiveness, but appears to strengthen the effect of fun…
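To make explicit which slopes depend on which predictors in this model, the terms of the regression equation can be collected per predictor. As a sketch, using the same parameter names as in the model above, the slopes of the three predictors are:

\[\begin{aligned} \text{slope of } \texttt{attr} &= \beta_{\texttt{attr}} + \beta_{\texttt{attr} \times \texttt{intel}} \times \texttt{intel}_i \\ \text{slope of } \texttt{fun} &= \beta_{\texttt{fun}} + \beta_{\texttt{fun} \times \texttt{intel}} \times \texttt{intel}_i \\ \text{slope of } \texttt{intel} &= \beta_{\texttt{intel}} + \beta_{\texttt{attr} \times \texttt{intel}} \times \texttt{attr}_i + \beta_{\texttt{fun} \times \texttt{intel}} \times \texttt{fun}_i \end{aligned}\]

The slopes of \(\texttt{attr}\) and \(\texttt{fun}\) each vary with \(\texttt{intel}\) only, whereas the slope of \(\texttt{intel}\) varies with both \(\texttt{attr}\) and \(\texttt{fun}\), because \(\texttt{intel}\) is involved in both interactions.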

6.2 Mediation

6.2.1 Legacy motives and pro-environmental behaviours

Zaval, Markowitz, & Weber (2015) investigated whether there is a relation between individuals’ motivation to leave a positive legacy in the world, and their pro-environmental behaviours and intentions. The authors reasoned that long time horizons and social distance are key psychological barriers to pro-environmental action, particularly regarding climate change. But if people with a legacy motivation put more emphasis on future others than those without such motivation, they may also be motivated to behave more pro-environmentally in order to benefit those future others. In a pilot study, they recruited a diverse sample of 245 U.S. participants through Amazon’s Mechanical Turk. Participants answered three sets of questions: one assessing individual differences in legacy motives, one assessing their beliefs about climate change, and one assessing their willingness to take pro-environmental action. Following these sets of questions, participants were told they would be entered into a lottery to win a $10 bonus. They were then given the option to donate part (between $0 and $10) of their bonus to an environmental cause (Trees for the Future). This last measure was meant to test whether people actually act on any intention to act pro-environmentally.

For ease of analysis, the three sets of questions measuring legacy motive, belief about the reality of climate change, and intention to take pro-environmental action were transformed into three overall scores by computing the average over the items in each set. After eliminating participants who did not answer all questions, we have data from \(n = 237\) participants. Figure 6.4 depicts the pairwise relations between the four variables. As can be seen, all variables are significantly correlated. The relation is most obvious for \(\texttt{belief}\) and \(\texttt{intention}\). Looking at the histogram of \(\texttt{donation}\), you can see that although all whole amounts between $0 and $10 have been chosen at least once, it looks like three values were particularly popular, namely $0, $5, and to a lesser extent $10. This results in what looks like a tri-modal distribution. This is not necessarily an issue when modelling \(\texttt{donation}\) with a regression model, as the assumptions in a regression model concern the prediction errors, and not the dependent variable itself.

Figure 6.4: Pairwise plots for legacy motives, climate change belief, intention for pro-environmental action, and donations.

According to the Theory of Planned Behavior (Ajzen, 1991), attitudes and norms shape a person’s behavioural intentions, which in turn result in behaviour itself. In the context of the present example, that could mean that legacy motive and climate change beliefs do not directly determine whether someone behaves in a pro-environmental way. Rather, these factors shape a person’s intentions towards pro-environmental behaviour, which in turn may actually lead to said pro-environmental behaviour. This is an example of an assumed causal chain, where legacy motive (partly) determines behavioural intention, and intention determines behaviour. Mediation analysis is aimed at detecting an indirect effect of a predictor (e.g. \(\texttt{legacy}\)) on the dependent variable (e.g. \(\texttt{donation}\)), via another variable called the mediator (e.g. \(\texttt{intention}\)), which is the middle variable in the causal chain.
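To get a feel for what such a causal chain implies for regression estimates, the following sketch simulates one. It does not use the data of Zaval et al.: the variables are generated with made-up effect sizes, and the helper `ols_slopes` and the labels `a`, `b`, `direct`, `indirect`, and `total` are names chosen for this illustration only. In the simulation, \(\texttt{legacy}\) affects \(\texttt{donation}\) only via \(\texttt{intention}\).

```python
import numpy as np

rng = np.random.default_rng(1)
n = 1000

# Simulated chain legacy -> intention -> donation (hypothetical effect sizes).
legacy = rng.normal(0, 1, n)
intention = 0.5 * legacy + rng.normal(0, 1, n)
donation = 0.8 * intention + rng.normal(0, 1, n)  # no direct effect of legacy


def ols_slopes(y, *predictors):
    """Least-squares slopes of y on the given predictors (intercept dropped)."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1:]


(total,) = ols_slopes(donation, legacy)             # legacy alone
(a,) = ols_slopes(intention, legacy)                # legacy -> intention
direct, b = ols_slopes(donation, legacy, intention) # both predictors
indirect = a * b                                    # effect via intention

print("slope of legacy on its own:", round(total, 3))
print("slope of legacy given intention:", round(direct, 3))
print("a * b:", round(indirect, 3))
```

With least-squares estimates computed on the same cases, the slope of \(\texttt{legacy}\) on its own equals the slope of \(\texttt{legacy}\) controlling for \(\texttt{intention}\) plus the product `a * b`. In this simulated chain nearly all of the relation between \(\texttt{legacy}\) and \(\texttt{donation}\) runs through \(\texttt{intention}\).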

6.2.2 Causal steps