Article • 8 min read

Critical Thinking

Developing the right mindset and skills.

By the Mind Tools Content Team

We make hundreds of decisions every day and, whether we realize it or not, we're all critical thinkers.

We use critical thinking each time we weigh up our options, prioritize our responsibilities, or think about the likely effects of our actions. It's a crucial skill that helps us to cut out misinformation and make wise decisions. The trouble is, we're not always very good at it!

In this article, we'll explore the key skills that you need to think critically, and how to adopt a critical thinking mindset, so that you can make well-informed decisions.

What Is Critical Thinking?

Critical thinking is the discipline of rigorously and skillfully using information, experience, observation, and reasoning to guide your decisions, actions, and beliefs. You'll need to actively question every step of your thinking process to do it well.

Collecting, analyzing and evaluating information is an important skill in life, and a highly valued asset in the workplace. People who score highly in critical thinking assessments are also rated by their managers as having good problem-solving skills, creativity, strong decision-making skills, and good overall performance. [1]

Key Critical Thinking Skills

Critical thinkers possess a set of key characteristics which help them to question information and their own thinking. Focus on the following areas to develop your critical thinking skills:

Curiosity

Being willing and able to explore alternative approaches and experimental ideas is crucial. Can you think through "what if" scenarios, create plausible options, and test out your theories? If not, you'll tend to write off ideas and options too soon, so you may miss the best answer to your situation.

To nurture your curiosity, stay up to date with facts and trends. You'll overlook important information if you allow yourself to become "blinkered," so always be open to new information.

But don't stop there! Look for opposing views or evidence to challenge your information, and seek clarification when things are unclear. This will help you to reassess your beliefs and make a well-informed decision later. Read our article, Opening Closed Minds, for more ways to stay receptive.

Logical Thinking

You must be skilled at reasoning and extending logic to come up with plausible options or outcomes.

It's also important to emphasize logic over emotion. Emotion can be motivating but it can also lead you to take hasty and unwise action, so control your emotions and be cautious in your judgments. Know when a conclusion is "fact" and when it is not. "Could-be-true" conclusions are based on assumptions and must be tested further. Read our article, Logical Fallacies, for help with this.

Use creative problem solving to balance cold logic. By thinking outside of the box you can identify new possible outcomes by using pieces of information that you already have.

Self-Awareness

Many of the decisions we make in life are subtly informed by our values and beliefs. These influences are called cognitive biases and it can be difficult to identify them in ourselves because they're often subconscious.

Practicing self-awareness will allow you to reflect on the beliefs you have and the choices you make. You'll then be better equipped to challenge your own thinking and make improved, unbiased decisions.

One particularly useful tool for critical thinking is the Ladder of Inference. It allows you to test and validate your thinking process, rather than jumping to poorly supported conclusions.

Developing a Critical Thinking Mindset

Combine the above skills with the right mindset so that you can make better decisions and adopt more effective courses of action. You can develop your critical thinking mindset by following this process:

Gather Information

First, collect data, opinions and facts on the issue that you need to solve. Draw on what you already know, and turn to new sources of information to help inform your understanding. Consider what gaps there are in your knowledge and seek to fill them. And look for information that challenges your assumptions and beliefs.

Be sure to verify the authority and authenticity of your sources. Not everything you read is true! Use this checklist to ensure that your information is valid:

- Are your information sources trustworthy? (For example, well-respected authors, trusted colleagues or peers, recognized industry publications, websites, blogs, etc.)

- Is the information you have gathered up to date?

- Has the information received any direct criticism?

- Does the information have any errors or inaccuracies?

- Is there any evidence to support or corroborate the information you have gathered?

- Is the information you have gathered subjective or biased in any way? (For example, is it based on opinion, rather than fact? Is any of the information you have gathered designed to promote a particular service or organization?)

If any information appears to be irrelevant or invalid, don't include it in your decision making. But don't omit information just because you disagree with it, or your final decision will be flawed and biased.

Now observe the information you have gathered, and interpret it. What are the key findings and main takeaways? What does the evidence point to? Start to build one or two possible arguments based on what you have found.

You'll need to look for the details within the mass of information, so use your powers of observation to identify any patterns or similarities. You can then analyze and extend these trends to make sensible predictions about the future.

To help you to sift through the multiple ideas and theories, it can be useful to group and order items according to their characteristics. From here, you can compare and contrast the different items. And once you've determined how similar or different things are from one another, Paired Comparison Analysis can help you to analyze them.
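To make the comparison step concrete, here is a minimal Python sketch of a paired comparison tally. It is one possible way to do it, not the definitive method: the option names, the `prefer` callback, and the 1-to-3 margin scale are all assumptions made for illustration.

```python
from itertools import combinations


def paired_comparison(options, prefer):
    """Tally weighted wins for each option across every possible pair.

    `prefer(a, b)` is a hypothetical callback that returns a tuple
    (winner, margin), where margin says how strongly the winner is
    preferred (assumed here to run from 1, slightly, to 3, strongly).
    """
    scores = {option: 0 for option in options}
    for a, b in combinations(options, 2):
        winner, margin = prefer(a, b)
        scores[winner] += margin
    # Sort so that the option with the highest total comes first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Illustrative judgments only; in practice these come from your own analysis.
judgments = {
    ("Option A", "Option B"): ("Option B", 2),
    ("Option A", "Option C"): ("Option A", 1),
    ("Option B", "Option C"): ("Option B", 3),
}

ranking = paired_comparison(
    ["Option A", "Option B", "Option C"],
    lambda a, b: judgments[(a, b)],
)
print(ranking)
```

Each option is judged against every other option exactly once, so no pair is skipped and the final totals show which option came out ahead most often and by how much.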

The final step involves challenging the information and rationalizing its arguments.

Apply the laws of reason (induction, deduction, analogy) to judge an argument and determine its merits. To do this, it's essential that you can determine the significance and validity of an argument to put it in the correct perspective. Take a look at our article, Rational Thinking, for more information about how to do this.

Once you have considered all of the arguments and options rationally, you can finally make an informed decision.

Afterward, take time to reflect on what you have learned and what you found challenging. Step back from the detail of your decision or problem, and look at the bigger picture. Record what you've learned from your observations and experience.

Critical thinking involves rigorously and skillfully using information, experience, observation, and reasoning to guide your decisions, actions and beliefs. It's a useful skill in the workplace and in life.

You'll need to be curious and creative to explore alternative possibilities, but rational to apply logic, and self-aware to identify when your beliefs could affect your decisions or actions.

You can demonstrate a high level of critical thinking by validating your information, analyzing its meaning, and finally evaluating the argument.

Critical Thinking Infographic

See Critical Thinking represented in our infographic: An Elementary Guide to Critical Thinking.

How to develop critical thinking skills

A client requests a tight deadline on an intense project. Your childcare provider calls in sick on a day full of meetings. Payment from a contract gig is a month behind.

Your day-to-day will always have challenges, big and small. And no matter the size and urgency, they all ask you to use critical thinking to analyze the situation and arrive at the right solution.

Critical thinking includes a wide set of soft skills that encourage continuous learning, resilience, and self-reflection. The more you add to your professional toolbelt, the more equipped you’ll be to tackle whatever challenge presents itself. Here’s how to develop critical thinking, with examples explaining how to use it.

What are critical thinking skills?

Critical thinking skills are the skills you use to analyze information, imagine scenarios holistically, and create rational solutions. It’s a type of emotional intelligence that stimulates effective problem-solving and decision-making.

When you fine-tune your critical thinking skills, you seek beyond face-value observations and knee-jerk reactions. Instead, you harvest deeper insights and string together ideas and concepts in logical, sometimes out-of-the-box, ways.

Imagine a team working on a marketing strategy for a new set of services. That team might use critical thinking to balance goals and key performance indicators, like new customer acquisition costs, average monthly sales, and net profit margins. They understand the connections between overlapping factors to build a strategy that stays within budget and attracts new sales.

Looking for ways to improve critical thinking skills? Start by brushing up on the following soft skills that fall under this umbrella:

- Analytical thinking: Approaching problems with an analytical eye includes breaking down complex issues into small chunks and examining their significance. An example could be organizing customer feedback to identify trends and improve your product offerings.

- Open-mindedness: Push past cognitive biases and be receptive to different points of view and constructive feedback. Managers and team members who keep an open mind position themselves to hear new ideas that foster innovation.

- Creative thinking: With creative thinking, you can develop several ideas to address a single problem, like brainstorming more efficient workflow best practices to boost productivity and employee morale.

- Self-reflection: Self-reflection lets you examine your thinking and assumptions to stimulate healthier collaboration and thought processes. Maybe a bad first impression created a negative anchoring bias with a new coworker. Reflecting on your own behavior stirs up empathy and improves the relationship.

- Evaluation: With evaluation skills, you tackle the pros and cons of a situation based on logic rather than emotion. When prioritizing tasks, you might be tempted to do the fun or easy ones first, but evaluating their urgency and importance can help you make better decisions.

How to develop critical thinking skills: 12 tips

There’s no magic method to change your thinking processes. Improvement happens with small, intentional changes to your everyday habits until a more critical approach to thinking is automatic.

Here are 12 tips for building stronger self-awareness and learning how to improve critical thinking:

1. Be cautious

There’s nothing wrong with a little bit of skepticism. One of the core principles of critical thinking is asking questions and dissecting the available information. You might surprise yourself at what you find when you stop to think before taking action.

Before making a decision, use evidence, logic, and deductive reasoning to support your own opinions or challenge ideas. It helps you and your team avoid falling prey to bad information or resistance to change.

2. Ask open-ended questions

“Yes” or “no” questions invite agreement rather than reflection. Instead, ask open-ended questions that force you to engage in analysis and rumination. Digging deeper can help you identify potential biases, uncover assumptions, and arrive at new hypotheses and possible solutions.

3. Do your research

No matter your proficiency, you can always learn more. Turning to different points of view and information is a great way to develop a comprehensive understanding of a topic and make informed decisions. You’ll prioritize reliable information rather than fall into emotional or automatic decision-making.

4. Consider several opinions

You might spend so much time on your work that it’s easy to get stuck in your own perspective, especially if you work independently on a remote team. Make an effort to reach out to colleagues to hear different ideas and thought patterns. Their input might surprise you.

If or when you disagree, remember that you and your team share a common goal. Divergent opinions are constructive, so shift the focus to finding solutions rather than defending disagreements.

5. Learn to be quiet

Active listening is the intentional practice of concentrating on a conversation partner instead of your own thoughts. It’s about paying attention to detail and letting people know you value their opinions, which can open your mind to new perspectives and thought processes.

If you’re brainstorming with your team or having a 1:1 with a coworker, listen, ask clarifying questions, and work to understand other people’s viewpoints. Listening to your team will help you find fallacies in arguments to improve possible solutions.

6. Schedule reflection

Whether waking up at 5 am or using a procrastination hack, scheduling time to think puts you in a growth mindset. Your mind has natural cognitive biases to help you simplify decision-making, but squashing them is key to thinking critically and finding new solutions besides the ones you might gravitate toward. Creating time and calm space in your day gives you the chance to step back and visualize the biases that impact your decision-making.

7. Cultivate curiosity

With so many demands and job responsibilities, it’s easy to seek solace in routine. But getting out of your comfort zone helps spark critical thinking and find more solutions than you usually might.

If curiosity doesn’t come naturally to you, cultivate a thirst for knowledge by reskilling and upskilling. Not only will you add a new skill to your resume, but expanding the limits of your professional knowledge might motivate you to ask more questions.

8. Play games

You don’t have to develop critical thinking skills exclusively in the office. Whether on your break or finding a hobby to do after work, playing strategic games or filling out crosswords can prime your brain for problem-solving.

9. Write it down

Recording your thoughts with pen and paper can lead to stronger brain activity than typing them out on a keyboard. If you’re stuck and want to think more critically about a problem, writing your ideas can help you process information more deeply.

The act of recording ideas on paper can also improve your memory. Ideas are more likely to linger in the background of your mind, leading to deeper thinking that informs your decision-making process.

10. Speak up

Take opportunities to share your opinion, even if it intimidates you. Whether at a networking event with new people or a meeting with close colleagues, try to engage with people who challenge or help you develop your ideas. Having conversations that force you to support your position encourages you to refine your argument and think critically.

11. Stay humble

Ideas and concepts aren’t the same as real-life actions. There may be such a thing as negative outcomes, but there’s no such thing as a bad idea. At the brainstorming stage, don’t be afraid to make mistakes.

Sometimes the best solutions come from off-the-wall, unorthodox decisions. Sit in your creativity, let ideas flow, and don’t be afraid to share them with your colleagues. Putting yourself in a creative mindset helps you see situations from new perspectives and arrive at innovative conclusions.

12. Embrace discomfort

Get comfortable feeling uncomfortable. It isn’t easy when others challenge your ideas, but sometimes, it’s the only way to see new perspectives and think critically.

By willingly stepping into unfamiliar territory, you foster the resilience and flexibility you need to become a better thinker. You’ll learn how to pick yourself up from failure and approach problems from fresh angles.

How to practice critical thinking skills at work

Thinking critically is easier said than done. To help you understand its impact (and how to use it), here are two scenarios that require critical thinking skills and provide teachable moments.

Scenario #1: Unexpected delays and budget

Imagine your team is working on producing an event. Unexpectedly, a vendor explains they’ll be a week behind on delivering materials. Then another vendor sends a quote that’s more than you can afford. Unless you develop a creative solution, the team will have to push back deadlines and go over budget, potentially costing the client’s trust.

Here’s how you could approach the situation with creative thinking:

- Analyze the situation holistically: Determine how the delayed materials and over-budget quote will impact the rest of your timeline and financial resources. That way, you can identify whether you need to build an entirely new plan with new vendors, or if it’s worth it to readjust time and resources.

- Identify your alternative options: With careful assessment, your team decides that another vendor can’t provide the same materials in a quicker time frame. You’ll need to rearrange assignment schedules to complete everything on time.

- Collaborate and adapt: Your team has an emergency meeting to rearrange your project schedule. You write down each deliverable and determine which ones you can and can’t complete by the deadline. To compensate for lost time, you rearrange your task schedule to complete everything that doesn’t need the delayed materials first, then advance as far as you can on the tasks that do.

- Check different resources: In the meantime, you scour through your contact sheet to find alternative vendors that fit your budget. Accounting helps by providing old invoices to determine which vendors have quoted less for previous jobs. After pulling all your sources, you find a vendor that fits your budget.

- Maintain open communication: You create a special Slack channel to keep everyone up to date on changes, challenges, and additional delays. Keeping an open line encourages transparency on the team’s progress and boosts everyone’s confidence.

Scenario #2: Differing opinions

A conflict arises between two team members on the best approach for a new strategy for a gaming app. One believes that small tweaks to the current content are necessary to maintain user engagement and stay within budget. The other believes a bold revamp is needed to encourage new followers and stronger sales revenue.

Here’s how critical thinking could help this conflict:

- Listen actively: Give both team members the opportunity to present their ideas free of interruption. Encourage the entire team to ask open-ended questions to more fully understand and develop each argument.

- Flex your analytical skills: After learning more about both ideas, everyone should objectively assess the benefits and drawbacks of each approach. Analyze each idea's risk, merits, and feasibility based on available data and the app’s goals and objectives.

- Identify common ground: The team discusses similarities between each approach and brainstorms ways to integrate both ideas, like making small but eye-catching modifications to existing content or using the same visual design in new media formats.

- Test new strategy: To test out the potential of a bolder strategy, the team decides to A/B test both approaches. You create a set of criteria to evenly distribute users by different demographics to analyze engagement, revenue, and customer turnover.

- Monitor and adapt: After implementing the A/B test, the team closely monitors the results of each strategy. You regroup and optimize the changes that provide stronger results after the testing. That way, all team members understand why you’re making the changes you decide to make.
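If you're wondering what "evenly distribute users by different demographics" could look like in practice, here's a rough Python sketch of a stratified split. It's only one way to do it: the `assign_variants` function, the `age_band` field, and the sample users are all made up for illustration and aren't taken from any particular A/B testing tool.

```python
import random
from collections import defaultdict


def assign_variants(users, stratum_key, seed=0):
    """Split users between variants A and B, balanced within each stratum.

    `stratum_key` names the demographic field to balance on (an assumed
    field such as "age_band"). A fixed seed keeps the split reproducible.
    """
    rng = random.Random(seed)

    # Group users by their demographic stratum.
    by_stratum = defaultdict(list)
    for user in users:
        by_stratum[user[stratum_key]].append(user)

    # Shuffle each group, then alternate A and B so every stratum
    # contributes (almost) equally to both variants.
    assignment = {}
    for group in by_stratum.values():
        rng.shuffle(group)
        for index, user in enumerate(group):
            assignment[user["id"]] = "A" if index % 2 == 0 else "B"
    return assignment


# Hypothetical users, for illustration only.
users = [
    {"id": 1, "age_band": "18-24"},
    {"id": 2, "age_band": "18-24"},
    {"id": 3, "age_band": "18-24"},
    {"id": 4, "age_band": "18-24"},
    {"id": 5, "age_band": "25-34"},
    {"id": 6, "age_band": "25-34"},
    {"id": 7, "age_band": "25-34"},
    {"id": 8, "age_band": "25-34"},
]

assignment = assign_variants(users, "age_band", seed=42)
print(assignment)
```

Balancing within each group means a difference in engagement or revenue between A and B is less likely to be caused by one variant simply getting more users from a particular demographic.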

Become your own best critic

You can’t think your problems away. But you can equip yourself with skills that help you move through your biggest challenges and find innovative solutions. Learning how to develop critical thinking is the start of honing an adaptable growth mindset.

Now that you have resources to increase critical thinking skills in your professional development, start by looking at your own habits. Identifying whether you embrace change or routine, are open or resistant to feedback, or turn to research or emotion will build self-awareness. From there, tweak and incorporate techniques to be a critical thinker when life presents you with a problem.

Elizabeth Perry, ACC

Elizabeth Perry is a Coach Community Manager at BetterUp. She uses strategic engagement strategies to cultivate a learning community across a global network of Coaches through in-person and virtual experiences, technology-enabled platforms, and strategic coaching industry partnerships. With over 3 years of coaching experience and a certification in transformative leadership and life coaching from Sofia University, Elizabeth leverages transpersonal psychology expertise to help coaches and clients gain awareness of their behavioral and thought patterns, discover their purpose and passions, and elevate their potential. She is a lifelong student of psychology, personal growth, and human potential as well as an ICF-certified ACC transpersonal life and leadership Coach.

Work Life is Atlassian’s flagship publication dedicated to unleashing the potential of every team through real-life advice, inspiring stories, and thoughtful perspectives from leaders around the world.

How to build critical thinking skills for better decision-making

It’s simple in theory, but tougher in practice – here are five tips to get you started.

Have you heard the riddle about two coins that equal thirty cents, but one of them is not a nickel? What about the one where a surgeon says they can’t operate on their own son?

Those brain teasers tap into your critical thinking skills. But your ability to think critically isn’t just helpful for solving those random puzzles – it plays a big role in your career.

An impressive 81% of employers say critical thinking carries a lot of weight when they’re evaluating job candidates. It ranks as the top competency companies consider when hiring recent graduates (even ahead of communication). Plus, once you’re hired, several studies show that critical thinking skills are highly correlated with better job performance.

So what exactly are critical thinking skills? And even more importantly, how do you build and improve them?

What is critical thinking?

Critical thinking is the ability to evaluate facts and information, remain objective, and make a sound decision about how to move forward.

Does that sound like how you approach every decision or problem? Not so fast. Critical thinking seems simple in theory but is much tougher in practice, which helps explain why 65% of employers say their organization has a need for more critical thinking.

In reality, critical thinking doesn’t come naturally to a lot of us. In order to do it well, you need to:

- Remain open-minded and inquisitive, rather than relying on assumptions or jumping to conclusions

- Ask questions and dig deep, rather than accepting information at face value

- Keep your own biases and perceptions in check to stay as objective as possible

- Rely on your emotional intelligence to fill in the blanks and gain a more well-rounded understanding of a situation

So, critical thinking isn’t just being intelligent or analytical. In many ways, it requires you to step outside of yourself, let go of your own preconceived notions, and approach a problem or situation with curiosity and fairness.

It’s a challenge, but it’s well worth it. Critical thinking skills will help you connect ideas, make reasonable decisions, and solve complex problems.

7 critical thinking skills to help you dig deeper

Critical thinking is often labeled as a skill itself (you’ll see it bulleted as a desired trait in a variety of job descriptions). But it’s better to think of critical thinking less as a distinct skill and more as a collection or category of skills.

To think critically, you’ll need to tap into a bunch of your other soft skills. Here are seven of the most important.

Open-mindedness

It’s important to kick off the critical thinking process with the idea that anything is possible. The more you’re able to set aside your own suspicions, beliefs, and agenda, the better prepared you are to approach the situation with the level of inquisitiveness you need.

That means not closing yourself off to any possibilities and allowing yourself the space to pull on every thread – yes, even the ones that seem totally implausible.

As Christopher Dwyer, Ph.D. writes in a piece for Psychology Today, “Even if an idea appears foolish, sometimes its consideration can lead to an intelligent, critically considered conclusion.” He goes on to compare the critical thinking process to brainstorming. Sometimes the “bad” ideas are what lay the foundation for the good ones.

Open-mindedness is challenging because it requires more effort and mental bandwidth than sticking with your own perceptions. Approaching problems or situations with true impartiality often means:

- Practicing self-regulation: Giving yourself a pause between when you feel something and when you actually react or take action.

- Challenging your own biases: Acknowledging your biases and seeking feedback are two powerful ways to get a broader understanding.

Critical thinking example

In a team meeting, your boss mentioned that your company newsletter signups have been decreasing and she wants to figure out why.

At first, you feel offended and defensive – it feels like she’s blaming you for the dip in subscribers. You recognize and rationalize that emotion before thinking about potential causes. You have a hunch about what’s happening, but you will explore all possibilities and contributions from your team members.

Observation

Observation is, of course, your ability to notice and process the details all around you (even the subtle or seemingly inconsequential ones). Critical thinking demands that you’re flexible and willing to go beyond surface-level information, and solid observation skills help you do that.

Your observations help you pick up on clues from a variety of sources and experiences, all of which help you draw a final conclusion. After all, sometimes it’s the most minuscule realization that leads you to the strongest conclusion.

Over the next week or so, you keep a close eye on your company’s website and newsletter analytics to see if numbers are in fact declining or if your boss’s concerns were just a fluke.

Research

Critical thinking hinges on objectivity. And, to be objective, you need to base your judgments on the facts – which you collect through research. You’ll lean on your research skills to gather as much information as possible that’s relevant to your problem or situation.

Keep in mind that this isn’t just about the quantity of information – quality matters too. You want to find data and details from a variety of trusted sources to drill past the surface and build a deeper understanding of what’s happening.

You dig into your email and website analytics to identify trends in bounce rates, time on page, conversions, and more. You also review recent newsletters and email promotions to understand what customers have received, look through current customer feedback, and connect with your customer support team to learn what they’re hearing in their conversations with customers.

Analysis

The critical thinking process is sort of like a treasure hunt – you’ll find some nuggets that are fundamental for your final conclusion and some that might be interesting but aren’t pertinent to the problem at hand.

That’s why you need analytical skills. They’re what help you separate the wheat from the chaff, prioritize information, identify trends or themes, and draw conclusions based on the most relevant and influential facts.

It’s easy to confuse analytical thinking with critical thinking itself, and it’s true there is a lot of overlap between the two. But analytical thinking is just a piece of critical thinking. It focuses strictly on the facts and data, while critical thinking incorporates other factors like emotions, opinions, and experiences.

As you analyze your research, you notice that one specific webpage has contributed to a significant decline in newsletter signups. While all of the other sources have stayed fairly steady with regard to conversions, that one has sharply decreased.

You decide to move on from your other hypotheses about newsletter quality and dig deeper into the analytics.

Inference

One of the traps of critical thinking is that it’s easy to feel like you’re never done. There’s always more information you could collect and more rabbit holes you could fall down.

But at some point, you need to accept that you’ve done your due diligence and make a decision about how to move forward. That’s where inference comes in. It’s your ability to look at the evidence and facts available to you and draw an informed conclusion based on those.

When you’re so focused on staying objective and pursuing all possibilities, inference can feel like the antithesis of critical thinking. But ultimately, it’s your inference skills that allow you to move out of the thinking process and onto the action steps.

You dig deeper into the analytics for the page that hasn’t been converting and notice that the sharp drop-off happened around the same time you switched email providers.

After looking more into the backend, you realize that the signup form on that page isn’t correctly connected to your newsletter platform. It seems like anybody who has signed up on that page hasn’t been fed to your email list.

Communication

If and when you identify a solution or answer, you can’t keep it close to the vest. You’ll need to use your communication skills to share your findings with the relevant stakeholders – like your boss, team members, or anybody who needs to be involved in the next steps.

Your analysis skills will come in handy here too, as they’ll help you determine what information other people need to know so you can avoid bogging them down with unnecessary details.

In your next team meeting, you pull up the analytics and show your team the sharp drop-off as well as the missing connection between that page and your email platform. You ask the web team to reinstall and double-check that connection and you also ask a member of the marketing team to draft an apology email to the subscribers who were missed.

Problem-solving

Critical thinking and problem-solving are two more terms that are frequently confused. After all, when you think critically, you’re often doing so with the objective of solving a problem.

The best way to understand how problem-solving and critical thinking differ is to think of problem-solving as much more narrow. You’re focused on finding a solution.

In contrast, you can use critical thinking for a variety of use cases beyond solving a problem – like answering questions or identifying opportunities for improvement. Even so, within the critical thinking process, you’ll flex your problem-solving skills when it comes time to take action.

Once the fix is implemented, you monitor the analytics to see if subscribers continue to increase. If not (or if they increase at a slower rate than you anticipated), you’ll roll out some other tests like changing the CTA language or the placement of the subscribe form on the page.

5 ways to improve your critical thinking skills

Think critically about critical thinking and you’ll quickly realize that it’s not as instinctive as you’d like it to be. Fortunately, your critical thinking skills are learned competencies and not inherent gifts – and that means you can improve them. Here’s how:

- Practice active listening: Active listening helps you process and understand what other people share. That’s crucial as you aim to be open-minded and inquisitive.

- Ask open-ended questions: If your critical thinking process involves collecting feedback and opinions from others, ask open-ended questions (meaning, questions that can’t be answered with “yes” or “no”). Doing so will give you more valuable information and also prevent your own biases from influencing people’s input.

- Scrutinize your sources: Figuring out what to trust and prioritize is crucial for critical thinking. Boosting your media literacy and asking more questions will help you be more discerning about what to factor in. It’s hard to strike a balance between skepticism and open-mindedness, but approaching information with questions (rather than unquestioning trust) will help you draw better conclusions.

- Play a game: Remember those riddles we mentioned at the beginning? As trivial as they might seem, games and exercises like those can help you boost your critical thinking skills. There are plenty of critical thinking exercises you can do individually or as a team.

- Give yourself time: Research shows that rushed decisions are often regrettable ones. That’s likely because critical thinking takes time – you can’t do it under the wire. So, for big decisions or hairy problems, give yourself enough time and breathing room to work through the process. It’s hard enough to think critically without a countdown ticking in your brain.

Critical thinking really is critical

The ability to think critically is important, but it doesn’t come naturally to most of us. It’s just easier to stick with biases, assumptions, and surface-level information.

But that route often leads you to rash judgments, shaky conclusions, and disappointing decisions. So here’s a conclusion we can draw without any more noodling: Even if it is more demanding on your mental resources, critical thinking is well worth the effort.

Advice, stories, and expertise about work life today.

- Reference Manager

- Simple TEXT file

People also looked at

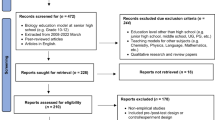

Original Research Article

Performance Assessment of Critical Thinking: Conceptualization, Design, and Implementation

- 1 Lynch School of Education and Human Development, Boston College, Chestnut Hill, MA, United States

- 2 Graduate School of Education, Stanford University, Stanford, CA, United States

- 3 Department of Business and Economics Education, Johannes Gutenberg University, Mainz, Germany

Enhancing students’ critical thinking (CT) skills is an essential goal of higher education. This article presents a systematic approach to conceptualizing and measuring CT. CT generally comprises the following mental processes: identifying, evaluating, and analyzing a problem; interpreting information; synthesizing evidence; and reporting a conclusion. We further posit that CT also involves dealing with dilemmas involving ambiguity or conflicts among principles and contradictory information. We argue that performance assessment provides the most realistic—and most credible—approach to measuring CT. From this conceptualization and construct definition, we describe one possible framework for building performance assessments of CT with attention to extended performance tasks within the assessment system. The framework is a product of an ongoing, collaborative effort, the International Performance Assessment of Learning (iPAL). The framework comprises four main aspects: (1) The storyline describes a carefully curated version of a complex, real-world situation. (2) The challenge frames the task to be accomplished. (3) A portfolio of documents in a range of formats is drawn from multiple sources chosen to have specific characteristics. (4) The scoring rubric comprises a set of scales each linked to a facet of the construct. We discuss a number of use cases, as well as the challenges that arise with the use and valid interpretation of performance assessments. The final section presents elements of the iPAL research program that involve various refinements and extensions of the assessment framework, a number of empirical studies, along with linkages to current work in online reading and information processing.

Introduction

In their mission statements, most colleges declare that a principal goal is to develop students’ higher-order cognitive skills such as critical thinking (CT) and reasoning (e.g., Shavelson, 2010 ; Hyytinen et al., 2019 ). The importance of CT is echoed by business leaders ( Association of American Colleges and Universities [AACU], 2018 ), as well as by college faculty (for curricular analyses in Germany, see e.g., Zlatkin-Troitschanskaia et al., 2018 ). Indeed, in the 2019 administration of the Faculty Survey of Student Engagement (FSSE), 93% of faculty reported that they “very much” or “quite a bit” structure their courses to support student development with respect to thinking critically and analytically. In a listing of 21st century skills, CT was the most highly ranked among FSSE respondents ( Indiana University, 2019 ). Nevertheless, there is considerable evidence that many college students do not develop these skills to a satisfactory standard ( Arum and Roksa, 2011 ; Shavelson et al., 2019 ; Zlatkin-Troitschanskaia et al., 2019 ). This state of affairs represents a serious challenge to higher education – and to society at large.

In view of the importance of CT, as well as evidence of substantial variation in its development during college, its proper measurement is essential to tracking progress in skill development and to providing useful feedback to both teachers and learners. Feedback can help focus students’ attention on key skill areas in need of improvement, and provide insight to teachers on choices of pedagogical strategies and time allocation. Moreover, comparative studies at the program and institutional level can inform higher education leaders and policy makers.

The conceptualization and definition of CT presented here is closely related to models of information processing and online reasoning, the skills that are the focus of this special issue. These two skills are especially germane to the learning environments that college students experience today when much of their academic work is done online. Ideally, students should be capable of more than naïve Internet search, followed by copy-and-paste (e.g., McGrew et al., 2017 ); rather, for example, they should be able to critically evaluate both sources of evidence and the quality of the evidence itself in light of a given purpose ( Leu et al., 2020 ).

In this paper, we present a systematic approach to conceptualizing CT. From that conceptualization and construct definition, we present one possible framework for building performance assessments of CT with particular attention to extended performance tasks within the test environment. The penultimate section discusses some of the challenges that arise with the use and valid interpretation of performance assessment scores. We conclude the paper with a section on future perspectives in an emerging field of research – the iPAL program.

Conceptual Foundations, Definition and Measurement of Critical Thinking

In this section, we briefly review the concept of CT and its definition. In accordance with the principles of evidence-centered design (ECD; Mislevy et al., 2003 ), the conceptualization drives the measurement of the construct; that is, implementation of ECD directly links aspects of the assessment framework to specific facets of the construct. We then argue that performance assessments designed in accordance with such an assessment framework provide the most realistic—and most credible—approach to measuring CT. The section concludes with a sketch of an approach to CT measurement grounded in performance assessment .

Concept and Definition of Critical Thinking

Taxonomies of 21st century skills ( Pellegrino and Hilton, 2012 ) abound, and it is neither surprising that CT appears in most taxonomies of learning, nor that there are many different approaches to defining and operationalizing the construct of CT. There is, however, general agreement that CT is a multifaceted construct ( Liu et al., 2014 ). Liu et al. (2014) identified five key facets of CT: (i) evaluating evidence and the use of evidence; (ii) analyzing arguments; (iii) understanding implications and consequences; (iv) developing sound arguments; and (v) understanding causation and explanation.

There is empirical support for these facets from college faculty. A 2016–2017 survey conducted by the Higher Education Research Institute (HERI) at the University of California, Los Angeles found that a substantial majority of faculty respondents “frequently” encouraged students to: (i) evaluate the quality or reliability of the information they receive; (ii) recognize biases that affect their thinking; (iii) analyze multiple sources of information before coming to a conclusion; and (iv) support their opinions with a logical argument ( Stolzenberg et al., 2019 ).

There is general agreement that CT involves the following mental processes: identifying, evaluating, and analyzing a problem; interpreting information; synthesizing evidence; and reporting a conclusion (e.g., Erwin and Sebrell, 2003 ; Kosslyn and Nelson, 2017 ; Shavelson et al., 2018 ). We further suggest that CT includes dealing with dilemmas of ambiguity or conflict among principles and contradictory information ( Oser and Biedermann, 2020 ).

Importantly, Oser and Biedermann (2020) posit that CT can be manifested at three levels. The first level, Critical Analysis, is the most complex of the three levels. Critical Analysis requires both knowledge in a specific discipline (conceptual) and procedural analytical (deduction, inclusion, etc.) knowledge. The second level is Critical Reflection, which involves more generic skills “… necessary for every responsible member of a society” (p. 90). It is “a basic attitude that must be taken into consideration if (new) information is questioned to be true or false, reliable or not reliable, moral or immoral etc.” (p. 90). To engage in Critical Reflection, one needs not only to apply analytic reasoning, but also to adopt a reflective stance toward the political, social, and other consequences of choosing a course of action. It also involves analyzing the potential motives of various actors involved in the dilemma of interest. The third level, Critical Alertness, involves questioning one’s own or others’ thinking from a skeptical point of view.

Wheeler and Haertel (1993) categorized higher-order skills, such as CT, into two types: (i) when solving problems and making decisions in professional and everyday life, for instance, related to civic affairs and the environment; and (ii) in situations where various mental processes (e.g., comparing, evaluating, and justifying) are developed through formal instruction, usually in a discipline. Hence, in both settings, individuals must confront situations that typically involve a problematic event, contradictory information, and possibly conflicting principles. Indeed, there is an ongoing debate concerning whether CT should be evaluated using generic or discipline-based assessments ( Nagel et al., 2020 ). Whether CT skills are conceptualized as generic or discipline-specific has implications for how they are assessed and how they are incorporated into the classroom.

In the iPAL project, CT is characterized as a multifaceted construct that comprises conceptualizing, analyzing, drawing inferences or synthesizing information, evaluating claims, and applying the results of these reasoning processes to various purposes (e.g., solve a problem, decide on a course of action, find an answer to a given question or reach a conclusion) ( Shavelson et al., 2019 ). In the course of carrying out a CT task, an individual typically engages in activities such as specifying or clarifying a problem; deciding what information is relevant to the problem; evaluating the trustworthiness of information; avoiding judgmental errors based on “fast thinking”; avoiding biases and stereotypes; recognizing different perspectives and how they can reframe a situation; considering the consequences of alternative courses of actions; and communicating clearly and concisely decisions and actions. The order in which activities are carried out can vary among individuals and the processes can be non-linear and reciprocal.

In this article, we focus on generic CT skills. The importance of these skills derives not only from their utility in academic and professional settings, but also the many situations involving challenging moral and ethical issues – often framed in terms of conflicting principles and/or interests – to which individuals have to apply these skills ( Kegan, 1994 ; Tessier-Lavigne, 2020 ). Conflicts and dilemmas are ubiquitous in the contexts in which adults find themselves: work, family, civil society. Moreover, to remain viable in the global economic environment – one characterized by increased competition and advances in second generation artificial intelligence (AI) – today’s college students will need to continually develop and leverage their CT skills. Ideally, colleges offer a supportive environment in which students can develop and practice effective approaches to reasoning about and acting in learning, professional and everyday situations.

Measurement of Critical Thinking

Critical thinking is a multifaceted construct that poses many challenges to those who would develop relevant and valid assessments. For those interested in current approaches to the measurement of CT that are not the focus of this paper, consult Zlatkin-Troitschanskaia et al. (2018) .

In this paper, we have singled out performance assessment as it offers important advantages for measuring CT. Extant tests of CT typically employ response formats such as forced-choice or short-answer, and scenario-based tasks (for an overview, see Liu et al., 2014). They all suffer from moderate to severe construct underrepresentation; that is, they fail to capture important facets of the CT construct such as perspective taking and communication. High fidelity performance tasks are viewed as more authentic in that they provide a problem context and require responses that are more similar to what individuals confront in the real world than what is offered by traditional multiple-choice items (Messick, 1994; Braun, 2019). This greater verisimilitude promises higher levels of construct representation and lower levels of construct-irrelevant variance. Such performance tasks have the capacity to measure facets of CT that are imperfectly assessed, if at all, using traditional assessments (Lane and Stone, 2006; Braun, 2019; Shavelson et al., 2019). However, these assertions must be empirically validated, and the measures should be subjected to psychometric analyses. Evidence of the reliability, validity, and interpretative challenges of performance assessment (PA) is extensively detailed in Davey et al. (2015).

We adopt the following definition of performance assessment:

A performance assessment (sometimes called a work sample when assessing job performance) … is an activity or set of activities that requires test takers, either individually or in groups, to generate products or performances in response to a complex, most often real-world task. These products and performances provide observable evidence bearing on test takers’ knowledge, skills, and abilities—their competencies—in completing the assessment ( Davey et al., 2015 , p. 10).

A performance assessment typically includes an extended performance task and short constructed-response and selected-response (i.e., multiple-choice) tasks (for examples, see Zlatkin-Troitschanskaia and Shavelson, 2019). In this paper, we refer to both individual performance- and constructed-response tasks as performance tasks (PT; for an example, see Table 1 in section “iPAL Assessment Framework”).

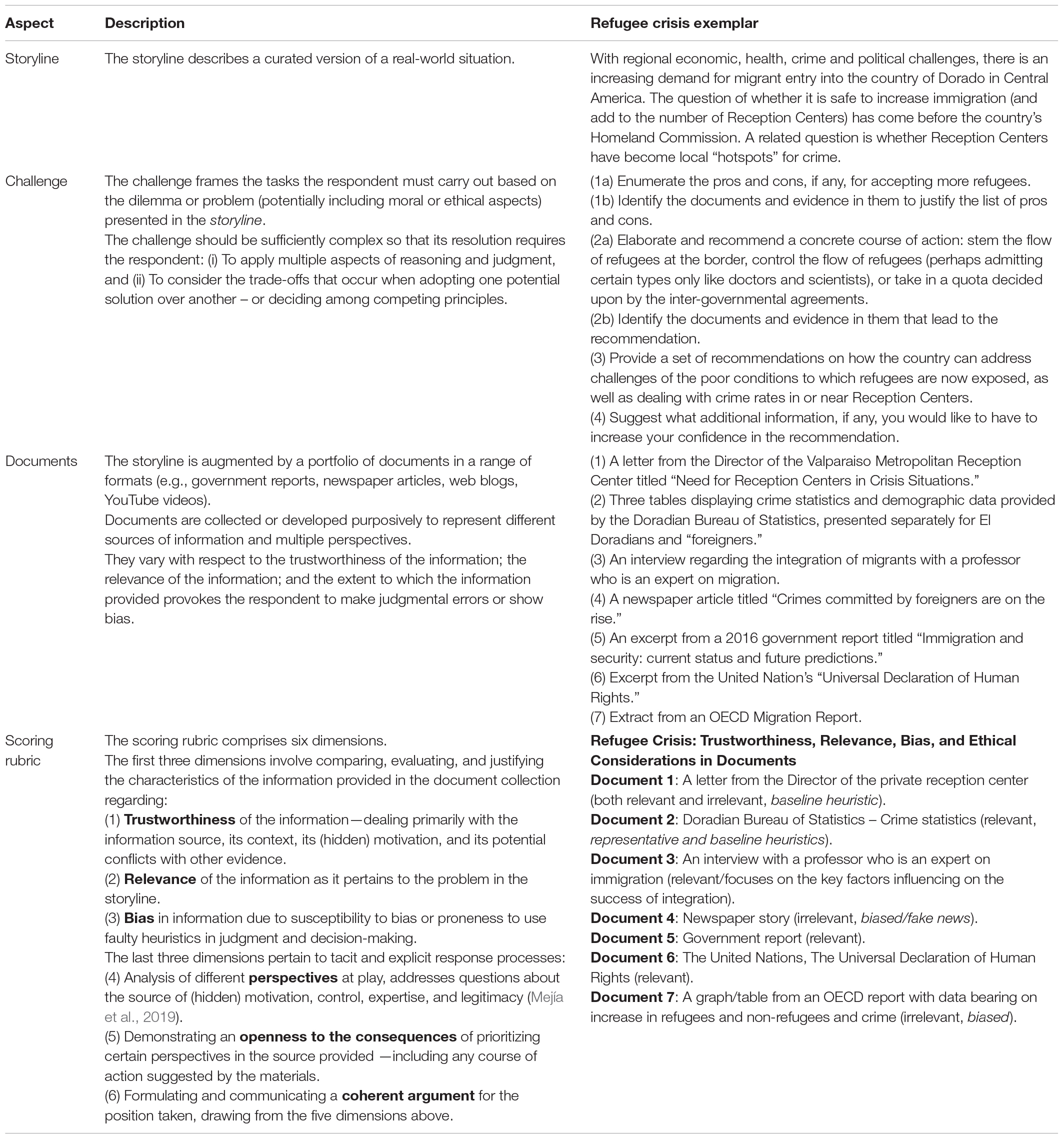

Table 1. The iPAL assessment framework.

An Approach to Performance Assessment of Critical Thinking: The iPAL Program

The approach to CT presented here is the result of ongoing work undertaken by the International Performance Assessment of Learning collaborative (iPAL 1 ). iPAL is an international consortium of volunteers, primarily from academia, who have come together to address the dearth in higher education of research and practice in measuring CT with performance tasks ( Shavelson et al., 2018 ). In this section, we present iPAL’s assessment framework as the basis of measuring CT, with examples along the way.

iPAL Background

The iPAL assessment framework builds on the Council for Aid to Education’s Collegiate Learning Assessment (CLA). The CLA was designed to measure cross-disciplinary, generic competencies, such as CT, analytic reasoning, problem solving, and written communication (Klein et al., 2007; Shavelson, 2010). Ideally, each PA contained an extended PT (e.g., examining a range of evidential materials related to the crash of an aircraft) and two short PTs: one in which students either critique an argument or provide a solution in response to a real-world societal issue.

Motivated by considerations of adequate reliability, the CLA was modified in 2012 to create the CLA+. The CLA+ includes two subtests: a PT and a 25-item Selected Response Question (SRQ) section. The PT presents a document or problem statement and an assignment based on that document which elicits an open-ended response. The CLA+ added the SRQ section (which is not linked substantively to the PT scenario) to increase the number of student responses to obtain more reliable estimates of performance at the student-level than could be achieved with a single PT (Zahner, 2013; Davey et al., 2015).

iPAL Assessment Framework

Methodological foundations.

The iPAL framework evolved from the Collegiate Learning Assessment developed by Klein et al. (2007) . It was also informed by the results from the AHELO pilot study ( Organisation for Economic Co-operation and Development [OECD], 2012 , 2013 ), as well as the KoKoHs research program in Germany (for an overview see, Zlatkin-Troitschanskaia et al., 2017 , 2020 ). The ongoing refinement of the iPAL framework has been guided in part by the principles of Evidence Centered Design (ECD) ( Mislevy et al., 2003 ; Mislevy and Haertel, 2006 ; Haertel and Fujii, 2017 ).

In educational measurement, an assessment framework plays a critical intermediary role between the theoretical formulation of the construct and the development of the assessment instrument containing tasks (or items) intended to elicit evidence with respect to that construct (Mislevy et al., 2003). Builders of the assessment framework draw on the construct theory and operationalize it in a way that provides explicit guidance to PT developers. Thus, the framework should reflect the relevant facets of the construct, where relevance is determined by substantive theory or an appropriate alternative such as behavioral samples from real-world situations of interest (criterion-sampling; McClelland, 1973), as well as the intended use(s) (for an example, see Shavelson et al., 2019). By following the requirements and guidelines embodied in the framework, instrument developers strengthen the claim of construct validity for the instrument (Messick, 1994).

An assessment framework can be specified at different levels of granularity: an assessment battery (“omnibus” assessment, for an example see below), a single performance task, or a specific component of an assessment ( Shavelson, 2010 ; Davey et al., 2015 ). In the iPAL program, a performance assessment comprises one or more extended performance tasks and additional selected-response and short constructed-response items. The focus of the framework specified below is on a single PT intended to elicit evidence with respect to some facets of CT, such as the evaluation of the trustworthiness of the documents provided and the capacity to address conflicts of principles.

From the ECD perspective, an assessment is an instrument for generating information to support an evidentiary argument and, therefore, the intended inferences (claims) must guide each stage of the design process. The construct of interest is operationalized through the Student Model , which represents the target knowledge, skills, and abilities, as well as the relationships among them. The student model should also make explicit the assumptions regarding student competencies in foundational skills or content knowledge. The Task Model specifies the features of the problems or items posed to the respondent, with the goal of eliciting the evidence desired. The assessment framework also describes the collection of task models comprising the instrument, with considerations of construct validity, various psychometric characteristics (e.g., reliability) and practical constraints (e.g., testing time and cost). The student model provides grounds for evidence of validity, especially cognitive validity; namely, that the students are thinking critically in responding to the task(s).

In the present context, the target construct (CT) is the competence of individuals to think critically, which entails solving complex, real-world problems, and clearly communicating their conclusions or recommendations for action based on trustworthy, relevant and unbiased information. The situations, drawn from actual events, are challenging and may arise in many possible settings. In contrast to more reductionist approaches to assessment development, the iPAL approach and framework rests on the assumption that properly addressing these situational demands requires the application of a constellation of CT skills appropriate to the particular task presented (e.g., Shavelson, 2010 , 2013 ). For a PT, the assessment framework must also specify the rubric by which the responses will be evaluated. The rubric must be properly linked to the target construct so that the resulting score profile constitutes evidence that is both relevant and interpretable in terms of the student model (for an example, see Zlatkin-Troitschanskaia et al., 2019 ).

iPAL Task Framework

The iPAL ‘omnibus’ framework comprises four main aspects: A storyline , a challenge , a document library , and a scoring rubric . Table 1 displays these aspects, brief descriptions of each, and the corresponding examples drawn from an iPAL performance assessment (Version adapted from original in Hyytinen and Toom, 2019 ). Storylines are drawn from various domains; for example, the worlds of business, public policy, civics, medicine, and family. They often involve moral and/or ethical considerations. Deriving an appropriate storyline from a real-world situation requires careful consideration of which features are to be kept in toto , which adapted for purposes of the assessment, and which to be discarded. Framing the challenge demands care in wording so that there is minimal ambiguity in what is required of the respondent. The difficulty of the challenge depends, in large part, on the nature and extent of the information provided in the document library , the amount of scaffolding included, as well as the scope of the required response. The amount of information and the scope of the challenge should be commensurate with the amount of time available. As is evident from the table, the characteristics of the documents in the library are intended to elicit responses related to facets of CT. For example, with regard to bias, the information provided is intended to play to judgmental errors due to fast thinking and/or motivational reasoning. Ideally, the situation should accommodate multiple solutions of varying degrees of merit.

The dimensions of the scoring rubric are derived from the Task Model and Student Model ( Mislevy et al., 2003 ) and signal which features are to be extracted from the response and indicate how they are to be evaluated. There should be a direct link between the evaluation of the evidence and the claims that are made with respect to the key features of the task model and student model . More specifically, the task model specifies the various manipulations embodied in the PA and so informs scoring, while the student model specifies the capacities students employ in more or less effectively responding to the tasks. The score scales for each of the five facets of CT (see section “Concept and Definition of Critical Thinking”) can be specified using appropriate behavioral anchors (for examples, see Zlatkin-Troitschanskaia and Shavelson, 2019 ). Of particular importance is the evaluation of the response with respect to the last dimension of the scoring rubric; namely, the overall coherence and persuasiveness of the argument, building on the explicit or implicit characteristics related to the first five dimensions. The scoring process must be monitored carefully to ensure that (trained) raters are judging each response based on the same types of features and evaluation criteria ( Braun, 2019 ) as indicated by interrater agreement coefficients.

The scoring rubric of the iPAL omnibus framework can be modified for specific tasks ( Lane and Stone, 2006 ). This generic rubric helps ensure consistency across rubrics for different storylines. For example, Zlatkin-Troitschanskaia et al. (2019 , p. 473) used the following scoring scheme:

Based on our construct definition of CT and its four dimensions: (D1-Info) recognizing and evaluating information, (D2-Decision) recognizing and evaluating arguments and making decisions, (D3-Conseq) recognizing and evaluating the consequences of decisions, and (D4-Writing), we developed a corresponding analytic dimensional scoring … The students’ performance is evaluated along the four dimensions, which in turn are subdivided into a total of 23 indicators as (sub)categories of CT … For each dimension, we sought detailed evidence in students’ responses for the indicators and scored them on a six-point Likert-type scale. In order to reduce judgment distortions, an elaborate procedure of ‘behaviorally anchored rating scales’ (Smith and Kendall, 1963) was applied by assigning concrete behavioral expectations to certain scale points (Bernardin et al., 1976). To this end, we defined the scale levels by short descriptions of typical behavior and anchored them with concrete examples. … We trained four raters in 1 day using a specially developed training course to evaluate students’ performance along the 23 indicators clustered into four dimensions (for a description of the rater training, see Klotzer, 2018).
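To make the structure of such a scheme concrete, the following minimal sketch shows one way ratings on individual indicators might be rolled up into dimension scores. The dimension labels are taken from the quoted scheme; the indicator names, the assumption that the six-point scale runs from 1 to 6, and the use of simple means across raters and indicators are all illustrative assumptions, not the procedure used by Zlatkin-Troitschanskaia et al. (2019).

```python
from statistics import mean

# Assumption: the six-point Likert-type scale is coded 1..6.
SCALE_MIN, SCALE_MAX = 1, 6


def dimension_scores(ratings):
    """Aggregate ratings into one score per dimension.

    ratings: {dimension: {indicator: [score from each rater, ...]}}
    Returns {dimension: mean of the per-indicator rater means}.
    Mean-based aggregation is an illustrative assumption.
    """
    result = {}
    for dimension, indicators in ratings.items():
        indicator_means = []
        for indicator, scores in indicators.items():
            for score in scores:
                if not SCALE_MIN <= score <= SCALE_MAX:
                    raise ValueError(
                        f"{dimension}/{indicator}: score {score} is off the scale"
                    )
            # Average across raters for this indicator.
            indicator_means.append(mean(scores))
        # Average across indicators within the dimension.
        result[dimension] = mean(indicator_means)
    return result


# Hypothetical indicator names and ratings from two raters.
example = {
    "D1-Info": {"source_credibility": [4, 5], "relevance": [3, 3]},
    "D4-Writing": {"structure": [5, 6]},
}
scores = dimension_scores(example)
print(scores)
```

Keeping the per-dimension scores separate, rather than collapsing them into a single total, preserves the profile that the rubric is meant to expose.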

Shavelson et al. (2019) examined the interrater agreement of the scoring scheme developed by Zlatkin-Troitschanskaia et al. (2019) and “found that with 23 items and 2 raters the generalizability (‘reliability’) coefficient for total scores to be 0.74 (with 4 raters, 0.84)” (Shavelson et al., 2019, p. 15). In the study by Zlatkin-Troitschanskaia et al. (2019, p. 478) three score profiles were identified (low-, middle-, and high-performer) for students. Proper interpretation of such profiles requires care. For example, there may be multiple possible explanations for low scores such as poor CT skills, a lack of a disposition to engage with the challenge, or the two attributes jointly. These alternative explanations for student performance can potentially pose a threat to the evidentiary argument. In this case, auxiliary information may be available to aid in resolving the ambiguity. For example, student responses to selected- and short-constructed-response items in the PA can provide relevant information about the levels of the different skills possessed by the student. When sufficient data are available, the scores can be modeled statistically and/or qualitatively in such a way as to bring them to bear on the technical quality or interpretability of the claims of the assessment: reliability, validity, and utility evidence (Davey et al., 2015; Zlatkin-Troitschanskaia et al., 2019). These kinds of concerns are less critical when PT’s are used in classroom settings. The instructor can draw on other sources of evidence, including direct discussion with the student.
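The relationship between the two reported coefficients (0.74 with 2 raters, 0.84 with 4 raters) can be approximated with the Spearman-Brown prophecy formula. The sketch below is a simplification and not the generalizability analysis reported by Shavelson et al. (2019): it assumes raters are interchangeable and that all error variance shrinks in proportion to the number of raters. The function name `spearman_brown` is introduced here for illustration.

```python
def spearman_brown(reliability: float, n_from: int, n_to: int) -> float:
    """Project a reliability coefficient from n_from raters to n_to raters.

    Applies the Spearman-Brown prophecy formula with lengthening
    factor k = n_to / n_from.
    """
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must lie in [0, 1]")
    if n_from <= 0 or n_to <= 0:
        raise ValueError("rater counts must be positive")
    k = n_to / n_from
    return k * reliability / (1.0 + (k - 1.0) * reliability)


# Start from the coefficient reported for 2 raters and project to 4 raters.
projected = spearman_brown(0.74, n_from=2, n_to=4)
print(f"projected coefficient with 4 raters: {projected:.3f}")  # prints 0.851
```

The projection of roughly 0.85 is close to the reported 0.84. A small gap is to be expected under this simplification, because in a design with both items and raters some error components do not decrease when only raters are added.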

Use of iPAL Performance Assessments in Educational Practice: Evidence From Preliminary Validation Studies

The assessment framework described here supports the development of a PT in a general setting. Many modifications are possible and, indeed, desirable. If the PT is to be more deeply embedded in a certain discipline (e.g., economics, law, or medicine), for example, then the framework must specify characteristics of the narrative and the complementary documents as to the breadth and depth of disciplinary knowledge that is represented.

To date, preliminary field trials employing the omnibus framework (i.e., a full set of documents) have indicated that 60 min was generally an inadequate amount of time for students to engage with the full set of complementary documents and to craft a complete response to the challenge (for an example, see Shavelson et al., 2019). Accordingly, it would be helpful to develop modified frameworks for PT’s that require substantially less time. For an example, see a short performance assessment of civic online reasoning, requiring response times from 10 to 50 min (Wineburg et al., 2016). Such assessment frameworks could be derived from the omnibus framework by focusing on a reduced number of facets of CT, and specifying the characteristics of the complementary documents to be included – or, perhaps, choices among sets of documents. In principle, one could build a ‘family’ of PT’s, each using the same (or nearly the same) storyline and a subset of the full collection of complementary documents.

Paul and Elder (2007) argue that the goal of CT assessments should be to provide faculty with important information about how well their instruction supports the development of students’ CT. In that spirit, the full family of PT’s could represent all facets of the construct while affording instructors and students more specific insights on strengths and weaknesses with respect to particular facets of CT. Moreover, the framework should be expanded to include the design of a set of short-answer and/or multiple-choice items to accompany the PT. Ideally, these additional items would be based on the same narrative as the PT to collect more nuanced information on students’ precursor skills such as reading comprehension, while enhancing the overall reliability of the assessment. Areas where students are under-prepared could be addressed before, or even in parallel with, the development of the focal CT skills. The parallel approach follows the co-requisite model of developmental education. In other settings (e.g., for summative assessment), these complementary items would be administered after the PT to augment the evidence in relation to the various claims. The full PT taking 90 min or more could serve as a capstone assessment.

As we transition from simply delivering paper-based assessments by computer to taking full advantage of the affordances of a digital platform, we should learn from the hard-won lessons of the past so that we can make swifter progress with fewer missteps. In that regard, we must take validity as the touchstone – assessment design, development and deployment must all be tightly linked to the operational definition of the CT construct. Considerations of reliability and practicality come into play with various use cases that highlight different purposes for the assessment (for future perspectives, see next section).

The iPAL assessment framework represents a feasible compromise between commercial, standardized assessments of CT (e.g., Liu et al., 2014), on the one hand, and, on the other, freedom for individual faculty to develop assessment tasks according to idiosyncratic models. It imposes a degree of standardization on both task development and scoring, while still allowing some flexibility for faculty to tailor the assessment to meet their unique needs. In so doing, it addresses a key weakness of the AAC&U’s VALUE initiative 2 (retrieved 5/7/2020) that has achieved wide acceptance among United States colleges.

The VALUE initiative has produced generic scoring rubrics for 15 domains including CT, problem-solving and written communication. A rubric for a particular skill domain (e.g., critical thinking) has five to six dimensions with four ordered performance levels for each dimension (1 = lowest, 4 = highest). The performance levels are accompanied by language that is intended to clearly differentiate among levels. 3 Faculty are asked to submit student work products from a senior level course that is intended to yield evidence with respect to student learning outcomes in a particular domain and that, they believe, can elicit performances at the highest level. The collection of work products is then graded by faculty from other institutions who have been trained to apply the rubrics.

A principal difficulty is that there is neither a common framework to guide the design of the challenge, nor any control on task complexity and difficulty. Consequently, there is substantial heterogeneity in the quality and evidential value of the submitted responses. This also causes difficulties with task scoring and inter-rater reliability. Shavelson et al. (2009) discuss some of the problems arising with non-standardized collections of student work.

In this context, one advantage of the iPAL framework is that it can provide valuable guidance and an explicit structure for faculty in developing performance tasks for both instruction and formative assessment. When faculty design assessments, their focus is typically on content coverage rather than other potentially important characteristics, such as the degree of construct representation and the adequacy of their scoring procedures (Braun, 2019).

Concluding Reflections

Challenges to interpretation and implementation.

Performance tasks such as those generated by iPAL are attractive instruments for assessing CT skills (e.g., Shavelson, 2010; Shavelson et al., 2019). The attraction mainly rests on the assumption that elaborated PT’s are more authentic (direct) and more completely capture facets of the target construct (i.e., possess greater construct representation) than the widely used selected-response tests. However, as Messick (1994) noted, authenticity is a “promissory note” that must be redeemed with empirical research. In practice, there are trade-offs among authenticity, construct validity, and psychometric quality such as reliability (Davey et al., 2015).

One reason for Messick’s (1994) caution is that authenticity does not guarantee construct validity. The latter must be established by drawing on multiple sources of evidence (American Educational Research Association et al., 2014). Following the ECD principles in designing and developing the PT, as well as the associated scoring rubrics, constitutes an important type of evidence. Further, as Leighton (2019) argues, response process data (“cognitive validity”) is needed to validate claims regarding the cognitive complexity of PT’s. Relevant data can be obtained through cognitive laboratory studies involving methods such as think-aloud protocols or eye-tracking. Although time-consuming and expensive, such studies can yield not only evidence of validity, but also valuable information to guide refinements of the PT.

Going forward, iPAL PT’s must be subjected to validation studies as recommended in the Standards for Educational and Psychological Testing by American Educational Research Association et al. (2014). With a particular focus on the criterion “relationships to other variables,” a framework should include assumptions about the theoretically expected relationships among the indicators assessed by the PT, as well as the indicators’ relationships to external variables such as intelligence or prior (task-relevant) knowledge.

Complementing the necessity of evaluating construct validity, there is the need to consider potential sources of construct-irrelevant variance (CIV). One pertains to student motivation, which is typically greater when the stakes are higher. If students are not motivated, then their performance is likely to be impacted by factors unrelated to their (construct-relevant) ability (Lane and Stone, 2006; Braun et al., 2011; Shavelson, 2013). Differential motivation across groups can also bias comparisons. Student motivation might be enhanced if the PT is administered in the context of a course with the promise of generating useful feedback on students’ skill profiles.

Construct-irrelevant variance can also occur when students are not equally prepared for the format of the PT or do not fully appreciate the response requirements. This source of CIV could be alleviated by providing students with practice PT’s. Finally, the use of novel forms of documentation, such as those from the Internet, can potentially introduce CIV due to differential familiarity with forms of representation or contents. Interestingly, this suggests that there may be a conflict between enhancing construct representation and reducing CIV.

Another potential source of CIV is related to response evaluation. Even with training, human raters can vary in accuracy and usage of the full score range. In addition, raters may attend to features of responses that are unrelated to the target construct, such as the length of the students’ responses or the frequency of grammatical errors (Lane and Stone, 2006). Some of these sources of variance could be addressed in an online environment, where word processing software could alert students to potential grammatical and spelling errors before they submit their final work product.

Performance tasks generally take longer to administer and are more costly than traditional assessments, making it more difficult to reliably measure student performance (Messick, 1994; Davey et al., 2015). Indeed, it is well known that more than one performance task is needed to obtain high reliability (Shavelson, 2013). This is due to both student-task interactions and variability in scoring. Sources of student-task interactions are differential familiarity with the topic (Hyytinen and Toom, 2019) and differential motivation to engage with the task. The level of reliability required, however, depends on the context of use. For use in formative assessment as part of an instructional program, reliability can be lower than for summative use. In the former case, other types of evidence are generally available to support interpretation and guide pedagogical decisions. Further studies are needed to obtain estimates of reliability in typical instructional settings.
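The dependence of reliability on both the number of tasks and the number of raters can be made explicit with the standard generalizability coefficient for relative decisions. The expression below is a sketch that assumes a fully crossed persons × tasks × raters random-effects design; the design actually used in any given iPAL study may differ, and the symbols are introduced here for illustration.

```latex
E\rho^2 \;=\;
\frac{\sigma^2_{p}}
     {\sigma^2_{p}
      + \dfrac{\sigma^2_{pt}}{n_t}
      + \dfrac{\sigma^2_{pr}}{n_r}
      + \dfrac{\sigma^2_{ptr,e}}{n_t\, n_r}}
```

Here $\sigma^2_{p}$ is the variance among students (the signal), $\sigma^2_{pt}$ is the student-task interaction, $\sigma^2_{pr}$ is the student-rater interaction, $\sigma^2_{ptr,e}$ is the residual, and $n_t$ and $n_r$ are the numbers of tasks and raters. When $\sigma^2_{pt}$ is large, adding raters alone leaves the term $\sigma^2_{pt}/n_t$ untouched, which is why more than one task is needed to reach high reliability.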